Introduction (TS 3.1, 3.3, 3.6, S6) #

Overview of main models #

We are now ready to introduce the main models with full generality, and to discuss an overall estimation, fitting, and forecasting approach. The main models we will review are:

- Autoregressive models of order

- Moving averages of order

Variations #

- Multiplicative seasonal ARIMA models:

- Pure seasonal autoregressive moving average

- Multiplicative seasonal autoregressive moving average models

- Seasonal autoregressive integrated moving average models

- Pure seasonal autoregressive moving average

- Multivariate time series—Vector Auto-Regressive

- Extra models mentioned by the CS2 syllabus:

- bilinear model

- threshold autoregressive model

Partial Autocorrelation Function (PACF) #

Motivation #

- There is one more property we need to introduce, the Partial Autocorrelation Function (PACF).

- The ACF can help identify

- However, the order of an

- What we need for autoregressive models is a measure of dependence at two different points in time,

with the effect of the other points in time removed. - We will remove the linear effect of all variables in-between, and so by increasing the lag iteratively, this partial correlation should be 0 after some lag (

Reminder: partial correlation #

- Let

- The partial correlation between

- Regressing

- Regressing

- Regressing

- Then the partial correlation is

- In the special case where the random variables are multivariate normal (and hence dependence is linear),

The partial autocorrelation function (PACF) #

The partial autocorrelation function (PACF) of a stationary process,

- The PACF,

- The PACF of an

- The R function

pacfwill display a sample

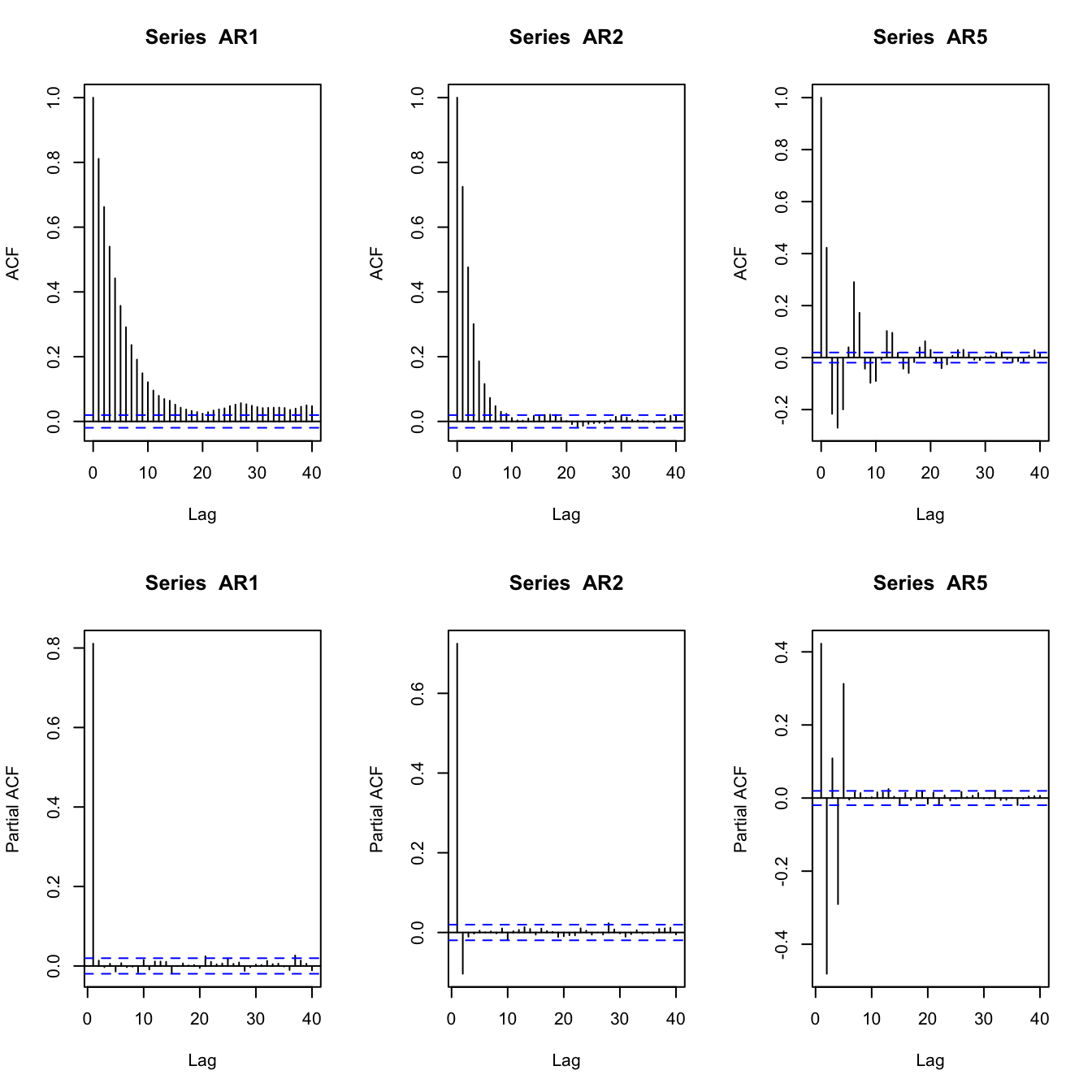

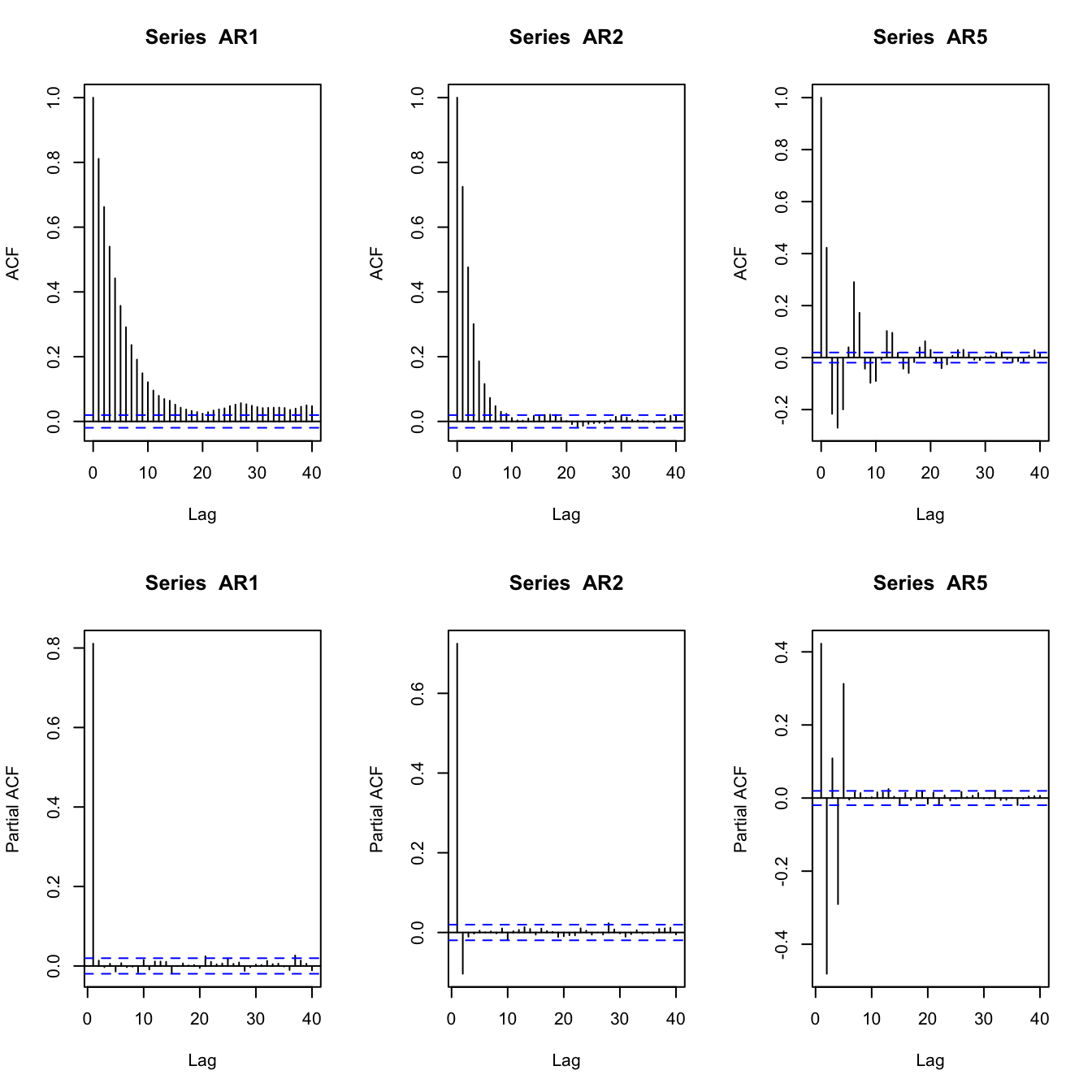

Examples of AR PACFs #

set.seed(2)

AR1 <- arima.sim(list(order = c(1, 0, 0), ar = 0.8), n = 10000)

AR2 <- arima.sim(list(order = c(2, 0, 0), ar = c(0.8, -0.1)),

n = 10000)

AR5 <- arima.sim(list(order = c(5, 0, 0), ar = c(0.8, -0.8, 0.5,

-0.5, 0.3)), n = 10000)

par(mfrow = c(2, 3))

acf(AR1)

acf(AR2)

acf(AR5)

pacf(AR1)

pacf(AR2)

pacf(AR5)

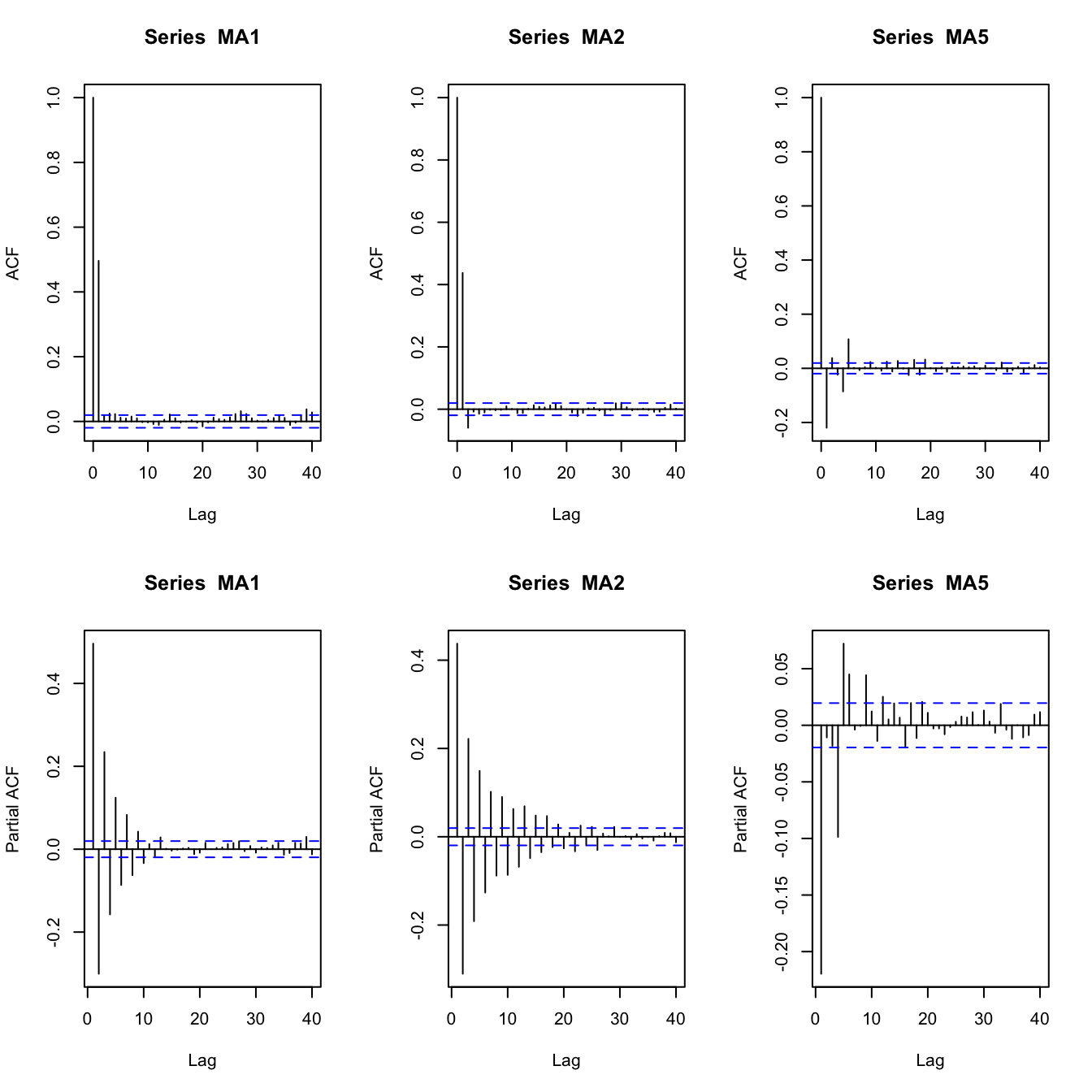

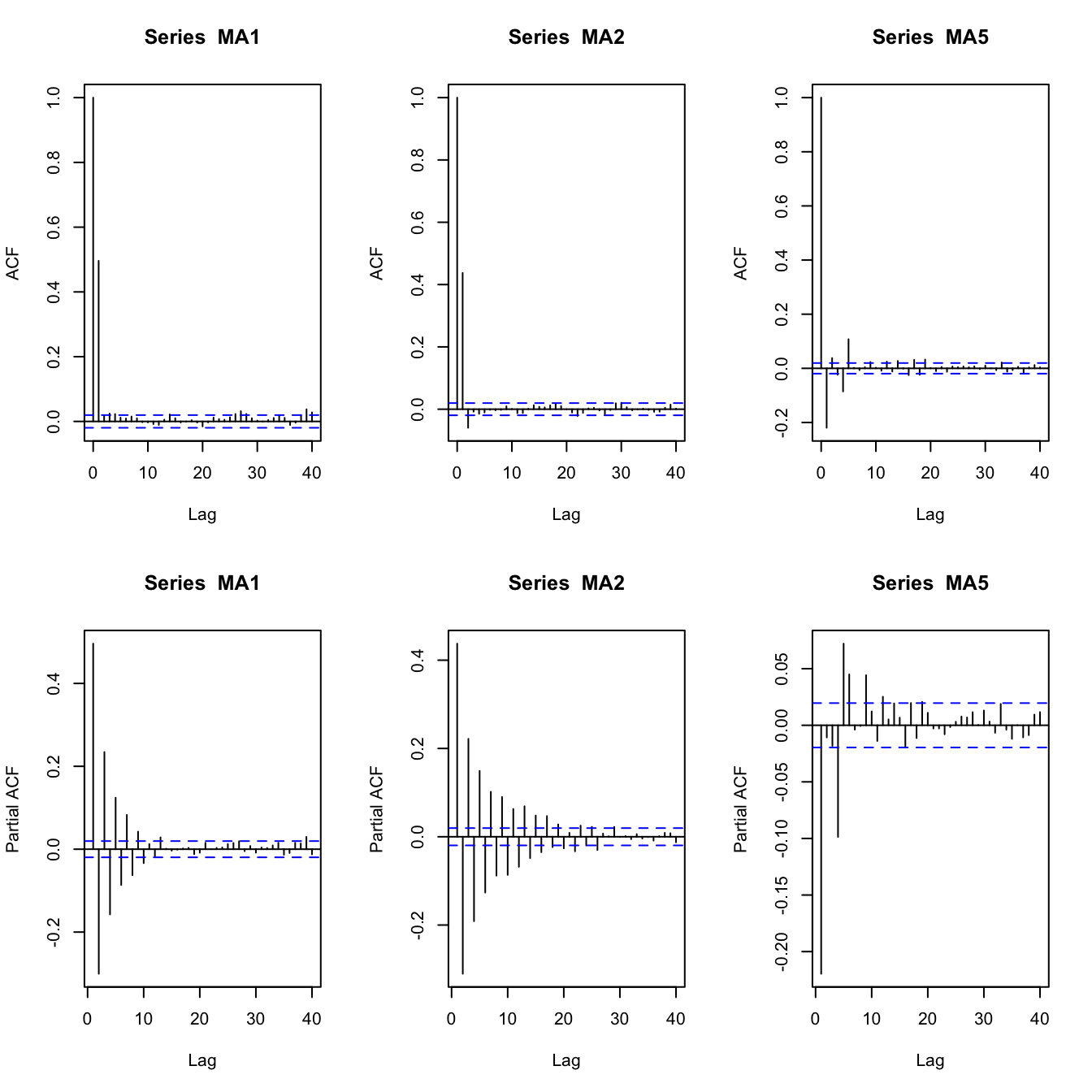

Examples of MA PACFs #

set.seed(2)

MA1 <- arima.sim(list(order = c(0, 0, 1), ma = 0.8), n = 10000)

MA2 <- arima.sim(list(order = c(0, 0, 2), ma = c(0.8, -0.1)),

n = 10000)

MA5 <- arima.sim(list(order = c(0, 0, 5), ma = c(0.8, -0.8, 0.5,

-0.5, 0.3)), n = 10000)

par(mfrow = c(2, 3))

acf(MA1)

acf(MA2)

acf(MA5)

pacf(MA1)

pacf(MA2)

pacf(MA5)

Definition #

An autoregressive model of order

Assume

- Unless stated otherwise we assume

- Recall that here the regressors

- The autoregressive operator is defined as

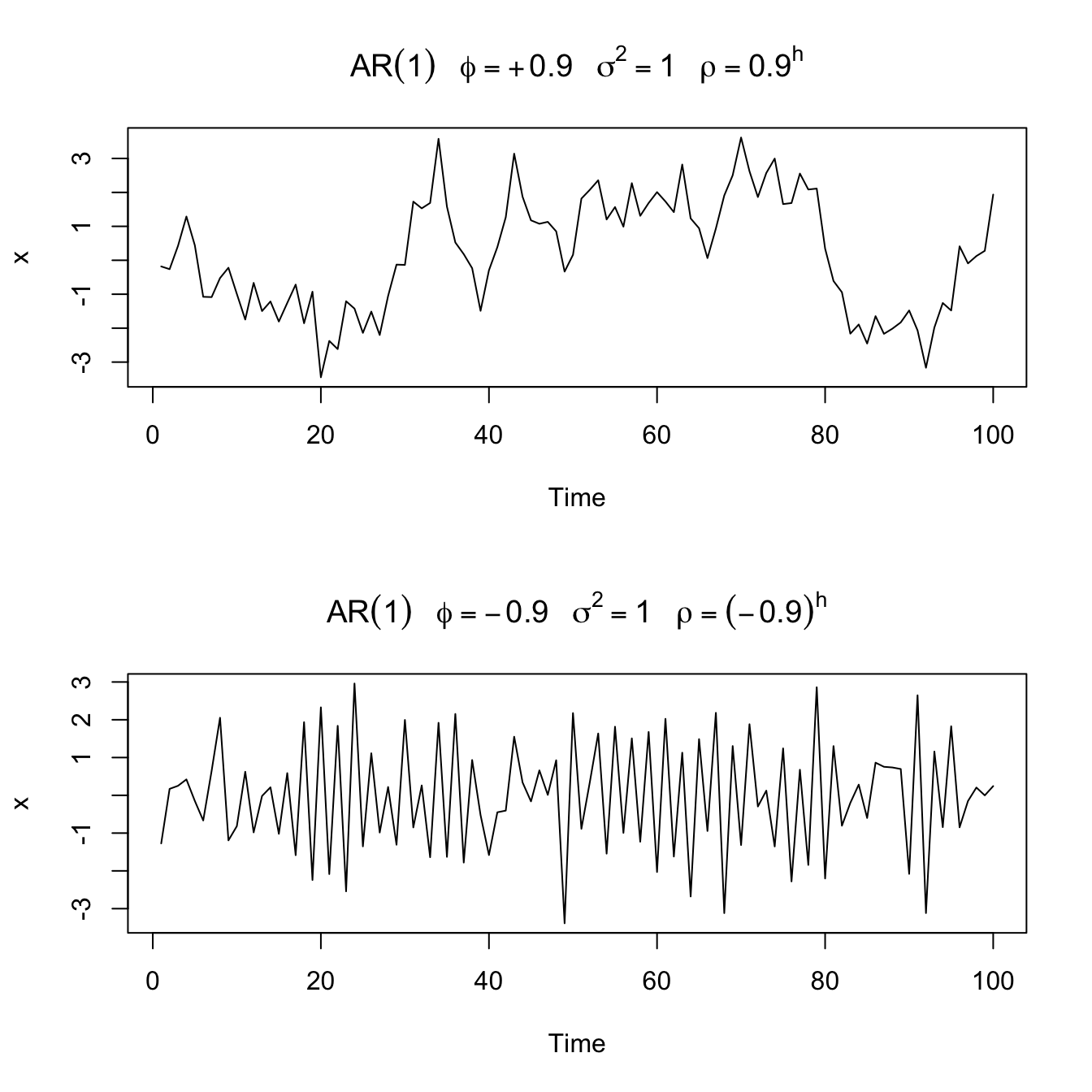

Example: AR(1) model #

An

The stationary solution of the model has mean

Sample paths of

par(mfrow = c(2, 1))

plot(arima.sim(list(order = c(1, 0, 0), ar = 0.9), n = 100),

ylab = "x", main = (expression(AR(1) ~ ~~phi == +0.9 ~ ~~sigma^2 ==

1 ~ ~~rho == 0.9^h)))

plot(arima.sim(list(order = c(1, 0, 0), ar = -0.9), n = 100),

ylab = "x", main = (expression(AR(1) ~ ~~phi == -0.9 ~ ~~sigma^2 ==

1 ~ ~~rho == (-0.9)^h)))

Stationarity (and Causality) #

Stationarity of

The time series process

We will get a sense of why as we make our way through this module.

ACF and PACF #

Autocovariances #

Remember that for stationary

R can calculate this for you (code is provided later).

Note:

- we expect

- this is equivalent to

- this is equivalent to our iff condition for

Yule-Walker equations #

- We have a solution form for

- Sometimes, it is quicker to use the so-called “Yule-Walker equations”. Consider the equation for an

- A matrix representation will be introduced later

Example #

- For an

- The second and third equations can be solved linearly to obtain expressions for

- For numerical values of

The matrix representation will help.

ACF #

As illustrated above

Note:

- For stationary and causal AR,

- If all the roots are real, then

- If some of the roots are complex, then they will be in conjugate pairs and

- This property flows on to ARMA models.

PACF #

When

[why? because this means that

This means that

Hence the PACF

In summary, we know that

“In-between”, we have that

Furthermore, it can be shown that

Explosive AR models and causality #

Remember the random walk (an

Examination of the

Unfortunately not all AR models are stationary. For the random walk,

Assume

Because

When a process does not depend on the future— such as

The model above with

Here is the lesson for

- stationary and causal are not equivalent conditions

- depending on the parameters of your

- that’s why the condition above stated “stationary and causal”

- this is further discussed in the ARMA section, where the argument above is further generalised to

Definition #

The moving average model of order

Note:

- The AR combines linearly the

- The moving average operator is defined as

- Unlike the AR, the MA is stationary for any value of the parameters

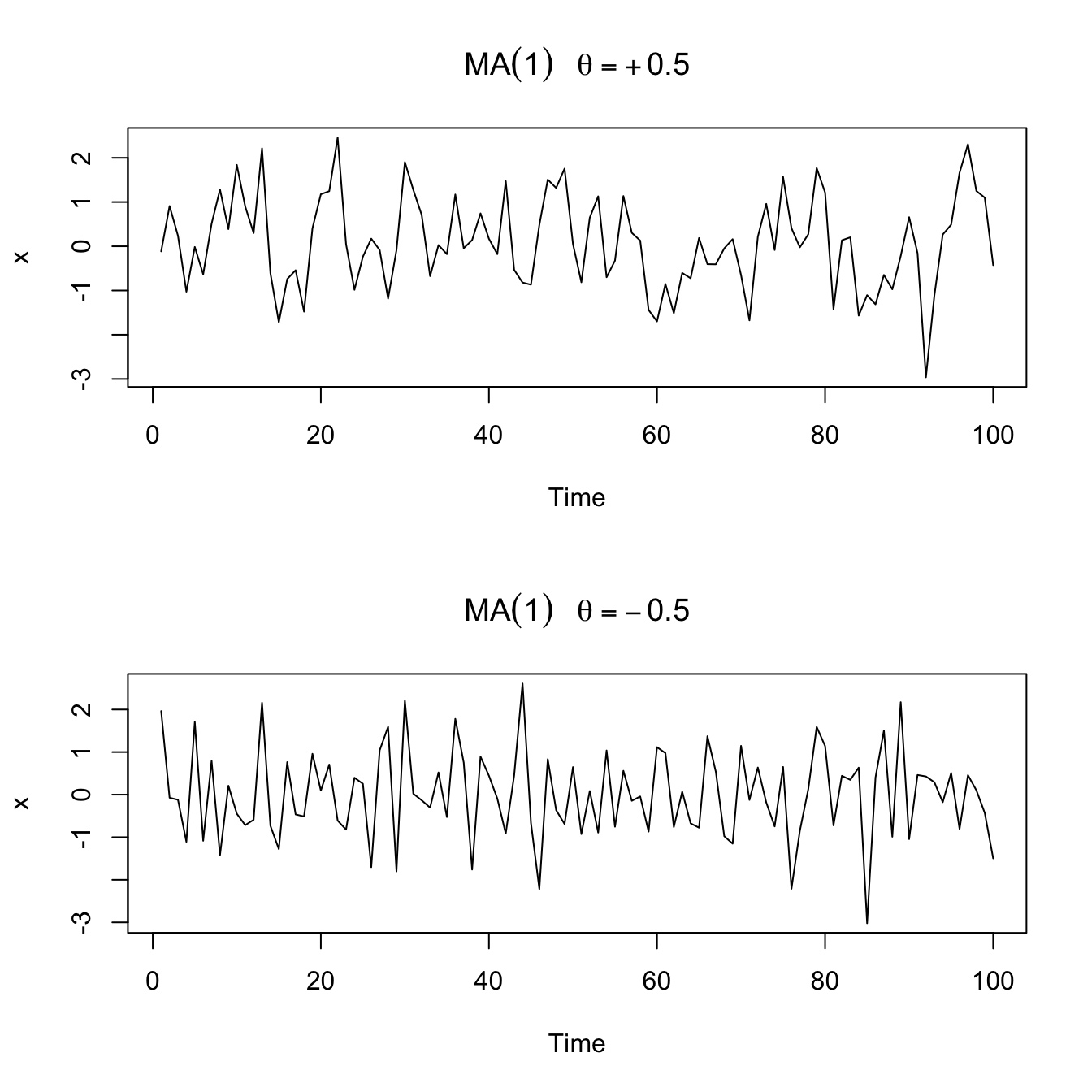

Example:

An

Sample paths of

par(mfrow = c(2, 1))

plot(arima.sim(list(order = c(0, 0, 1), ma = 0.5), n = 100),

ylab = "x", main = (expression(MA(1) ~ ~~theta == +0.5)))

plot(arima.sim(list(order = c(0, 0, 1), ma = -0.5), n = 100),

ylab = "x", main = (expression(MA(1) ~ ~~theta == -0.5)))

Non-uniqueness and invertibility #

- Note that (multiply the RHS by

- Furthermore, the pair

- Thus, the

- We can only observe

- We encountered this phenomenon already in Tutorial Exercise

TS5.

For convenience, we will systematically choose the version that is invertible, which means a process that has an infinite AR representation, as explained below.

Example:

- Consider the following inversion of the roles of

- Since we need

How can we generalise this to

- As in the AR case, the polynomial

- Just as we required

Example: in the

ACF and PACF #

Autocovariance #

We have

- the cutting off of

ACF #

The ACF is then

PACF #

An invertible

Example: For an

Definition #

A time series

This can be rewritten very concisely as

Parameter redundancy #

Multiplying both sides by a third operator

- Consider the white noise process

- If we multiply both sides by

- We have hidden that fact through over-parametrisation, leading to parameter redundancy.

- Fitting results are likely to suggest that all parameters (including

the unnecessary ones) are significant.

The AR and MA polynomials #

The AR and MA polynomials are defined as

These will be used extensively to study the properties of ARMA processes.

Properties #

Problems to keep in mind #

In the previous sections, we identified the following potential “problems”, or potential “issues to keep in mind”:

- parameter redundant models,

- stationary AR models that depend on the future, and

- MA models that are not unique.

We now discuss how to avoid those issues.

Parameter redundant models #

We will require:

This will ensure that when referring to an

Future dependence and causality #

[What does causal mean?] An

Note:

- key here is that the sum starts at

- the

[When is it causal?] An

To see this, note that the coefficients of the linear process given above can be determined by solving

Equivalently, an ARMA process is causal only when the roots of

lie outside the unit circle, that is,

Invertibility #

[What does invertible mean?] An

Note:

- key here is that we express the model as “$w_t$ is a function of

- the

[When is it invertible?] An

Example: parameter redundancy, causality, invertability #

Consider the process:

Factorise the common factor

[Parameter redundancy: checked!]

We next check whether the process is causal. We need the root of

We next check wether the model is invertible. We need the root of

Now, we would like to find the linear representation of the process, that is, get the

We obtain then

format(ARMAtoMA(ar = 0.9, ma = 0.5, 10), digits = 2) # first 10 psi-weights

## [1] "1.40" "1.26" "1.13" "1.02" "0.92" "0.83" "0.74" "0.67" "0.60"

## [10] "0.54"

[Linear representation: checked!]

(no, it’s not over yet!)

Next, we want to determine the invertible representation of the model. Because

We get

Again, this is much quicker in R: (

format(ARMAtoMA(ar = -0.5, ma = -0.9, 10), digits = 1) # first 10 pi-weights

## [1] "-1.400" " 0.700" "-0.350" " 0.175" "-0.087" " 0.044" "-0.022"

## [8] " 0.011" "-0.005" " 0.003"

[Invertible representation: checked!]

Stationarity, Causality and Invertibility #

Wrapping it up #

First, it is helpful to rewrite the ARMA representation as

- first, we require

- pure

models.

- pure

- now, even though

- Again, stationarity does not require the roots of

Revisiting the future-dependent example #

Let us revisit that example: We have

We distinguish three cases:

ACF and PACF #

Autocovariance and ACF #

For a causal

Alternatively, it is possible to write a general homogeneous equation for the ACF of a causal ARMA process to solve for the

(The proof is outside scope but available in TS):

Example: ACF of

Consider the

(see Example 3.12 in the textbook).

Solving for

Definition #

A process

ARMA models to include differencing.

Remarks #

- Because of nonstationarity, care must be taken when deriving forecasts. It is best to use so-called state-space models for handling nonstationary models (but these are outside the scope of this course).

- Since

- Derivation of prediction errors is slightly more involved (but also outside the scope of this course).

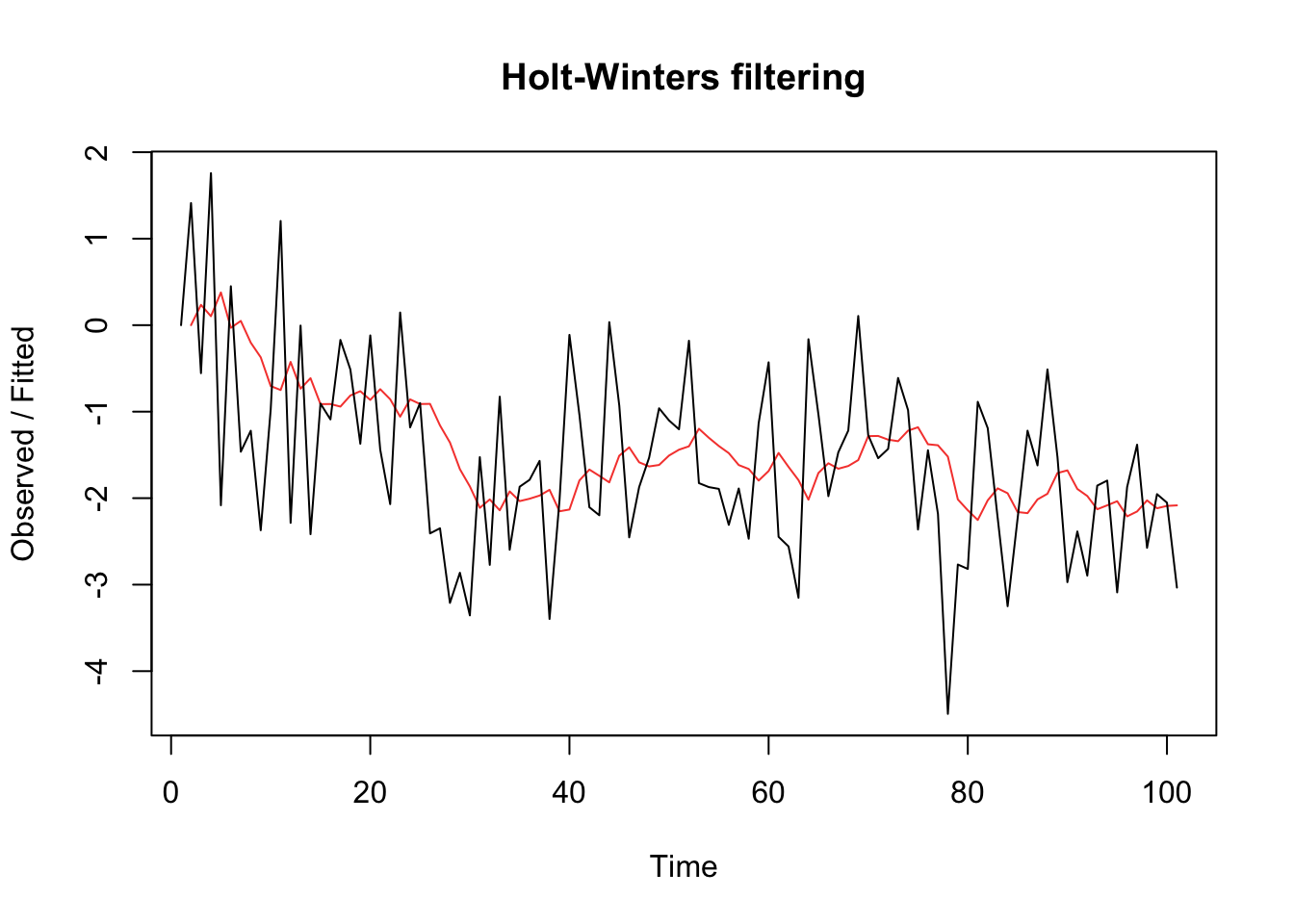

The IMA(1,1) model and exponential smoothing #

IMA(1,1) and EWMA #

- The

- The model leads to a frequently used— and abused! —forecasting method called exponentially weighted moving averages (EWMA) (“exponential smoothing” in S6).

- One should not use this method unless there is strong statistical evidence that this is the right model to use!

(as per the following sections)

EWMA example #

set.seed(666)

x <- arima.sim(list(order = c(0, 1, 1), ma = -0.8), n = 100) #simulate IMA(1,1)

x.ima <- HoltWinters(x, beta = FALSE, gamma = FALSE) # fit EWMA

plot(x.ima)

Multiplicative seasonal ARIMA models (TS 3.9) #

Introduction #

- We observed seasonality in many time series so far. This is manifested when the dependence on the past tends to occur most strongly at multiples of some underlying seasonal lag

- monthly economic data: strong yearly component occurring at lags that are multiples of

- data taken quarterly: will exhibit the yearly repetitive period at

- monthly economic data: strong yearly component occurring at lags that are multiples of

- In this section, we introduce several modifications made to the ARIMA model to account for seasonal and nonstationary behavior.

- The reference for this section is TS 3.9.

- Note that seasonality can be due to:

- Deterministic reasons: include in trend

- Stochastic dependence at seasonal lags

Pure seasonal

The pure seasonal autoregressive moving average model

Here, inter-temporal correlations are exclusively seasonal (there are no non-seasonal correlations).

Note: (analogous to nonseasonal ARMA)

- It will be causal only when the roots of

- It will be invertible only when the roots of

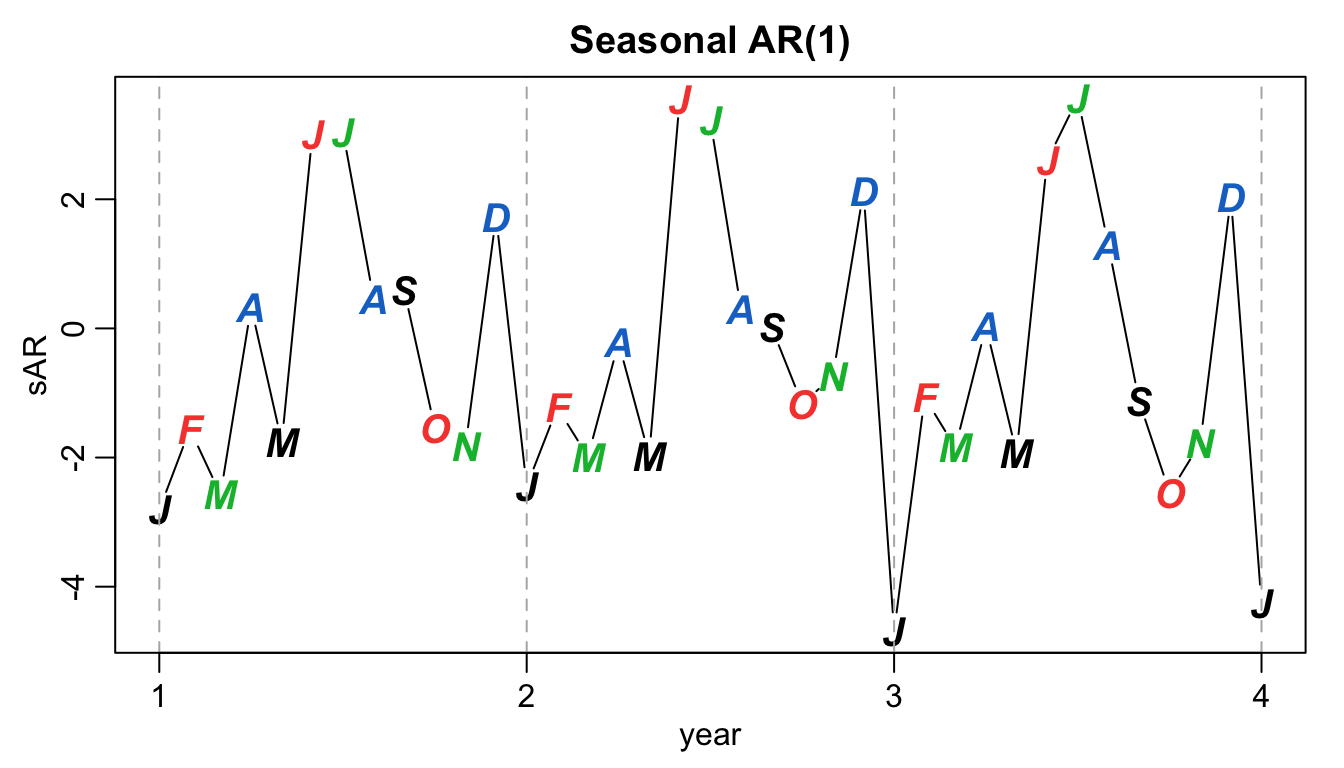

Example: first order seasonal

A first-order seasonal autoregressive series that might run over months could be written as

- very similar to the unit lag model

- causal if

Simulated example (with

set.seed(666)

phi <- c(rep(0, 11), 0.9)

sAR <- arima.sim(list(order = c(12, 0, 0), ar = phi), n = 37)

sAR <- ts(sAR, freq = 12)

par(mar = c(3, 3, 2, 1), mgp = c(1.6, 0.6, 0))

plot(sAR, axes = FALSE, main = "Seasonal AR(1)", xlab = "year",

type = "c")

Months <- c("J", "F", "M", "A", "M", "J", "J", "A", "S", "O",

"N", "D")

points(sAR, pch = Months, cex = 1.25, font = 4, col = 1:4)

axis(1, 1:4)

abline(v = 1:4, lty = 2, col = gray(0.7))

axis(2)

box()

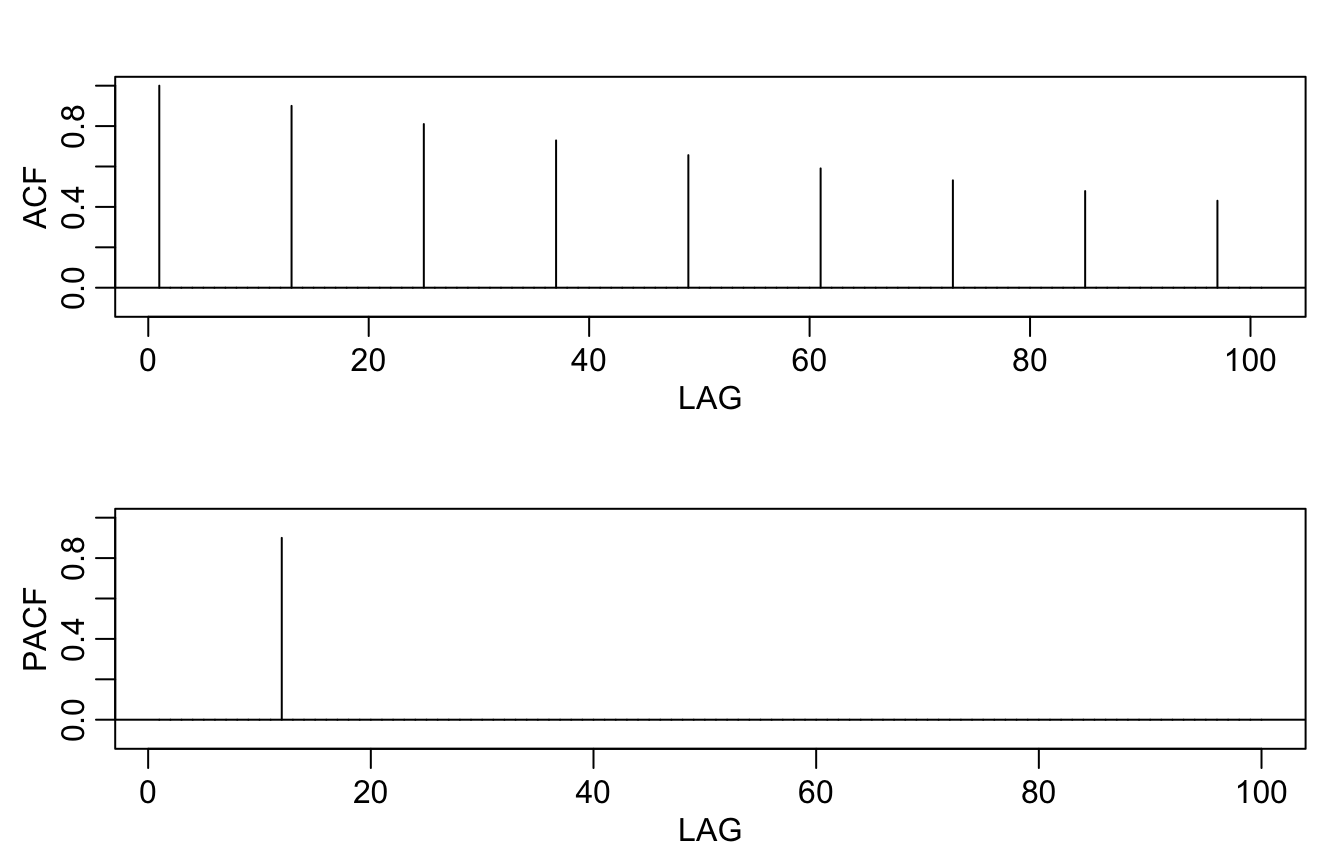

Theoretical ACF and PACF of the model:

ACF <- ARMAacf(ar = phi, ma = 0, 100)

PACF <- ARMAacf(ar = phi, ma = 0, 100, pacf = TRUE)

par(mfrow = c(2, 1), mar = c(3, 3, 2, 1), mgp = c(1.6, 0.6, 0))

plot(ACF, type = "h", xlab = "LAG", ylim = c(-0.1, 1))

abline(h = 0)

plot(PACF, type = "h", xlab = "LAG", ylim = c(-0.1, 1))

abline(h = 0)

Autocovariance and ACF of first-order seasonal models #

- For the first-order seasonal

- For the first-order seasonal

Multiplicative seasonal

In general, we can combine the seasonal and nonseasonal operators into a multiplicative seasonal autoregressive moving average model

Note:

- When selecting a model, we will need to carefully examine the ACF and PACF of the data.

- Choosing the seasonal autoregressive and moving average components first will generally lead to better results.

- This will be discussed more in Module 10.

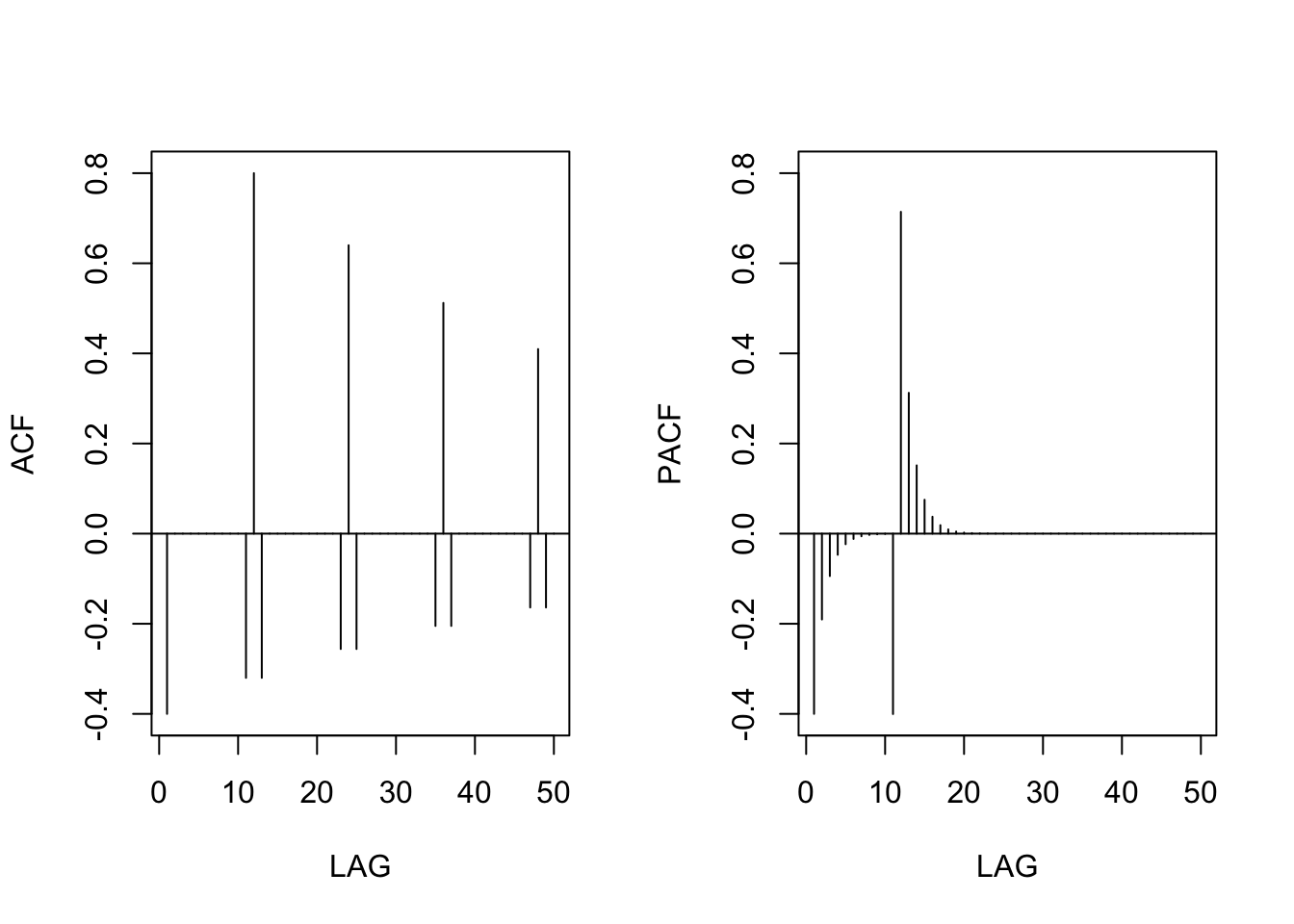

Example: A mixed seasonal model

Consider an

Because

then

Furthermore, multiplying the model by

Thus, the ACF for this model (requires some algebra) is

Example: if

phi <- c(rep(0, 11), 0.8)

ACF <- ARMAacf(ar = phi, ma = -0.5, 50)[-1] # [-1] removes 0 lag

PACF <- ARMAacf(ar = phi, ma = -0.5, 50, pacf = TRUE)

par(mfrow = c(1, 2))

plot(ACF, type = "h", xlab = "LAG", ylim = c(-0.4, 0.8))

abline(h = 0)

plot(PACF, type = "h", xlab = "LAG", ylim = c(-0.4, 0.8))

abline(h = 0)

Seasonal differencing #

Motivating example #

- Consider average temperatures over the years.

- Each January would be approximately the same (as would February, etc…).

- In this case we might think of the average monthly temperature as

- Here,

- Note

- If we subtract the effect of successive years from each other (“seasonal differencing”), we find that

- This model is a stationary purely seasonal

- Such a model would exhibit an ACF that is large and decays very slowly at lags

Seasonal differencing #

- In general, seasonal differencing can be indicated when the ACF decays slowly at multiples of some season

- The seasonal difference of order

- Typically,

How do we combine this idea with an ARIMA model?

SARIMA model

The multiplicative seasonal autoregressive integrated moving average model, or SARIMA model is given by

Note:

- This is denoted as

- The ordinary autoregressive and moving average components are represented by polynomials

- The seasonal autoregressive and moving average components by

- The ordinary and seasonal difference components by

A typical SARIMA model #

Consider the following

Note:

- This model often provides a reasonable representation for seasonal, nonstationary, economic time series.

- This model can be represented equivalently as

Yet another representation is

- The multiplicative nature implies that the coefficient of

- However, this often works well, and reduces the number of parameters needed.

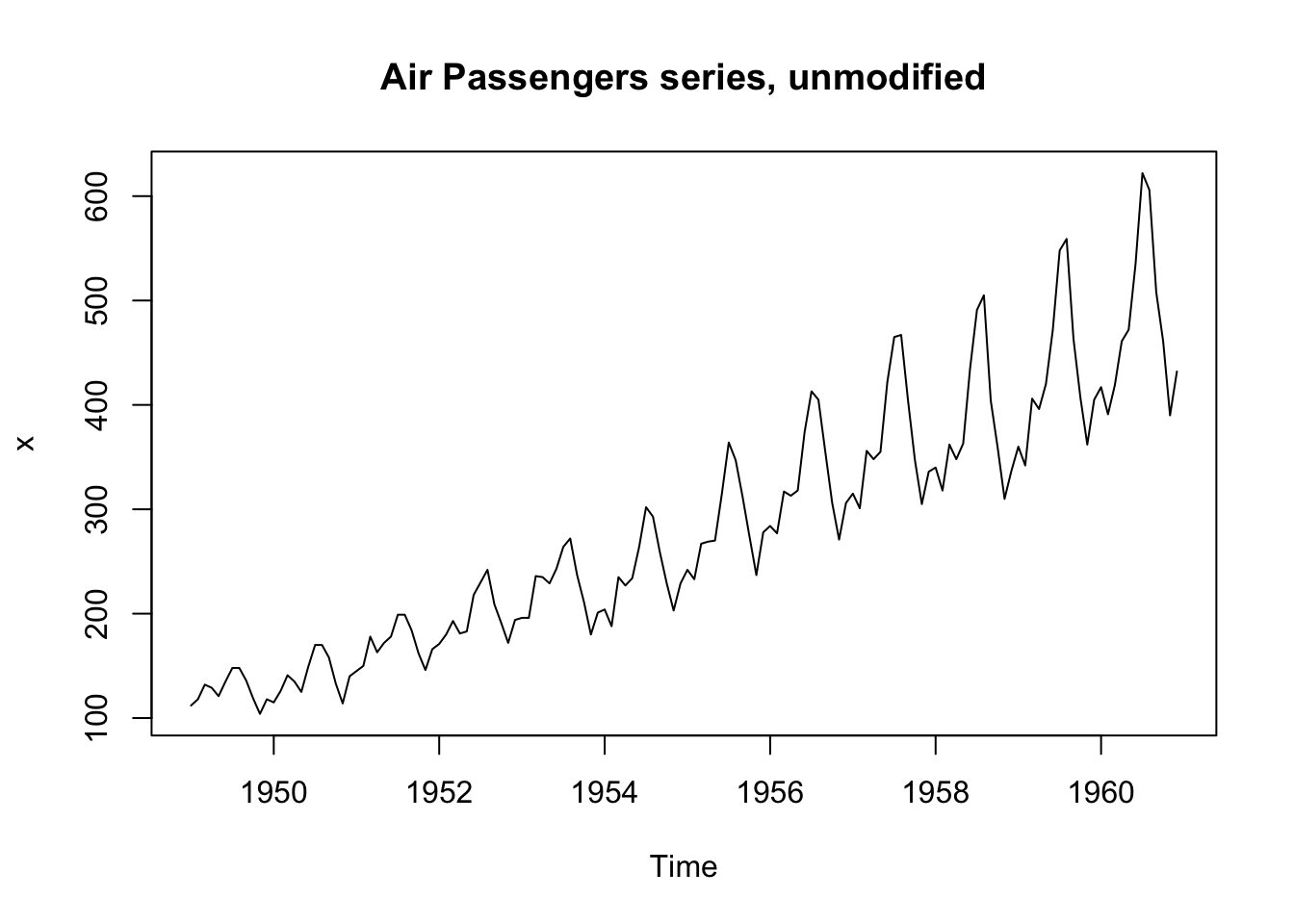

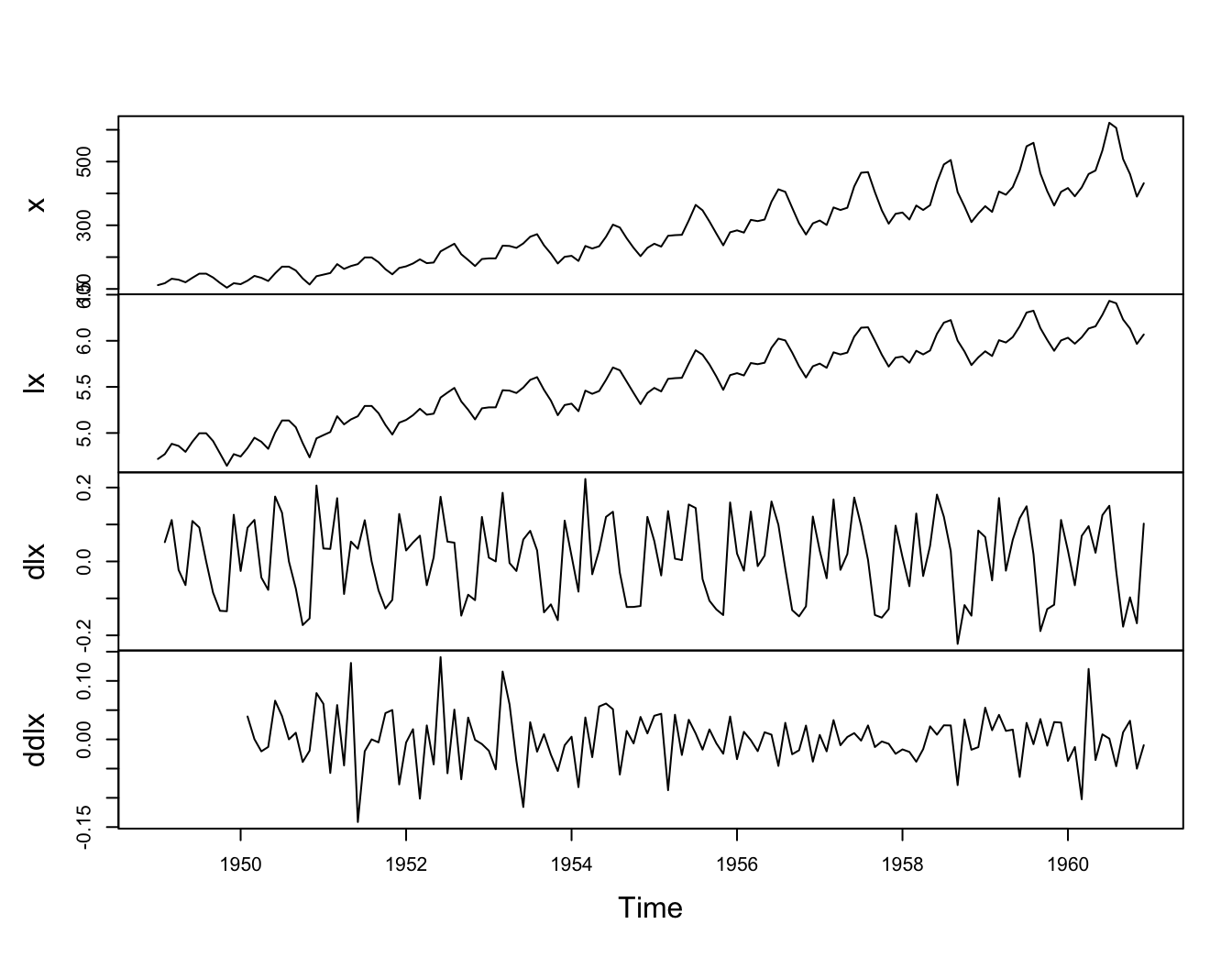

Example: Air Passengers #

Consider the R data set AirPassengers, which are the monthly totals of international airline passengers, 1949 to 1960, taken from Box & Jenkins (1970):

x <- AirPassengers

We’ll explore this dataset and see we can “difference out” some seasonality.

Let’s have a look at the series:

plot.ts(x, main = "Air Passengers series, unmodified")

This shows trend plus increasing variance

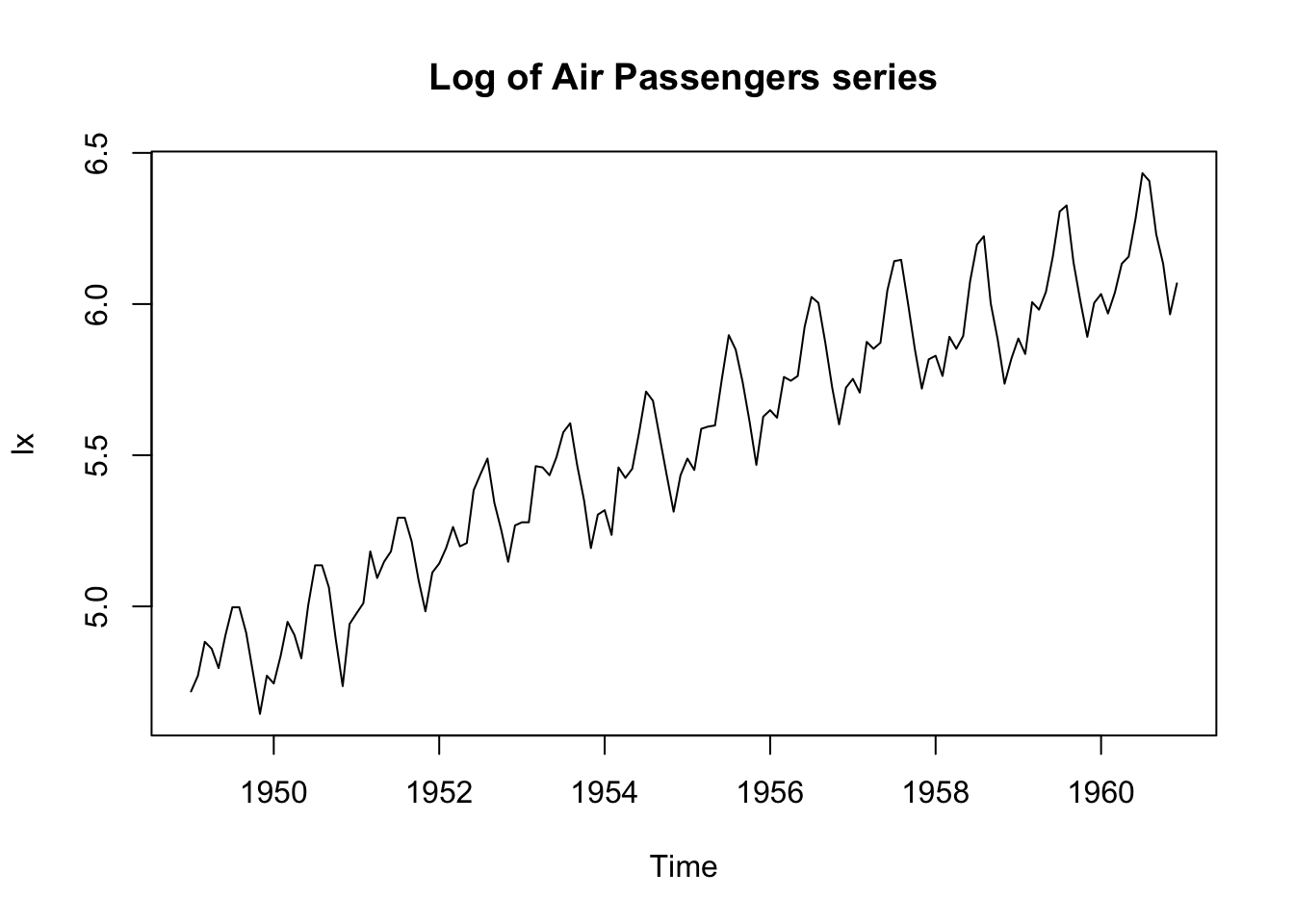

lx <- log(x)

plot.ts(lx, main = "Log of Air Passengers series")

The log transformation has stabilised the variance.

We now need to remove the trend, and try differencing:

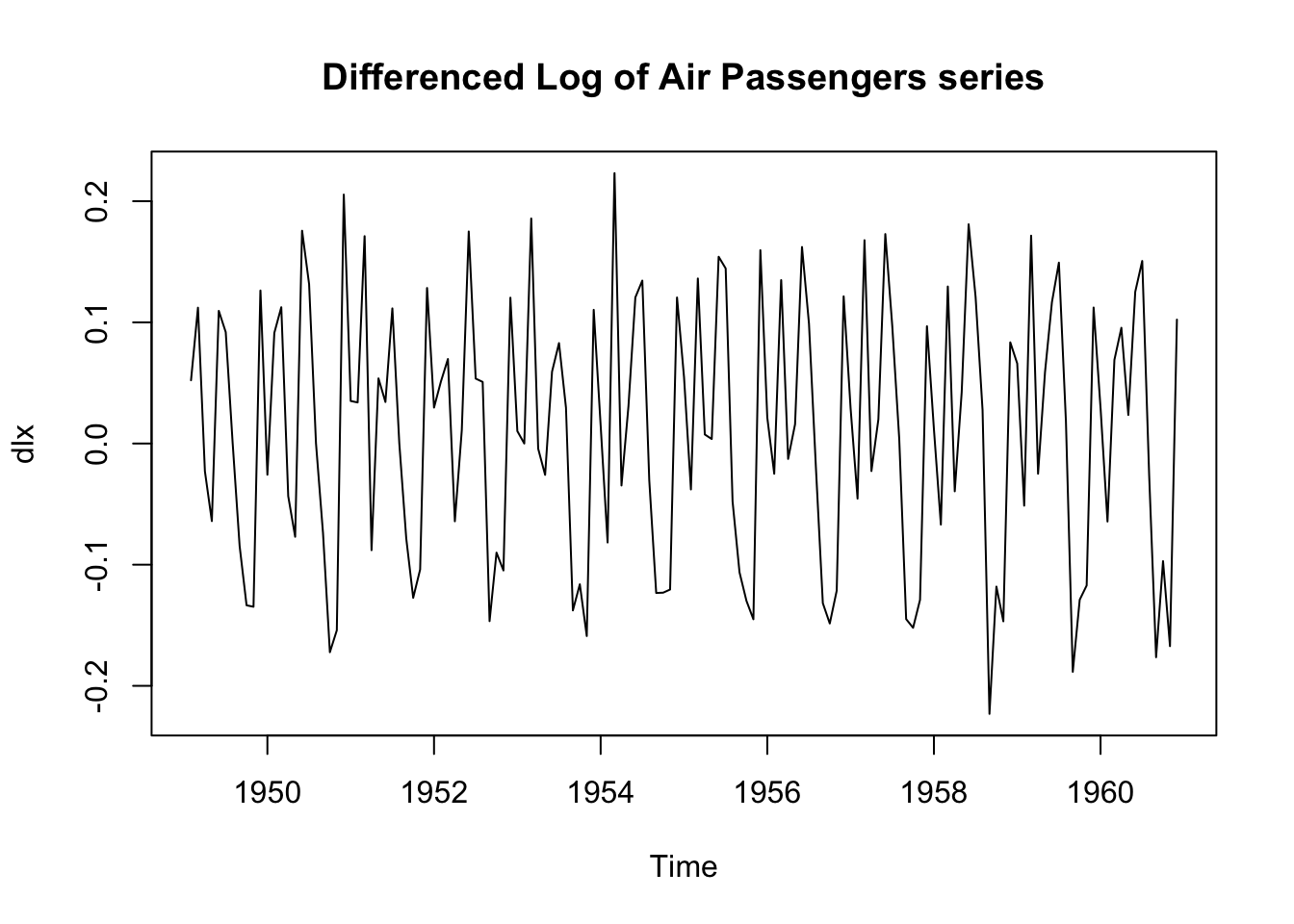

dlx <- diff(lx)

plot.ts(dlx, main = "Differenced Log of Air Passengers series")

It is clear that there is still persistence in the seasons, that is,

dlx$t\approx;$ dlx${t-12}$.

We apply a twelvth-order difference:

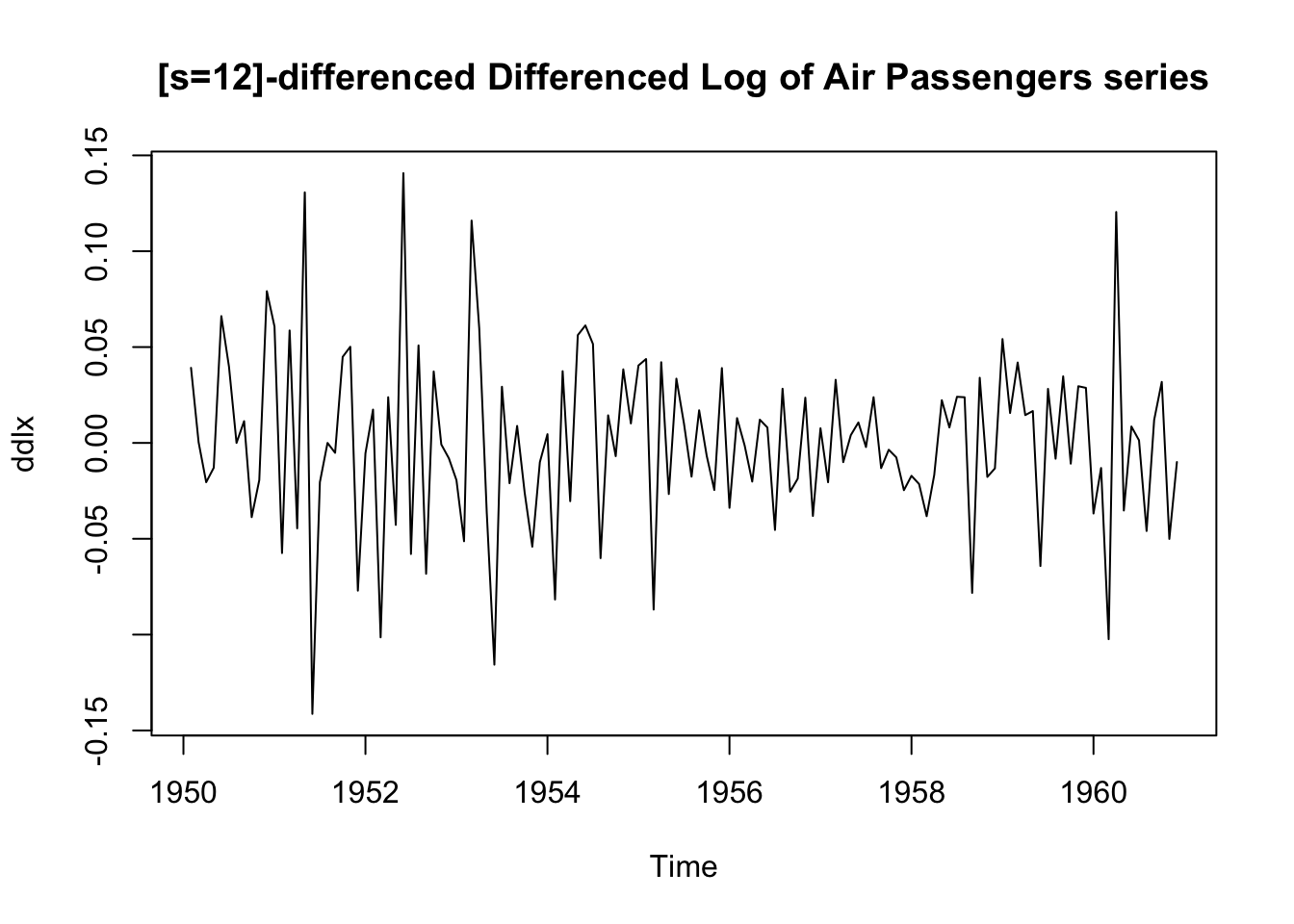

ddlx <- diff(dlx, 12)

plot.ts(ddlx, main = "[s=12]-differenced Differenced Log of Air Passengers series")

This seems to have removed the seasonality:

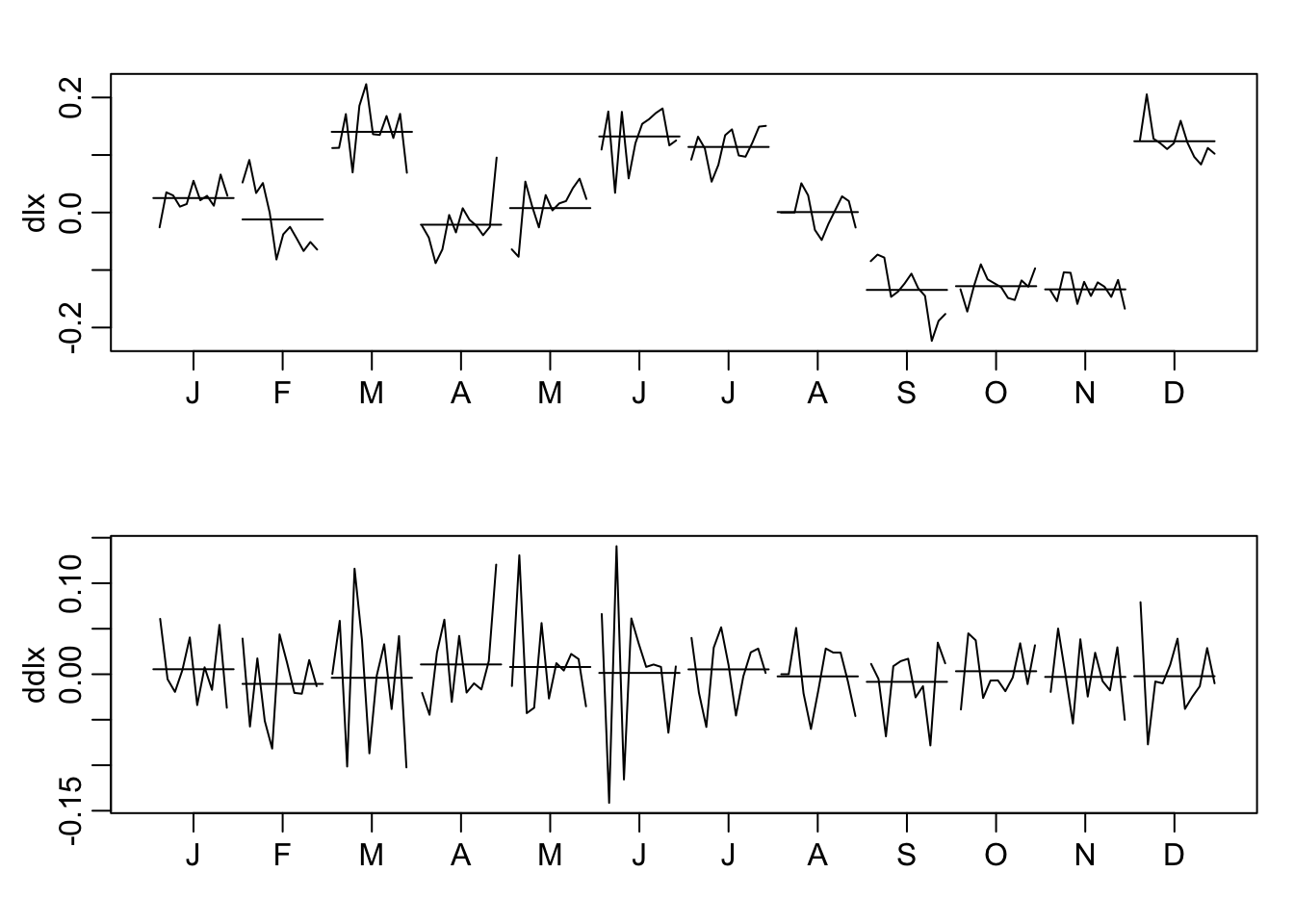

par(mfrow = c(2, 1), mar = c(3, 3, 2, 1), mgp = c(1.6, 0.6, 0))

monthplot(dlx)

monthplot(ddlx)

Note the monthplot function.

To summarise:

plot.ts(cbind(x, lx, dlx, ddlx), main = "")

The transformed data appears to be stationary and

we are now ready to fit a model (see Module 10).

Multivariate time series (TS 5.6) #

Introduction #

- Many data sets involve more than one time series, and we are often interested in the possible dynamics relating all series.

- We are thus interested in modelling and forecasting

- Unfortunately, extending univariate ARMA models to the multivariate case is not so simple.

- The multivariate autoregressive model, however, is a straight-forward extension of the univariate AR model.

- The resulting models are called Vector Auto-Regressive (VAR) models.

- The reference for this section is TS 5.6.

VAR(1) model #

For the first-order vector autoregressive model, VAR(1), we take

Example: Mortality, Temperature, Pollution #

- Define

- We might envision dynamic relations with first order relation

- This can be rewritten in matrix form as

We use the R package vars to fit VAR models via least squares.

library(vars)

x <- cbind(cmort, tempr, part)

summary(VAR(x, p = 1, type = "both")) # 'both' fits constant + trend

...

## VAR Estimation Results:

## =========================

## Endogenous variables: cmort, tempr, part

## Deterministic variables: both

## Sample size: 507

## Log Likelihood: -5116.02

## Roots of the characteristic polynomial:

## 0.8931 0.4953 0.1444

## Call:

## VAR(y = x, p = 1, type = "both")

...

Note that roots less than one here ensure stability; see

this

library(vars)

x <- cbind(cmort, tempr, part)

summary(VAR(x, p = 1, type = "both")) # 'both' fits constant + trend

...

## Estimation results for equation cmort:

## ======================================

## cmort = cmort.l1 + tempr.l1 + part.l1 + const + trend

##

## Estimate Std. Error t value Pr(>|t|)

## cmort.l1 0.464824 0.036729 12.656 < 2e-16 ***

## tempr.l1 -0.360888 0.032188 -11.212 < 2e-16 ***

## part.l1 0.099415 0.019178 5.184 3.16e-07 ***

## const 73.227292 4.834004 15.148 < 2e-16 ***

## trend -0.014459 0.001978 -7.308 1.07e-12 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

##

## Residual standard error: 5.583 on 502 degrees of freedom

## Multiple R-Squared: 0.6908, Adjusted R-squared: 0.6883

## F-statistic: 280.3 on 4 and 502 DF, p-value: < 2.2e-16

...

library(vars)

x <- cbind(cmort, tempr, part)

summary(VAR(x, p = 1, type = "both")) # 'both' fits constant + trend

...

## Estimation results for equation tempr:

## ======================================

## tempr = cmort.l1 + tempr.l1 + part.l1 + const + trend

##

## Estimate Std. Error t value Pr(>|t|)

## cmort.l1 -0.244046 0.042105 -5.796 1.20e-08 ***

## tempr.l1 0.486596 0.036899 13.187 < 2e-16 ***

## part.l1 -0.127661 0.021985 -5.807 1.13e-08 ***

## const 67.585598 5.541550 12.196 < 2e-16 ***

## trend -0.006912 0.002268 -3.048 0.00243 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

##

## Residual standard error: 6.4 on 502 degrees of freedom

## Multiple R-Squared: 0.5007, Adjusted R-squared: 0.4967

## F-statistic: 125.9 on 4 and 502 DF, p-value: < 2.2e-16

...

library(vars)

x <- cbind(cmort, tempr, part)

summary(VAR(x, p = 1, type = "both")) # 'both' fits constant + trend

...

## Estimation results for equation part:

## =====================================

## part = cmort.l1 + tempr.l1 + part.l1 + const + trend

##

## Estimate Std. Error t value Pr(>|t|)

## cmort.l1 -0.124775 0.079013 -1.579 0.115

## tempr.l1 -0.476526 0.069245 -6.882 1.77e-11 ***

## part.l1 0.581308 0.041257 14.090 < 2e-16 ***

## const 67.463501 10.399163 6.487 2.10e-10 ***

## trend -0.004650 0.004256 -1.093 0.275

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

##

## Residual standard error: 12.01 on 502 degrees of freedom

## Multiple R-Squared: 0.3732, Adjusted R-squared: 0.3683

## F-statistic: 74.74 on 4 and 502 DF, p-value: < 2.2e-16

...

library(vars)

x <- cbind(cmort, tempr, part)

summary(VAR(x, p = 1, type = "both")) # 'both' fits constant + trend

...

## Covariance matrix of residuals:

## cmort tempr part

## cmort 31.172 5.975 16.65

## tempr 5.975 40.965 42.32

## part 16.654 42.323 144.26

##

## Correlation matrix of residuals:

## cmort tempr part

## cmort 1.0000 0.1672 0.2484

## tempr 0.1672 1.0000 0.5506

## part 0.2484 0.5506 1.0000

...

- It is easy to extend the VAR(1) process to higher orders (that means with correlations going farther than one step into the past), leading to

- The regressors are

- The regression model is then

- The function

VARselectsuggests the optimal order

Example: VAR(1) on Mortality, Temperature, Pollution #

VARselect(x, lag.max = 10, type = "both")

## $selection

## AIC(n) HQ(n) SC(n) FPE(n)

## 9 5 2 9

##

## $criteria

## 1 2 3 4 5

## AIC(n) 11.73780 11.30185 11.26788 11.23030 11.17634

## HQ(n) 11.78758 11.38149 11.37738 11.36967 11.34557

## SC(n) 11.86463 11.50477 11.54689 11.58541 11.60755

## FPE(n) 125216.91717 80972.28678 78268.19568 75383.73647 71426.10041

## 6 7 8 9 10

## AIC(n) 11.15266 11.15247 11.12878 11.11915 11.12019

## HQ(n) 11.35176 11.38144 11.38760 11.40784 11.43874

## SC(n) 11.65996 11.73587 11.78827 11.85473 11.93187

## FPE(n) 69758.25113 69749.89175 68122.40518 67476.96374 67556.45243

We will proceed with order

summary(fit <- VAR(x, p = 2, type = "both"))

...

## VAR Estimation Results:

## =========================

## Endogenous variables: cmort, tempr, part

## Deterministic variables: both

## Sample size: 506

## Log Likelihood: -4987.186

## Roots of the characteristic polynomial:

## 0.8807 0.8807 0.5466 0.4746 0.4746 0.4498

## Call:

## VAR(y = x, p = 2, type = "both")

...

summary(fit <- VAR(x, p = 2, type = "both"))

...

## Estimation results for equation cmort:

## ======================================

## cmort = cmort.l1 + tempr.l1 + part.l1 + cmort.l2 + tempr.l2 + part.l2 + const + trend

##

## Estimate Std. Error t value Pr(>|t|)

## cmort.l1 0.297059 0.043734 6.792 3.15e-11 ***

## tempr.l1 -0.199510 0.044274 -4.506 8.23e-06 ***

## part.l1 0.042523 0.024034 1.769 0.07745 .

## cmort.l2 0.276194 0.041938 6.586 1.15e-10 ***

## tempr.l2 -0.079337 0.044679 -1.776 0.07639 .

## part.l2 0.068082 0.025286 2.692 0.00733 **

## const 56.098652 5.916618 9.482 < 2e-16 ***

## trend -0.011042 0.001992 -5.543 4.84e-08 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

##

## Residual standard error: 5.295 on 498 degrees of freedom

## Multiple R-Squared: 0.7227, Adjusted R-squared: 0.7188

## F-statistic: 185.4 on 7 and 498 DF, p-value: < 2.2e-16

...

summary(fit <- VAR(x, p = 2, type = "both"))

...

## Estimation results for equation tempr:

## ======================================

## tempr = cmort.l1 + tempr.l1 + part.l1 + cmort.l2 + tempr.l2 + part.l2 + const + trend

##

## Estimate Std. Error t value Pr(>|t|)

## cmort.l1 -0.108889 0.050667 -2.149 0.03211 *

## tempr.l1 0.260963 0.051292 5.088 5.14e-07 ***

## part.l1 -0.050542 0.027844 -1.815 0.07010 .

## cmort.l2 -0.040870 0.048587 -0.841 0.40065

## tempr.l2 0.355592 0.051762 6.870 1.93e-11 ***

## part.l2 -0.095114 0.029295 -3.247 0.00125 **

## const 49.880485 6.854540 7.277 1.34e-12 ***

## trend -0.004754 0.002308 -2.060 0.03993 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

##

## Residual standard error: 6.134 on 498 degrees of freedom

## Multiple R-Squared: 0.5445, Adjusted R-squared: 0.5381

## F-statistic: 85.04 on 7 and 498 DF, p-value: < 2.2e-16

...

summary(fit <- VAR(x, p = 2, type = "both"))

...

## Estimation results for equation part:

## =====================================

## part = cmort.l1 + tempr.l1 + part.l1 + cmort.l2 + tempr.l2 + part.l2 + const + trend

##

## Estimate Std. Error t value Pr(>|t|)

## cmort.l1 0.078934 0.091773 0.860 0.390153

## tempr.l1 -0.388808 0.092906 -4.185 3.37e-05 ***

## part.l1 0.388814 0.050433 7.709 6.92e-14 ***

## cmort.l2 -0.325112 0.088005 -3.694 0.000245 ***

## tempr.l2 0.052780 0.093756 0.563 0.573724

## part.l2 0.382193 0.053062 7.203 2.19e-12 ***

## const 59.586169 12.415669 4.799 2.11e-06 ***

## trend -0.007582 0.004180 -1.814 0.070328 .

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

##

## Residual standard error: 11.11 on 498 degrees of freedom

## Multiple R-Squared: 0.4679, Adjusted R-squared: 0.4604

## F-statistic: 62.57 on 7 and 498 DF, p-value: < 2.2e-16

...

summary(fit <- VAR(x, p = 2, type = "both"))

...

## Covariance matrix of residuals:

## cmort tempr part

## cmort 28.034 7.076 16.33

## tempr 7.076 37.627 40.88

## part 16.325 40.880 123.45

##

## Correlation matrix of residuals:

## cmort tempr part

## cmort 1.0000 0.2179 0.2775

## tempr 0.2179 1.0000 0.5998

## part 0.2775 0.5998 1.0000

...

Furthermore, the Portmanteau test rejects the null hypothesis of white noise, suggesting poor fit:

serial.test(fit, lags.pt = 12, type = "PT.adjusted")

##

## Portmanteau Test (adjusted)

##

## data: Residuals of VAR object fit

## Chi-squared = 162.35, df = 90, p-value = 4.602e-06

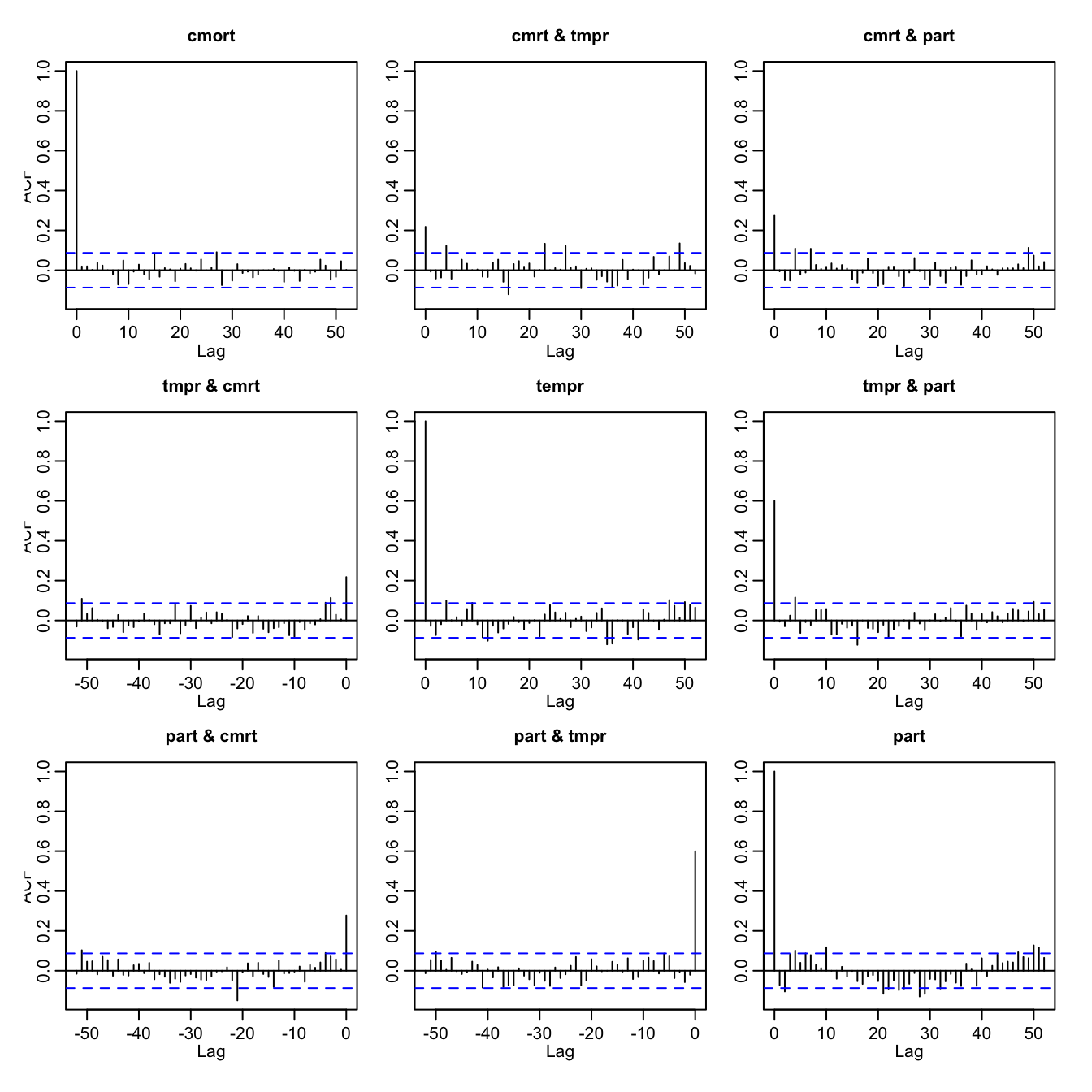

This is confirmed by visual inspection:

acf(resid(fit), 52)

acf(resid(fit), 52)

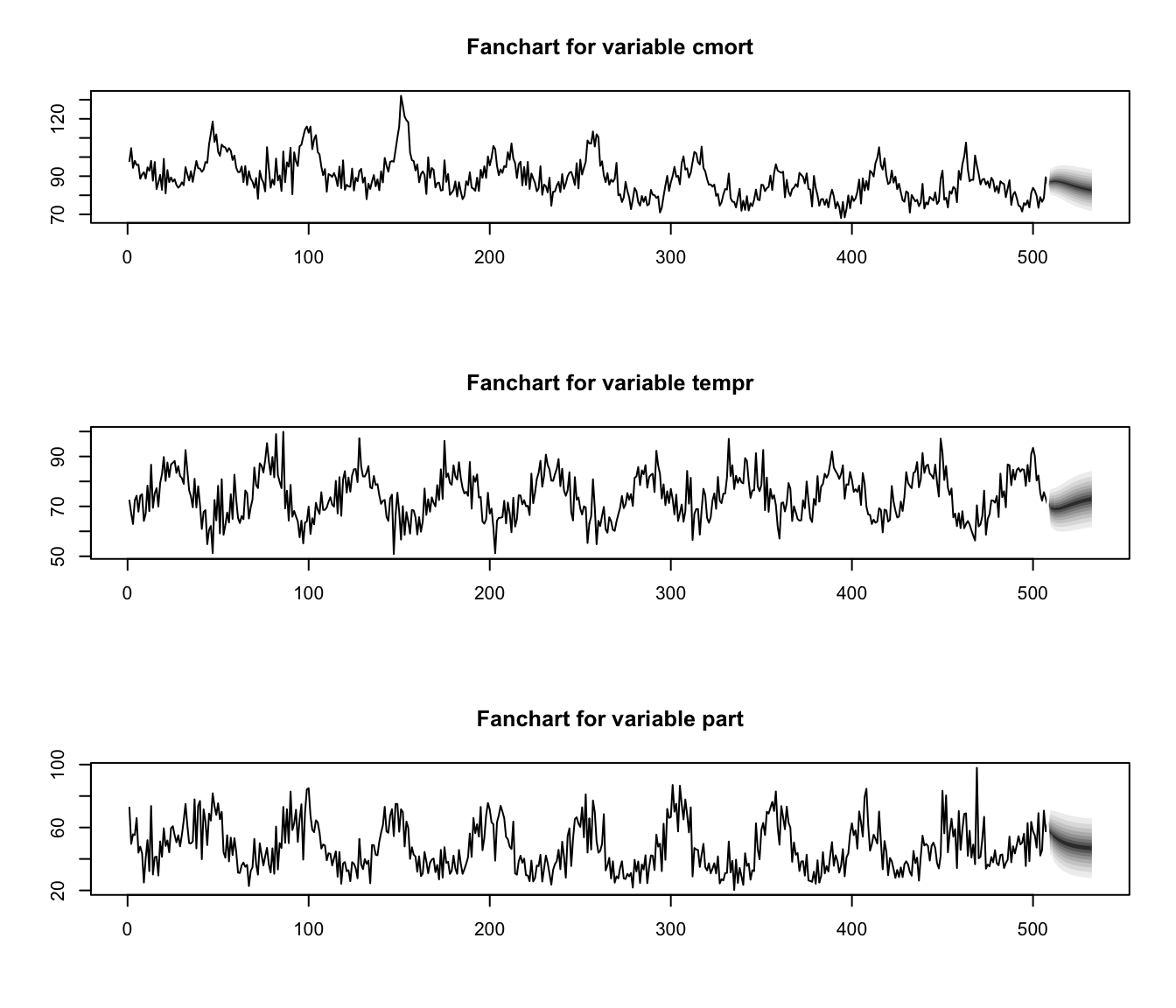

Predictions are produced with

fit.pr <- predict(fit, n.ahead = 24, ci = 0.95) # 24 weeks ahead

fanchart(fit.pr) # plot prediction + error

Special cases briefly mentioned in CS2 syllabus (S6) #

Bilinear models #

- Consider the bilinear model

- This equation is linear in

- This can be rewritten as

- As opposed to ARMA models, the bilinear models exhibit behaviour: when the process is far from its mean it tends to exhibit larger fluctuations.

- When the process is “at” its mean, dynamics are similar

to that of an

Threshold autoregressive models #

- Consider the threshold autogressive model

- A distinctive feature of some models from the threshold autoregressive class is limit cycle behaviour. This makes the threshold autoregressive models potential candidates for modelling ‘cyclic’ phenomena.

- The idea is to have parameters to be allowed to change in a “regime-switching” fashion, sometimes depending on the past values of

- Some additional details can be found, for instance, on Wikipedia

here(not assessable).

Random coefficient autoregressive models #

- Here

- Such a model could be used to represent the behaviour of an investment fund, with

- The extra randomness make such processes more irregular than the corresponding

Autoregressive models with conditional heteroscedasticity #

- A feature that is frequently observed in asset price data is that a significant change in the price of an asset is often followed by a period of high volatility.

- The class of autoregressive models with conditional heteroscedasticity of order

- Because of the scaling of

- If

- ARCH models have been used for modelling financial time series. If

- Note:

- Setting all

- So what we are modelling here is purely the variance of the process

- If we extend the idea of conditional heteroskedasticity to an ARMA structure (rather than just AR), we get the so-called “GARCH” models.

- Setting all

References #

Shumway, Robert H., and David S. Stoffer. 2017. Time Series Analysis and Its Applications: With r Examples. Springer.