Estimation #

Summary: analysing the ACF and PACF #

Behaviour of the ACF and PACF for ARMA Models #

| ACF | Tails off | Cuts off after lag |

Tails off |

| PACF | Cuts off after lag |

Tails off | Tails off |

- The PACF for MA models behaves much like the ACF for AR models.

- The PACF for AR models behaves much like the ACF for MA models.

- Because an invertible ARMA model has an infinite AR representation, its PACF will not cut off.

- Remember that the data might have to be detrended and/or transformed first (e.g., to stabilise the variance, apply a log transform), before such analysis is performed.

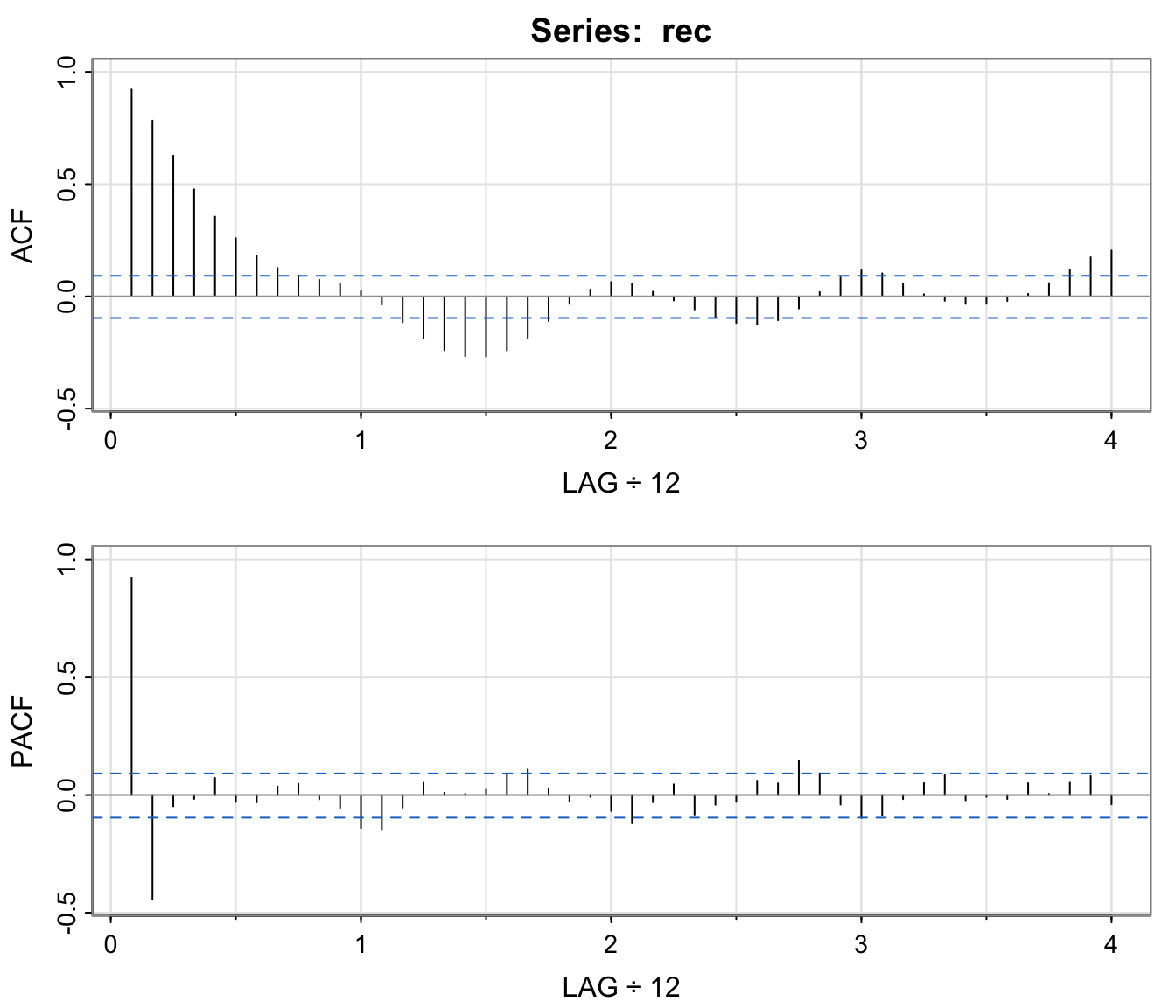

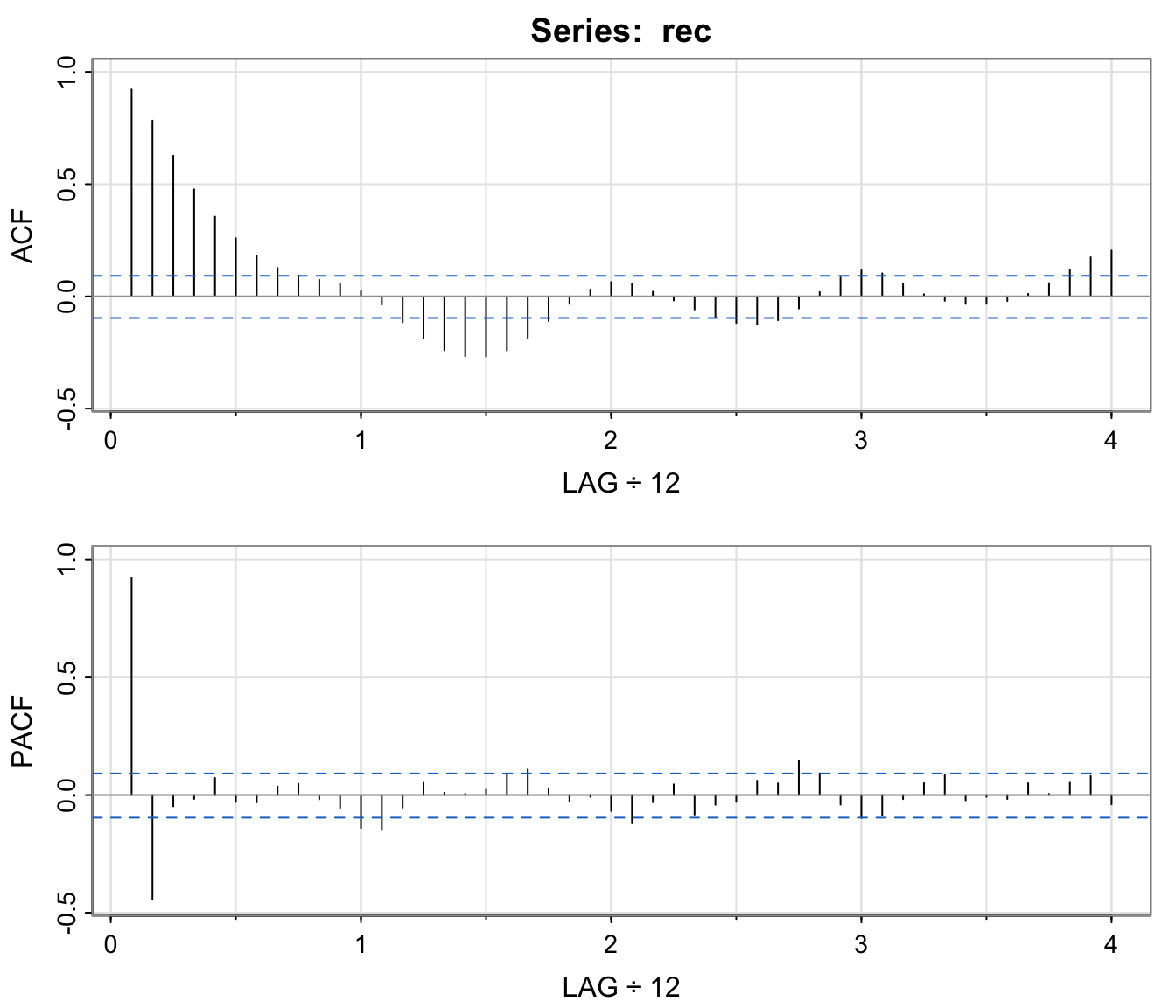

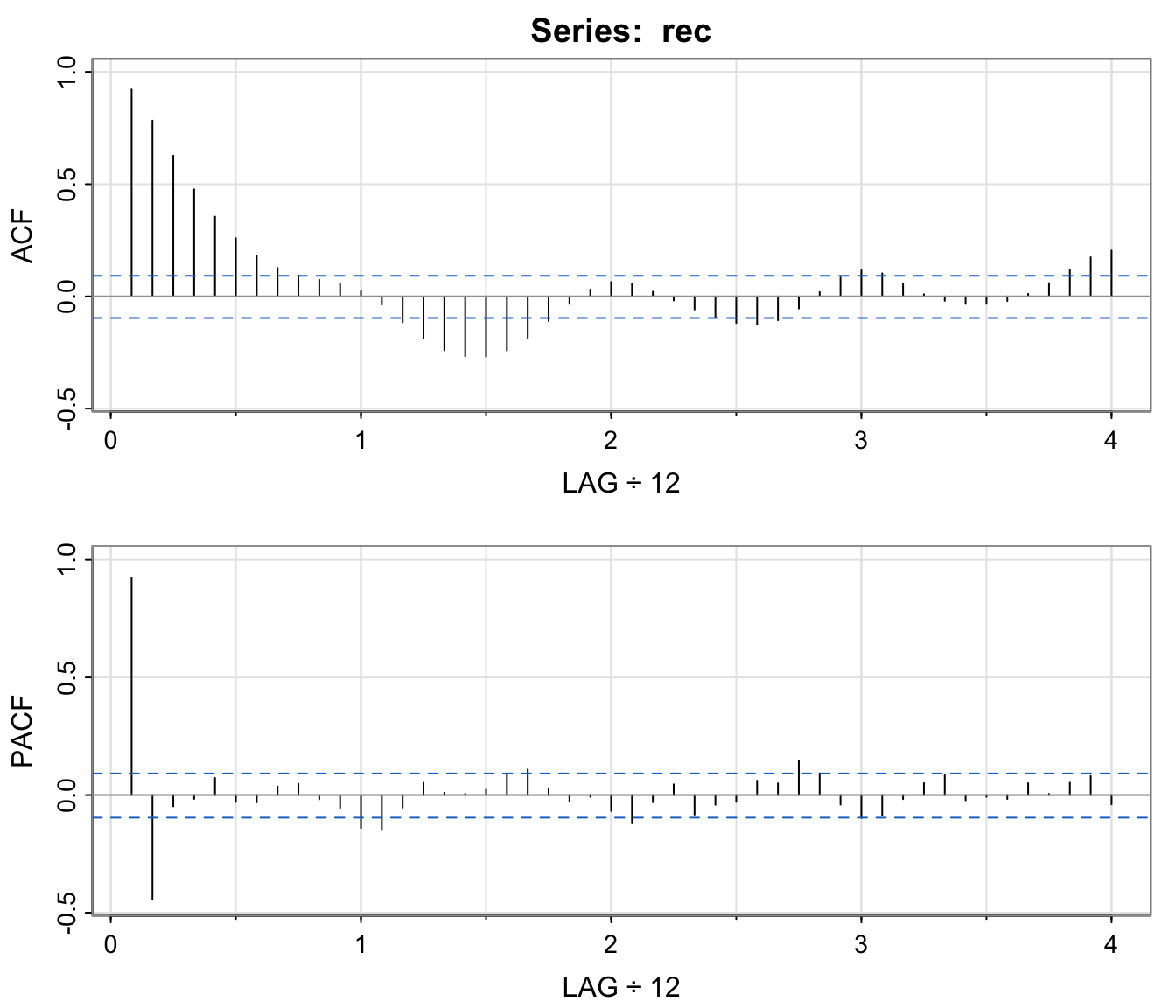

Example: Recruitment Series #

acf2(rec, 48) # will produce values and a graph

## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10] [,11]

## ACF 0.92 0.78 0.63 0.48 0.36 0.26 0.18 0.13 0.09 0.07 0.06

## PACF 0.92 -0.44 -0.05 -0.02 0.07 -0.03 -0.03 0.04 0.05 -0.02 -0.05

## [,12] [,13] [,14] [,15] [,16] [,17] [,18] [,19] [,20] [,21]

## ACF 0.02 -0.04 -0.12 -0.19 -0.24 -0.27 -0.27 -0.24 -0.19 -0.11

## PACF -0.14 -0.15 -0.05 0.05 0.01 0.01 0.02 0.09 0.11 0.03

## [,22] [,23] [,24] [,25] [,26] [,27] [,28] [,29] [,30] [,31]

## ACF -0.03 0.03 0.06 0.06 0.02 -0.02 -0.06 -0.09 -0.12 -0.13

## PACF -0.03 -0.01 -0.07 -0.12 -0.03 0.05 -0.08 -0.04 -0.03 0.06

## [,32] [,33] [,34] [,35] [,36] [,37] [,38] [,39] [,40] [,41]

## ACF -0.11 -0.05 0.02 0.08 0.12 0.10 0.06 0.01 -0.02 -0.03

## PACF 0.05 0.15 0.09 -0.04 -0.10 -0.09 -0.02 0.05 0.08 -0.02

## [,42] [,43] [,44] [,45] [,46] [,47] [,48]

## ACF -0.03 -0.02 0.01 0.06 0.12 0.17 0.20

## PACF -0.01 -0.02 0.05 0.01 0.05 0.08 -0.04

- The behaviour of the PACF is consistent with the behaviour of an AR(2): the ACF tails off, the PACF cuts after lag 2.

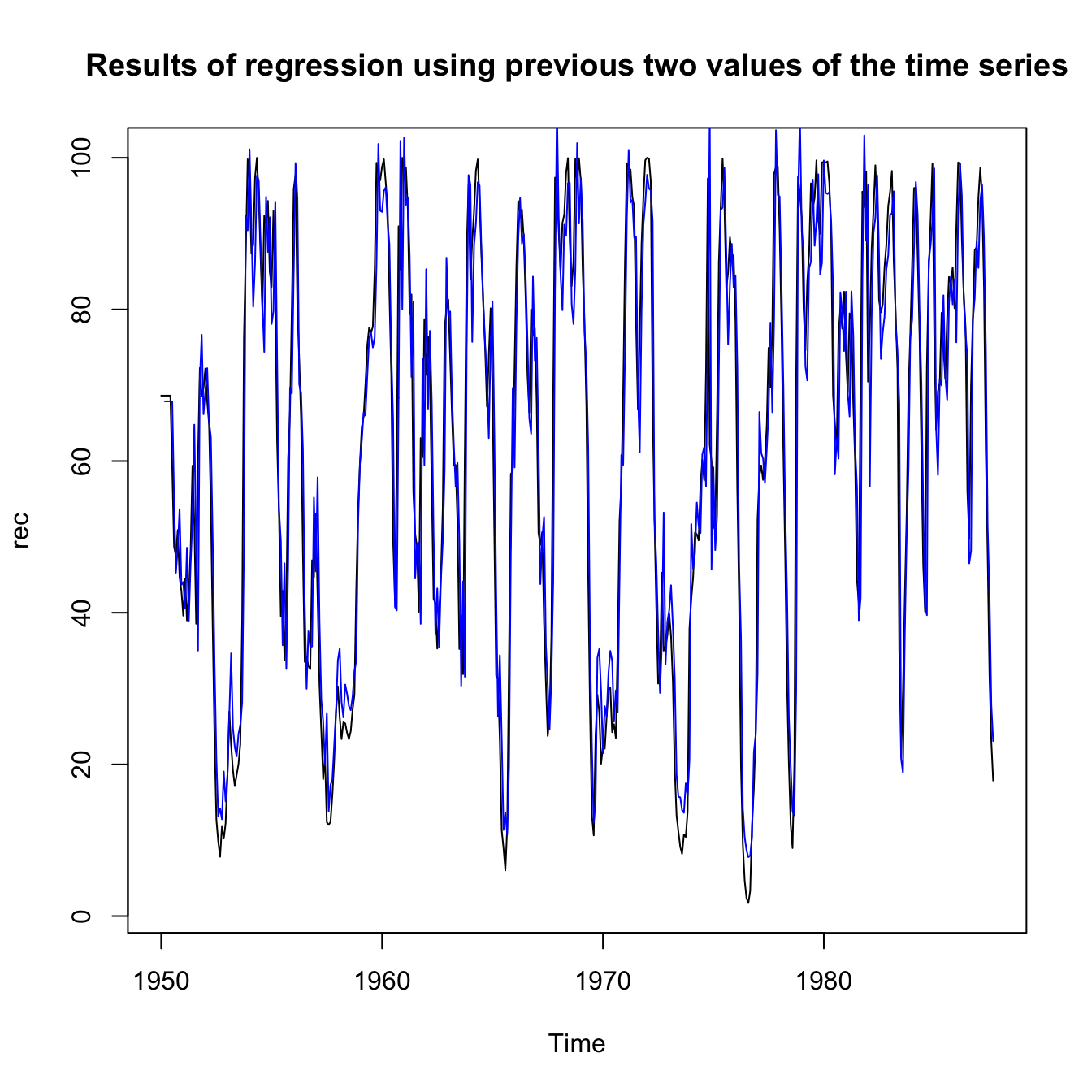

- The following slide shows the fit of a regression using the data triplets

- Let us fit this to an AR process using

ar.ols, which, according to R, willFit an autoregressive time series model to the data by ordinary least squares, by default selecting the complexity by AIC. - Note that the “optimal” order will be chose up to a maximum defined by

orderin the function.

regr <- ar.ols(rec, order = 10, demean = FALSE, intercept = TRUE)

regr

##

## Call:

## ar.ols(x = rec, order.max = 10, demean = FALSE, intercept = TRUE)

##

## Coefficients:

## 1 2

## 1.3541 -0.4632

##

## Intercept: 6.737 (1.111)

##

## Order selected 2 sigma^2 estimated as 89.72

regr$asy.se.coef # standard errors of the estimates

## $x.mean

## [1] 1.110599

##

## $ar

## [1] 0.04178901 0.04187942

ts.plot(rec, main = "Results of regression using previous two values of the time series")

lines(time(rec)[-c(1, 2)], regr$x.intercept + regr$ar[1] * rec[-c(1,

length(rec))] + regr$ar[2] * rec[-c(length(rec) - 1, length(rec))],

col = "blue", lwd = 1)

ACF and PACF in presence of seasonality #

| ACF* | Tails off at lags |

Cuts off after | Tails off at |

| lag |

lags |

||

| PACF* | Cuts off after | Tails off at lags |

Tails off at |

| lag |

lags |

*The values at nonseasonal lags

- This can be seen as a generalisation of the previous table

(which is the special case

- Fitting seasonal autoregressive and moving average components first generally leads to more satisfactory results

The Box-Jenkins methodology #

Overview - The Box-Jenkins methodology #

- Determine the integration order

- Then choose candidates for

- For fixed

- Method of moments

- Maximum likelihood (Note MLE is more involved than the Method of moments, but way more efficient when

- Least squares and variations

- Perform diagnostics, to choose the best

- Use the chosen model for forecasting.

This general approach to fitting is called

the Box-Jenkins methodology.

Choosing

- A time series

- Let

Several candidates could be selected, a final choice of which could be made after full estimation of all candidates and based on diagnostics.

One should be careful to not over-difference, as this introduces artificial dependence in the data.

Example: Recruitment series #

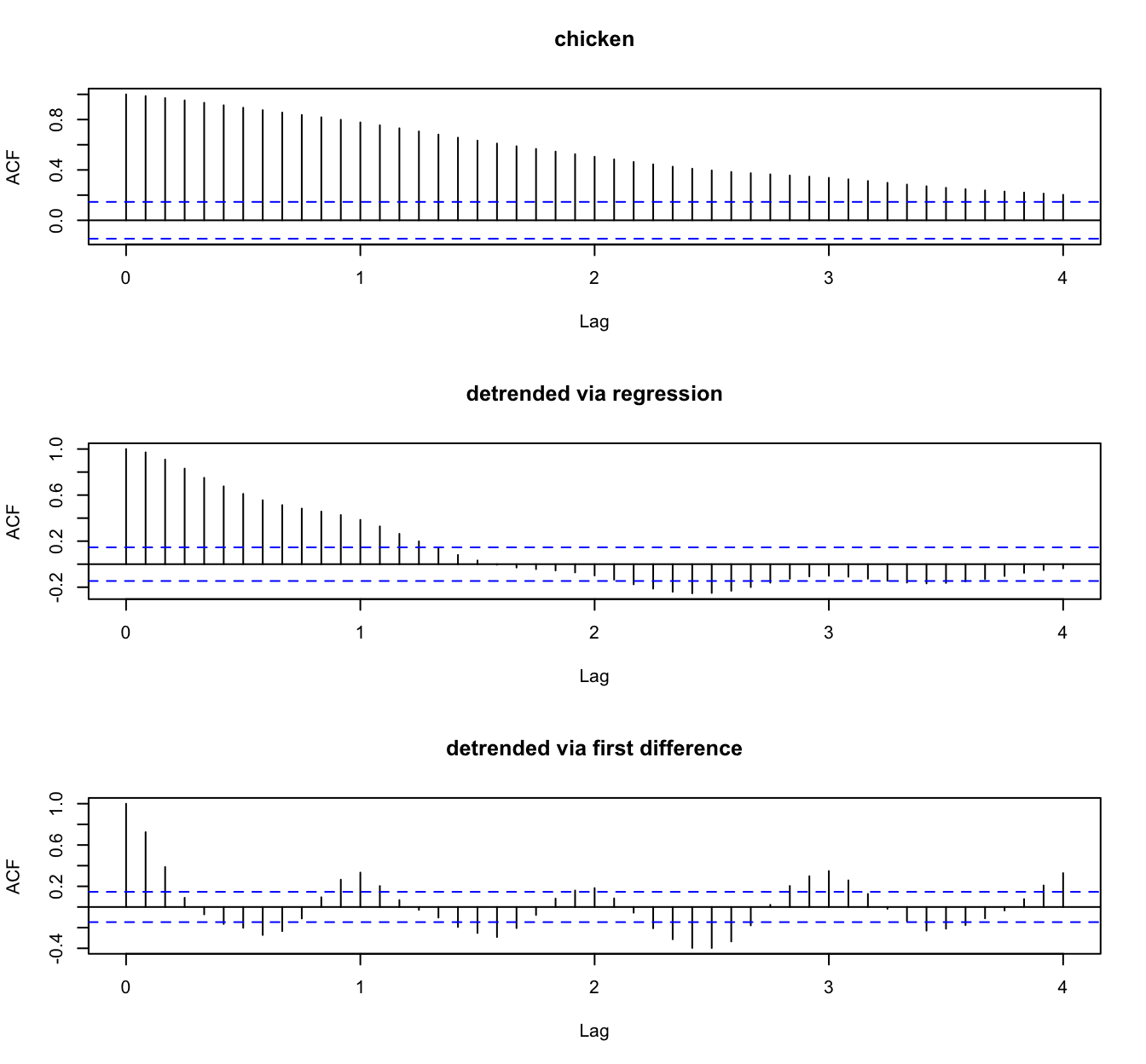

Example: Chicken prices #

Choosing

- We work on the differenced series, which is then assumed to be

- We assume the mean of the differenced series is 0. If it isn’t the case in the data, subtract its sample mean, and work on the residuals.

- Examine ACF and PACF to choose candidates for

- Alternatively or additionally, work iteratively from the simplest models and increase orders

- Higher orders will always reduce the sum of squares (more parameters). At the extreme a model with

- The appropriate order could be chosen using information criteria (e.g., BIC or AIC).

- Higher orders will always reduce the sum of squares (more parameters). At the extreme a model with

Example: Recruitment series #

Estimation of the parameters #

- We now need estimates for

- Use R to fit the model with the function

fit <- arima(x,order=c(p,0,q))wherexis the differenced time series, and wherepandqneed to replaced by their chosen numerical values. The default estimation procedure (unless there are missing values) is to use conditional sum-of-squares to find starting values, then maximum likelihood. - This will output parameter estimates, their standard errors, as well as

- (One could alternatively use a method of moments approach (see later), but this is much less efficient than MLE if

Example: Recruitment series #

MLE estimators are implemented in R as follows:

rec.mle <- ar.mle(rec, order = 2)

rec.mle$x.mean

## [1] 62.26153

rec.mle$ar

## [1] 1.3512809 -0.4612736

sqrt(diag(rec.mle$asy.var.coef))

## [1] 0.04099159 0.04099159

rec.mle$var.pred

## [1] 89.33597

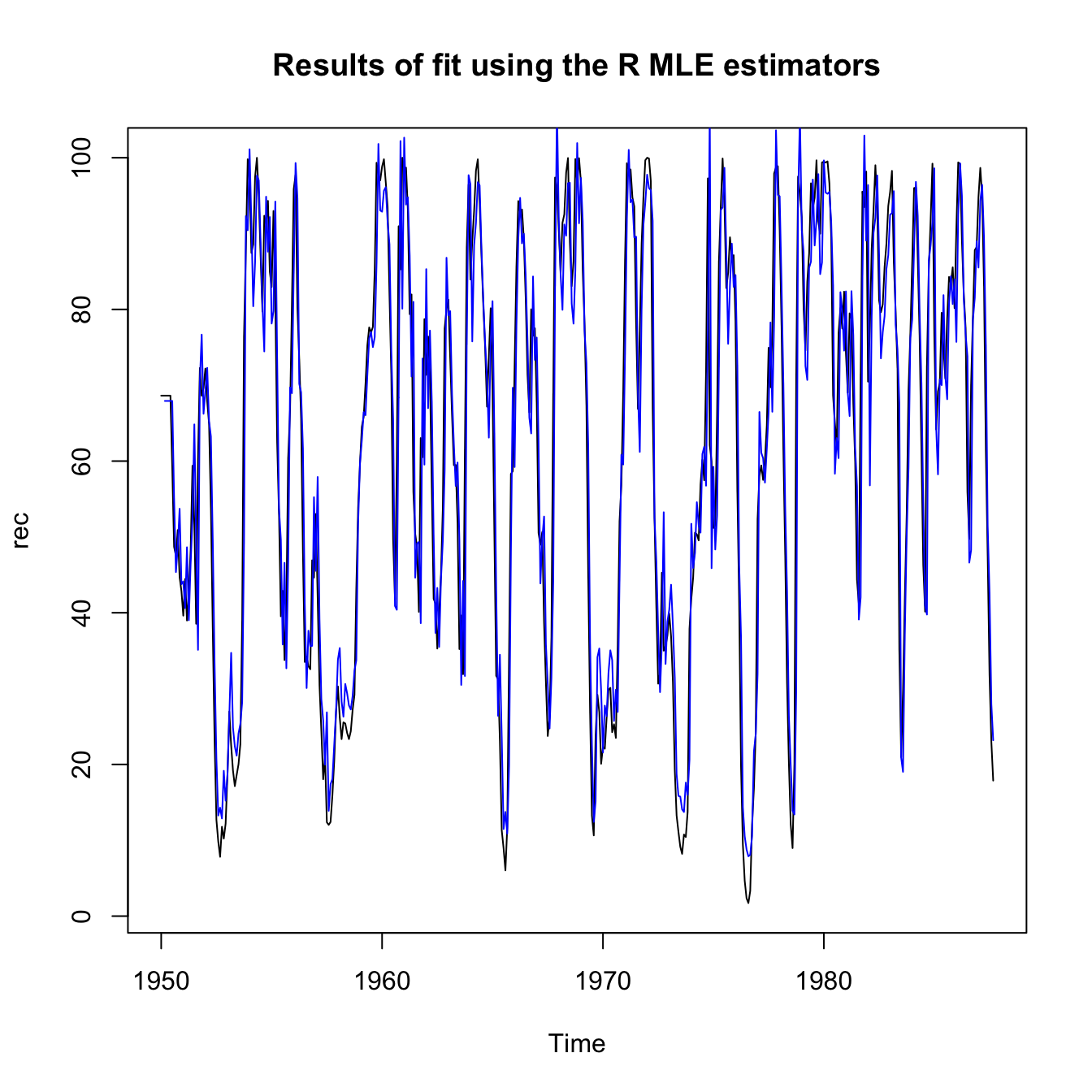

and then the fit is displayed as follows:

ts.plot(rec, main = "Results of fit using the R MLE estimators")

lines(rec[1:length(rec)] - rec.mle$resid, col = "blue", lwd = 1)

The best R estimators are implemented in R as follows:

rec.arima0 <- arima(rec, order = c(2, 0, 0)) # to use with tsdiag

rec.arima <- sarima(rec, 2, 0, 0)

rec.arima0$coef[3] # 61.86

## intercept

## 61.85847

rec.arima0$coef[1:2] # 1.35, -.46

## ar1 ar2

## 1.3512196 -0.4612128

sqrt(diag(rec.arima0$var.coef)) # .04, .04

## ar1 ar2 intercept

## 0.04158461 0.04166812 4.00393378

rec.arima0$sigma2 # 89.33

## [1] 89.33436

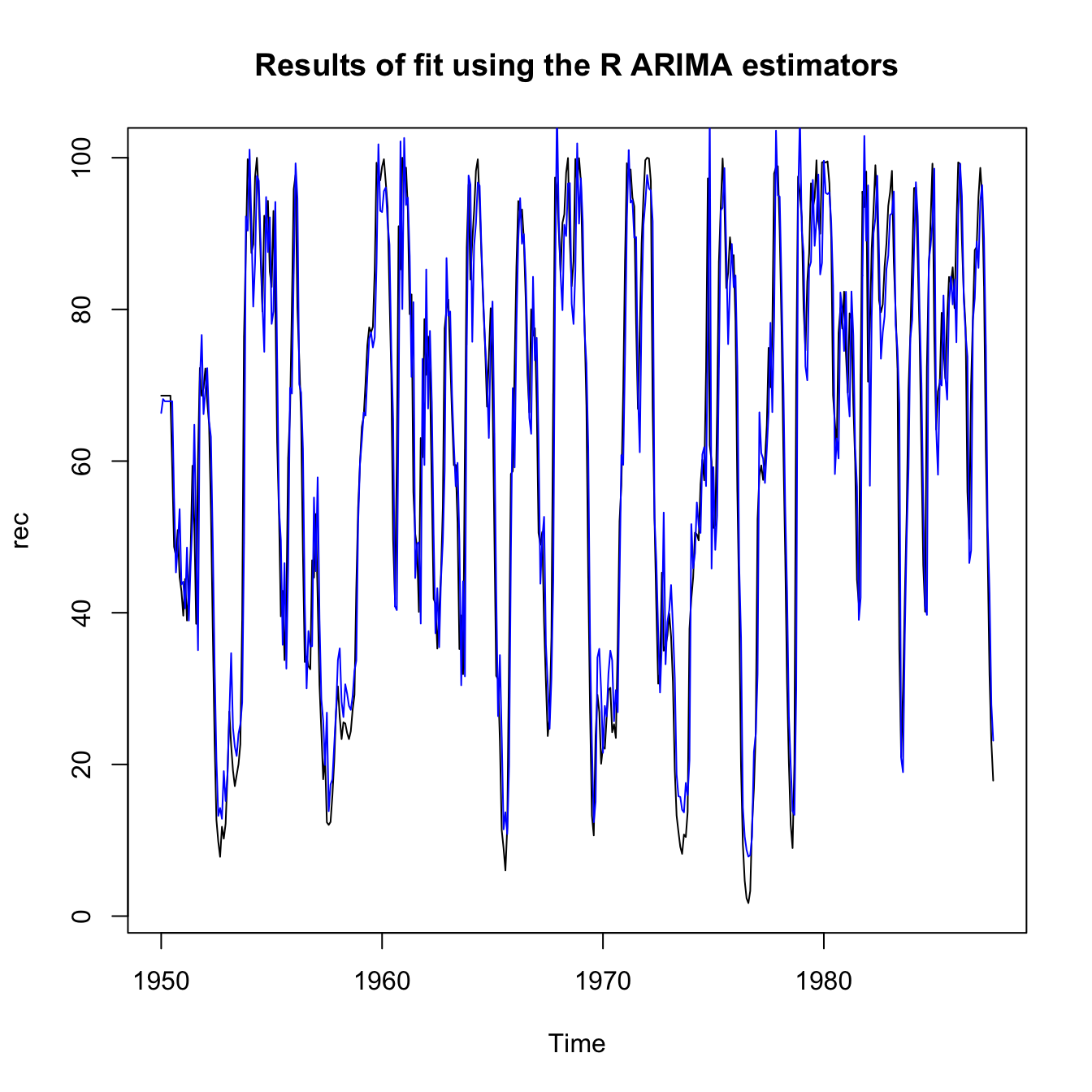

and then the fit is displayed as follows:

ts.plot(rec, main = "Results of fit using the R ARIMA estimators")

lines(rec[1:length(rec)] - rec.arima$fit$residuals, col = "blue",

lwd = 1)

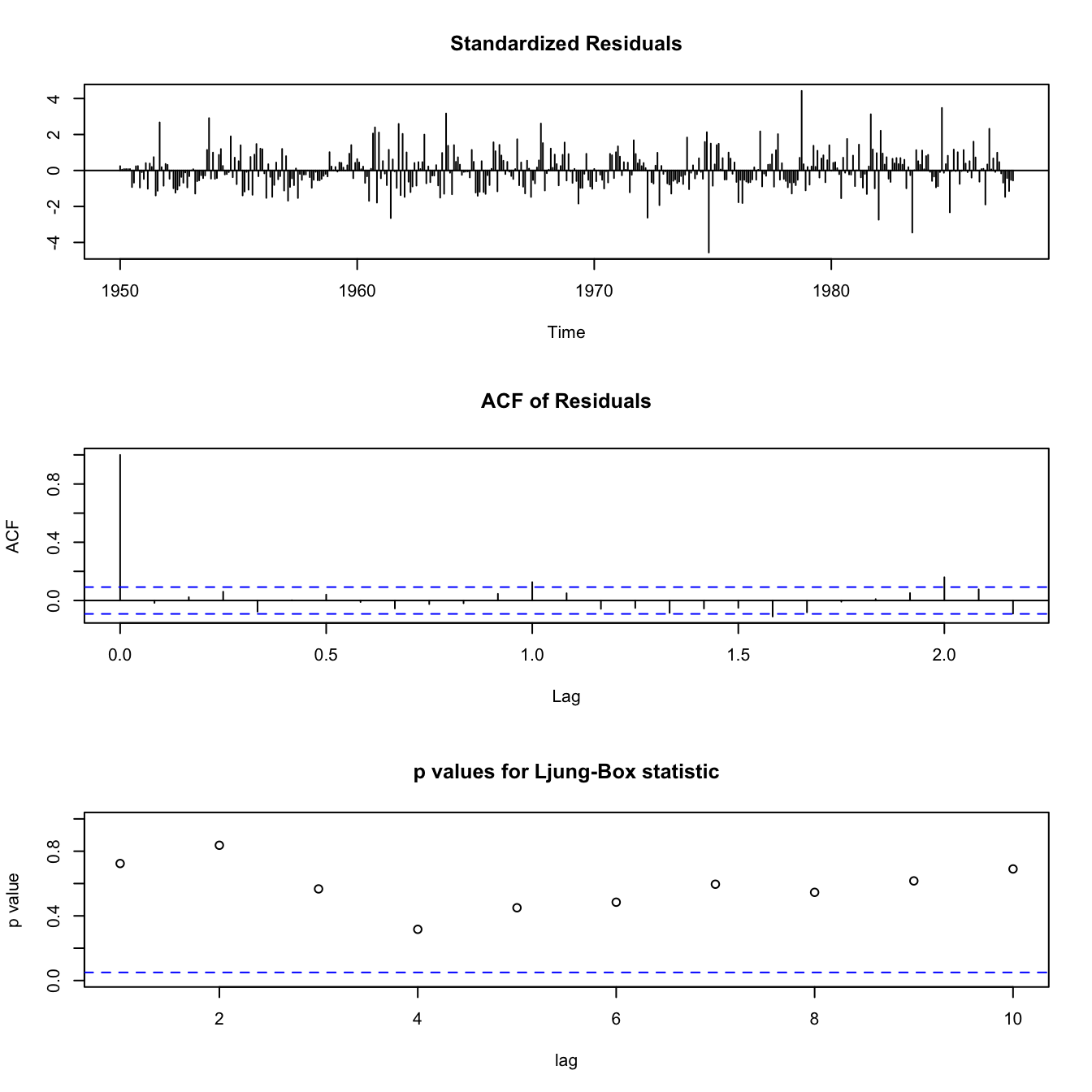

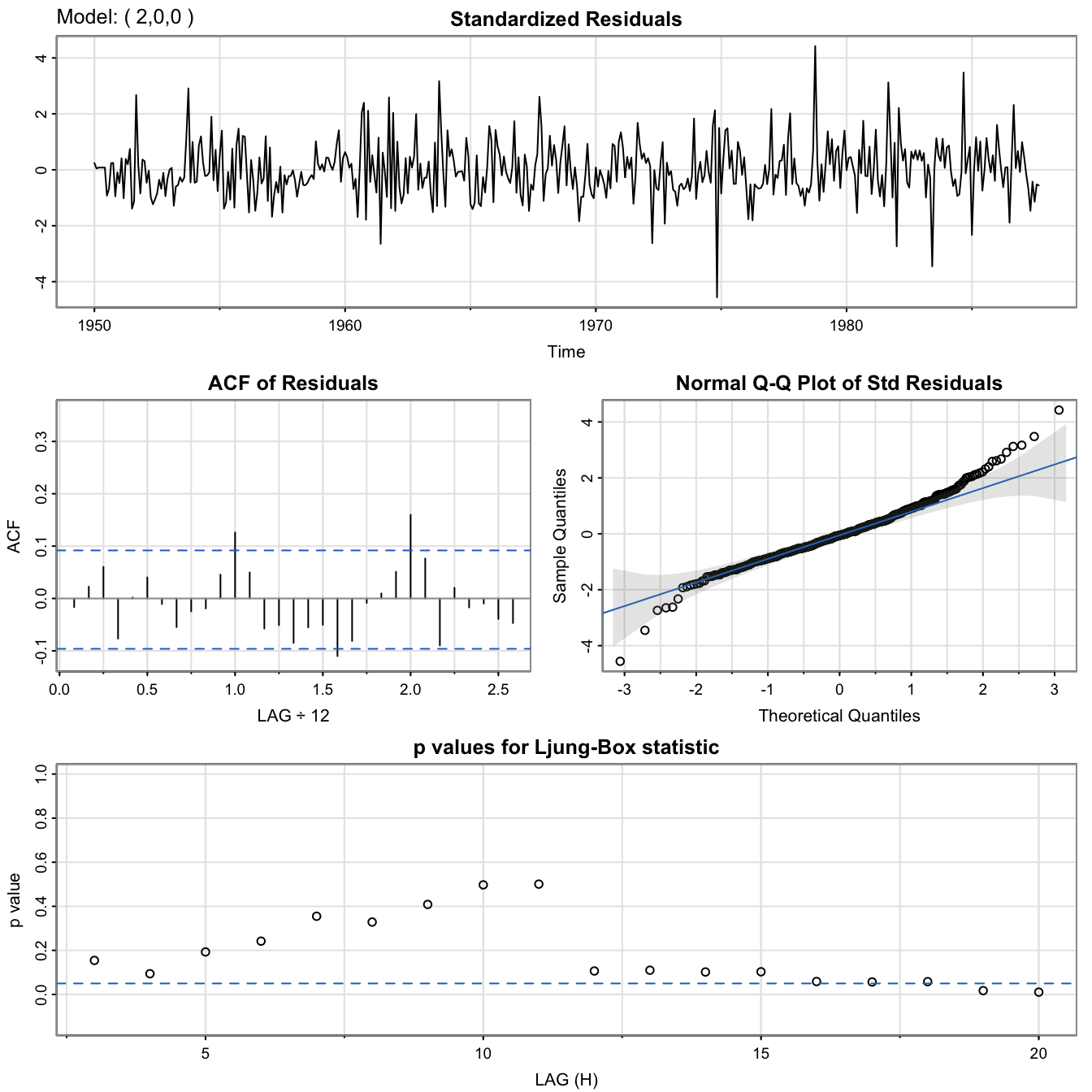

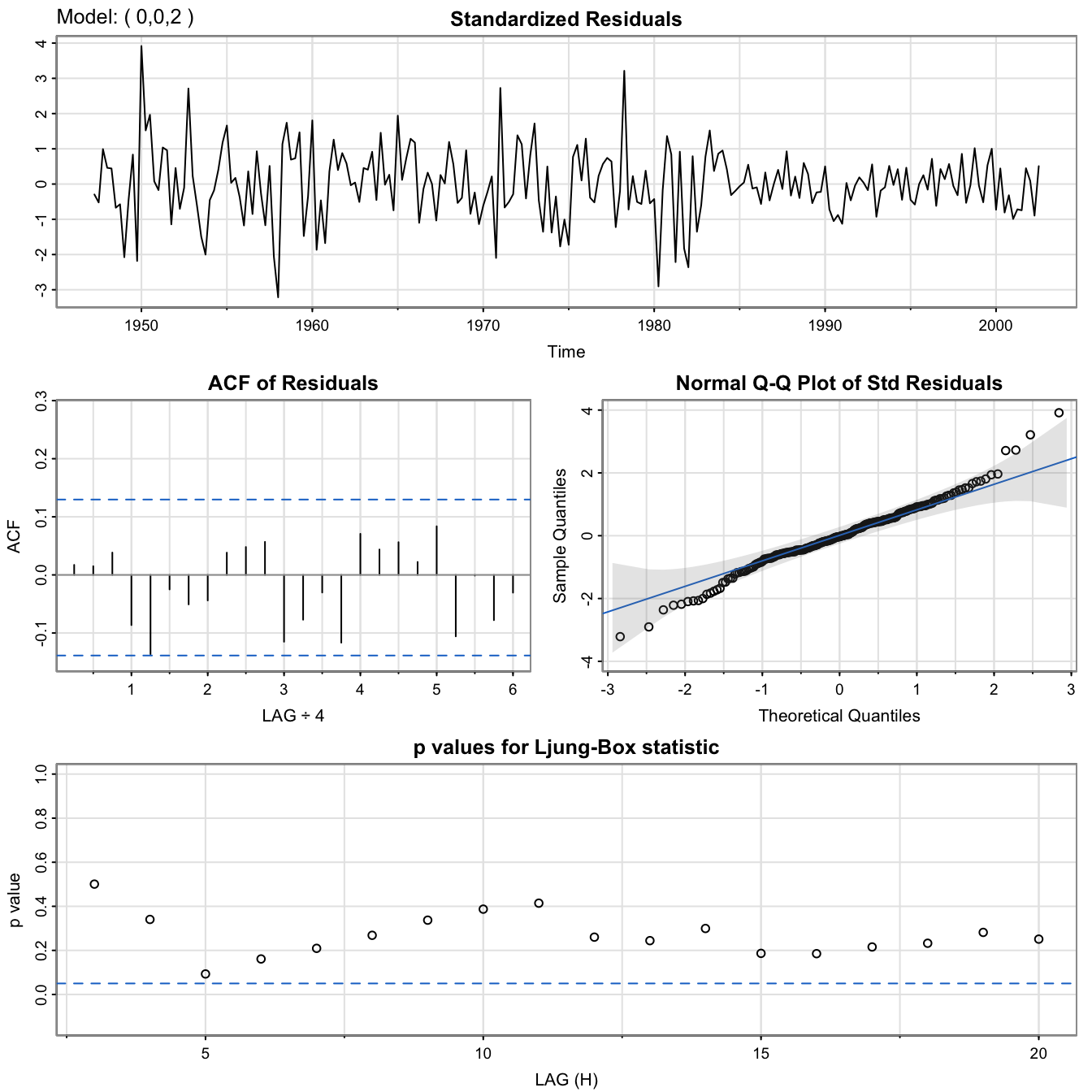

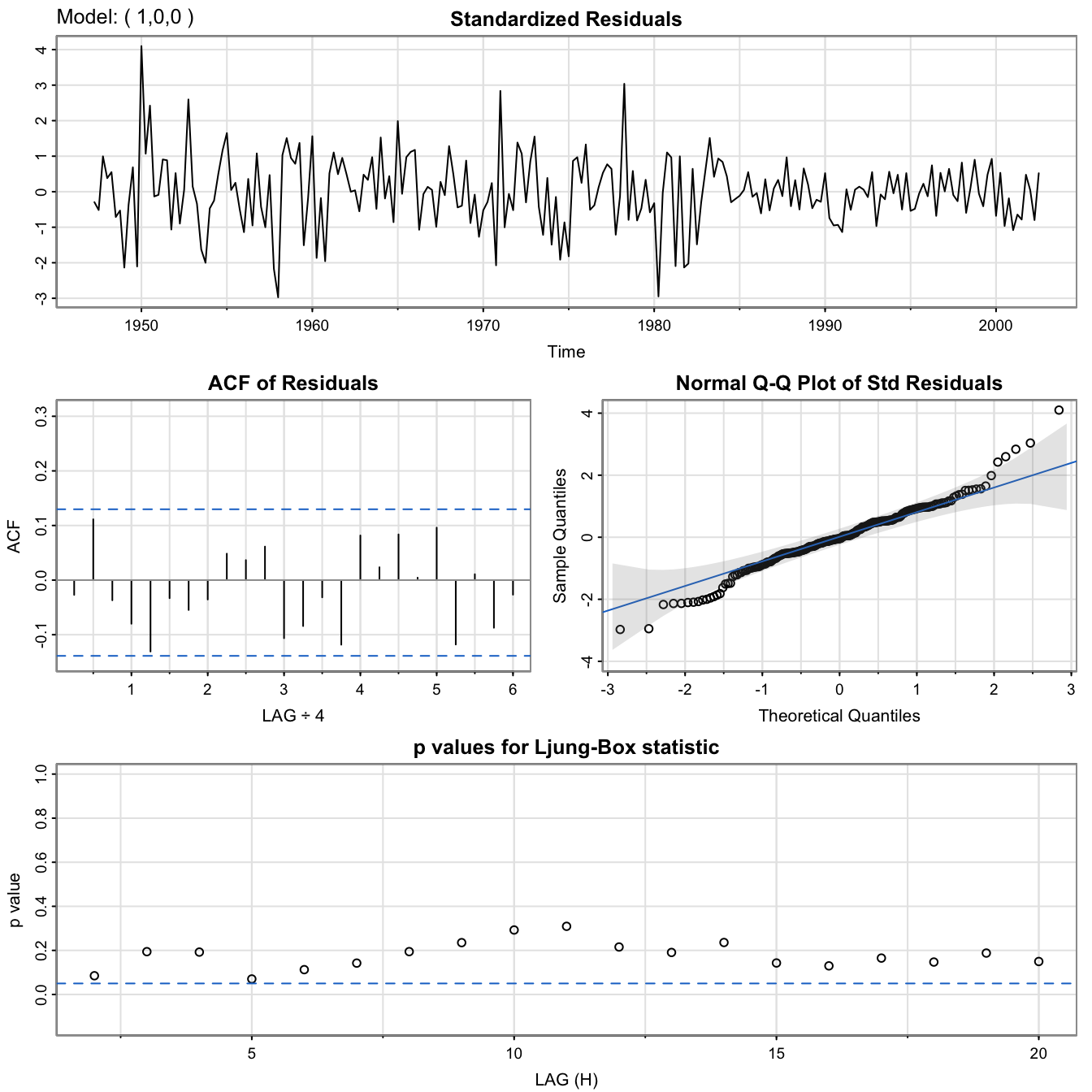

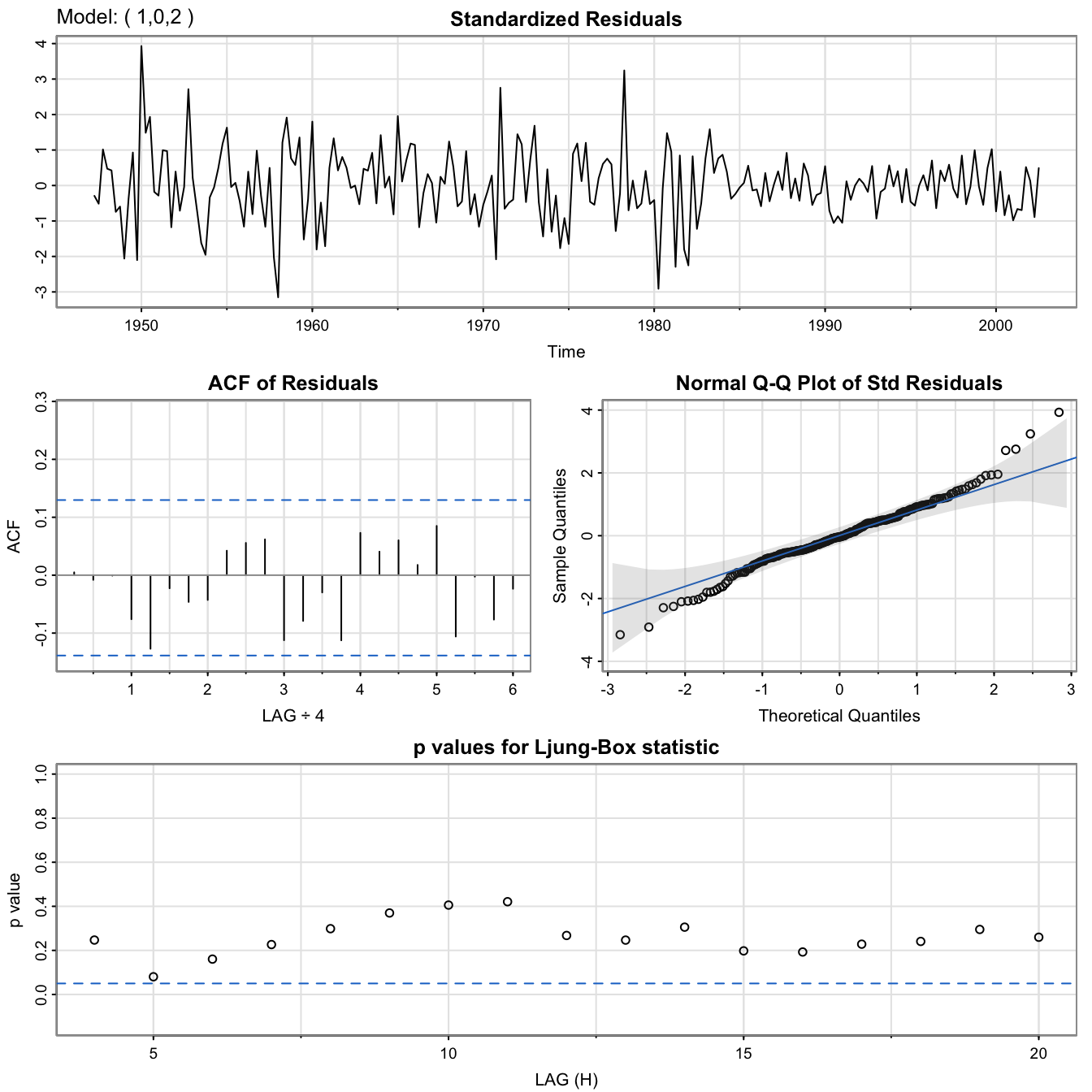

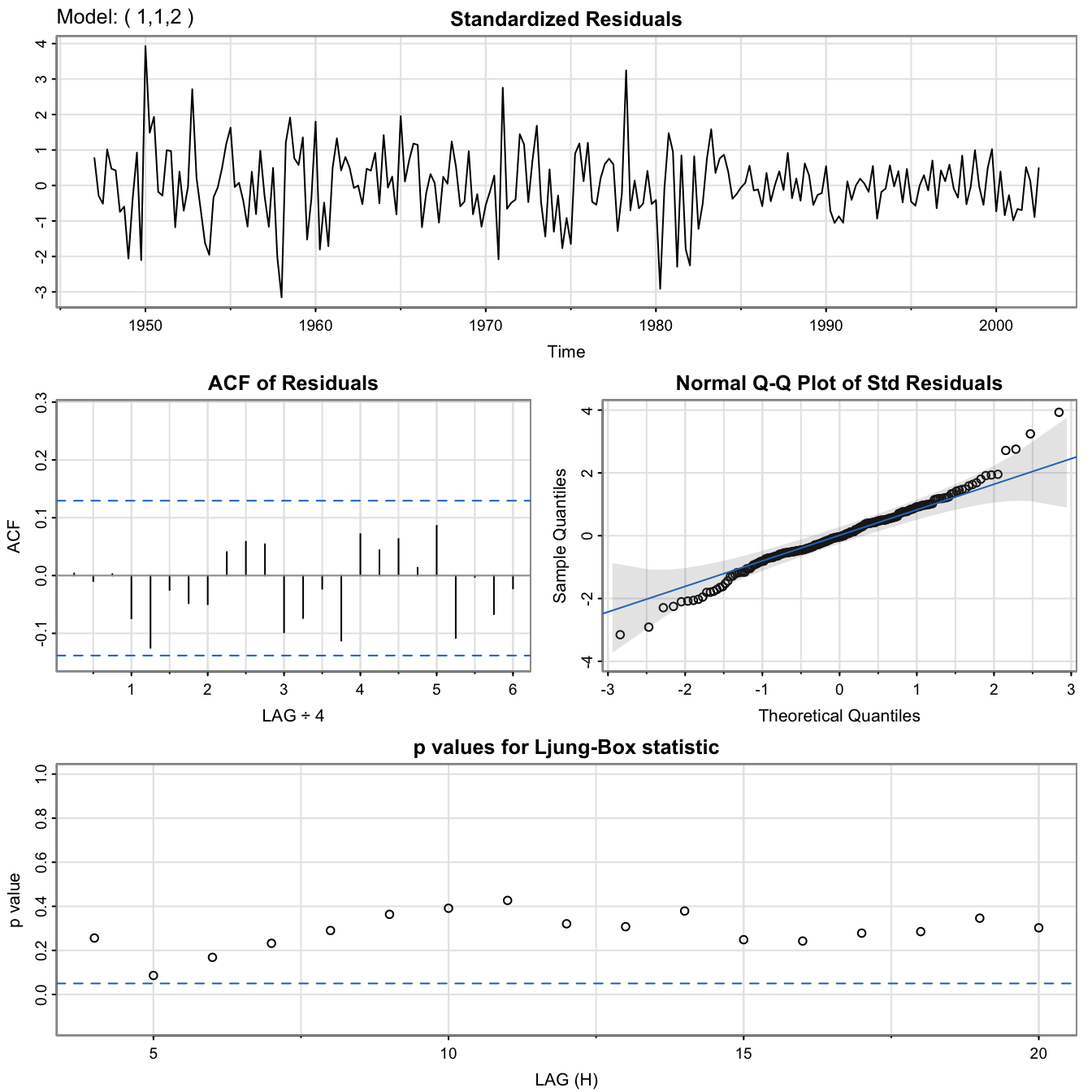

Diagnostics #

Guiding principle: if we have a good fit, the residuals should be (uncorrelated) white noise. This can be done in three ways:

- Residuals: If visual inspection of the residuals lets appear any pattern (trend or magnitude) then it can’t be white noise

- ACF and PACF: these should be within their confidence intervals with appropriate frequency (95%)

- More formally, one can test for white noise with the Ljung-Box portmanteau test. This test considers the dimension of the models (the number of parameters), and tests whether correlations are 0 at all lags, and displays

In Base R , those three diagnostics are output when running the R function tsdiag(fit) where fit is where we stored our estimation from the function arima (but this function has errors!).

Better still, use the fitting function sarima(rec,2,0,0) of astsa.

tsdiag(rec.arima0)

[note no output in the console]

RecSARIMAdiag <- sarima(rec, 2, 0, 0)

## initial value 3.332380

## iter 2 value 3.251366

## iter 3 value 2.564654

## iter 4 value 2.430141

## iter 5 value 2.258212

## iter 6 value 2.253343

## iter 7 value 2.248346

## iter 8 value 2.248345

## iter 9 value 2.248345

## iter 10 value 2.248341

## iter 11 value 2.248332

## iter 12 value 2.248331

## iter 13 value 2.248330

## iter 13 value 2.248330

## iter 13 value 2.248330

## final value 2.248330

## converged

## initial value 2.248862

## iter 2 value 2.248857

## iter 3 value 2.248855

## iter 4 value 2.248855

## iter 5 value 2.248854

## iter 6 value 2.248854

## iter 7 value 2.248854

## iter 8 value 2.248854

## iter 9 value 2.248853

## iter 10 value 2.248853

## iter 10 value 2.248853

## iter 10 value 2.248853

## final value 2.248853

## converged

## <><><><><><><><><><><><><><>

##

## Coefficients:

## Estimate SE t.value p.value

## ar1 1.3512 0.0416 32.4933 0

## ar2 -0.4612 0.0417 -11.0687 0

## xmean 61.8585 4.0039 15.4494 0

##

## sigma^2 estimated as 89.33436 on 450 degrees of freedom

##

## AIC = 7.353244 AICc = 7.353362 BIC = 7.389587

##

RecSARIMAdiag$fit

##

## Call:

## arima(x = xdata, order = c(p, d, q), seasonal = list(order = c(P, D, Q), period = S),

## xreg = xmean, include.mean = FALSE, transform.pars = trans, fixed = fixed,

## optim.control = list(trace = trc, REPORT = 1, reltol = tol))

##

## Coefficients:

## ar1 ar2 xmean

## 1.3512 -0.4612 61.8585

## s.e. 0.0416 0.0417 4.0039

##

## sigma^2 estimated as 89.33: log likelihood = -1661.51, aic = 3331.02

RecSARIMAdiag$ICs

## AIC AICc BIC

## 7.353244 7.353362 7.389587

Overfitting caveat #

- If we choose an order that is too high, the unnecessary parameters will be insignificant, but this reduces the efficiency of the parameters that are significant. This might look like a better fit, but might lead to bad forecasts. Hence, this should be avoided.

- This can be used as a diagnostic though: if we increment the order and we get similar parameter estimates, then the smaller (original) model should have the appropriate order.

Although generally not well-known, it is an obvious fact to seasoned modellers that extrapolating overfitted models is almost guaranteed to lead to aberrant results.

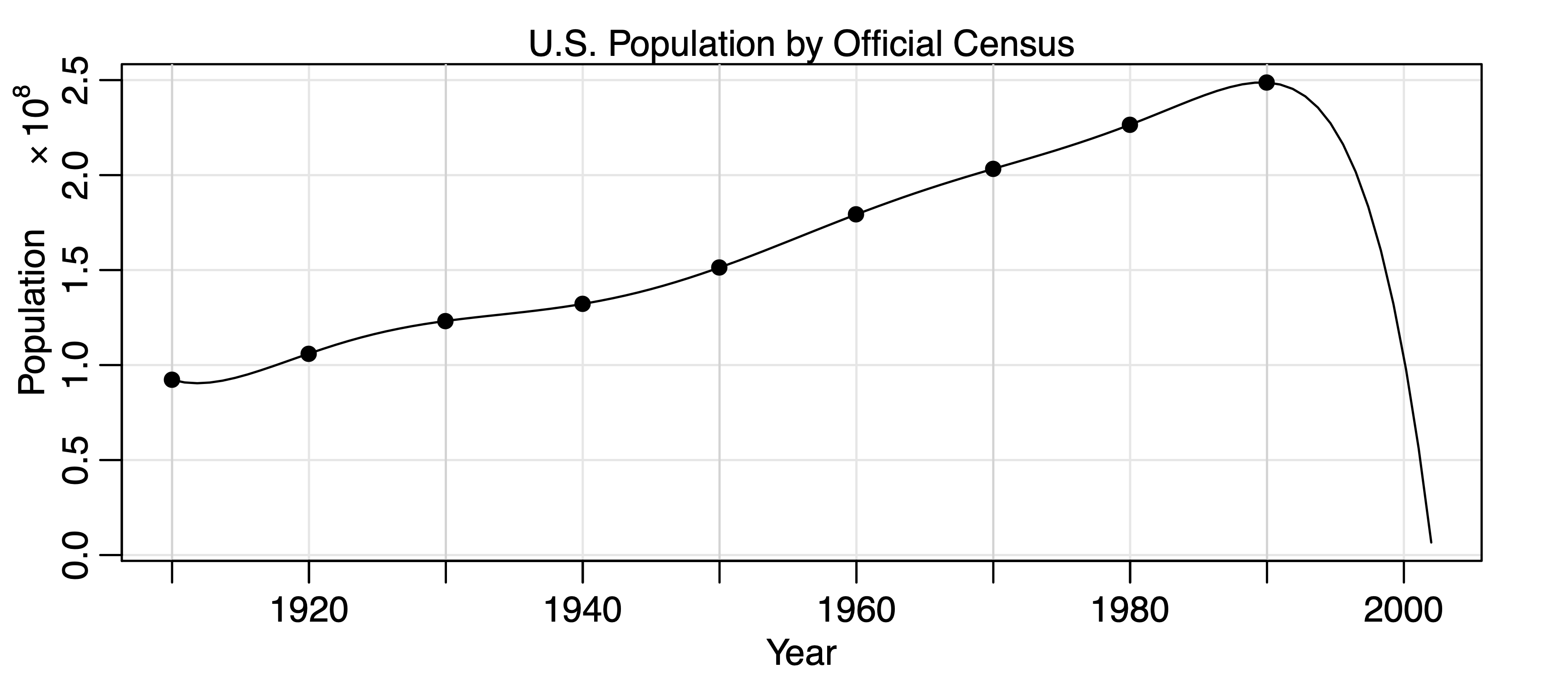

Example: Overfitting caveat #

- shows the U.S. population by official census, every ten years from 1910 to 1990, as points. If we use these nine observations to predict the future population, we can use an eight-degree polynomial so the fit to the nine observations is perfect.

- The model predicts that the population of the United States will be close to zero in the year 2000, and will cross zero sometime

in the year 2002!

When seasonality is involved #

The process described above is applied by analogy when some seasonality is present in the residuals:

- Of course, the first step is to include a seasonal component in the trend if appropriate (such as with

- The series is then “in-season” and “out-of-season” differenced to lead to stationarity (if needed).

- Peaks in the ACF and PACF are then analysed with the tables presented at the beginning of this section, and eliminated with an appropriate

- Goodness-of-fit is assessed as usual by examining the whiteness of the residuals

(e.g., this can be done thanks to the diagnostics ofsarima)

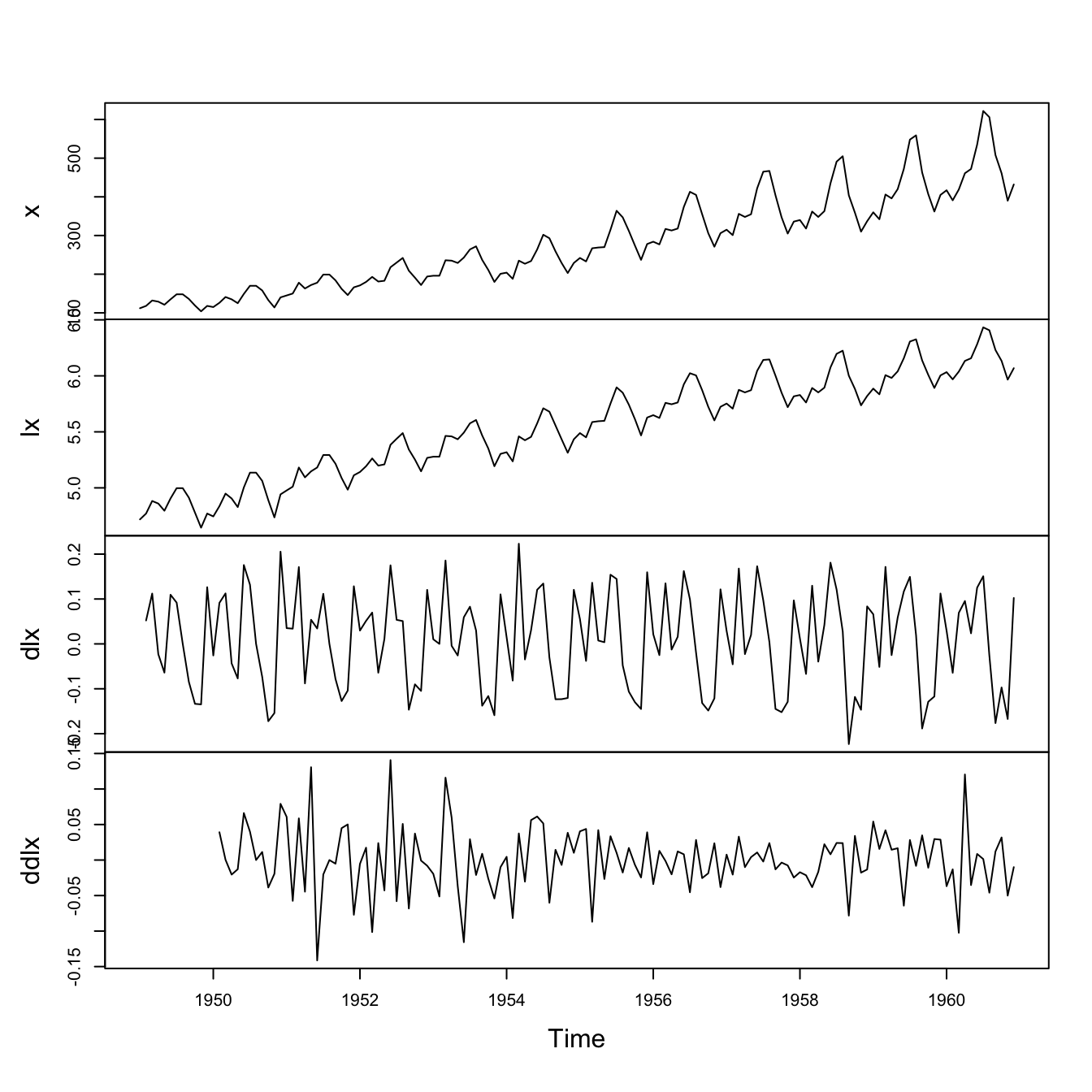

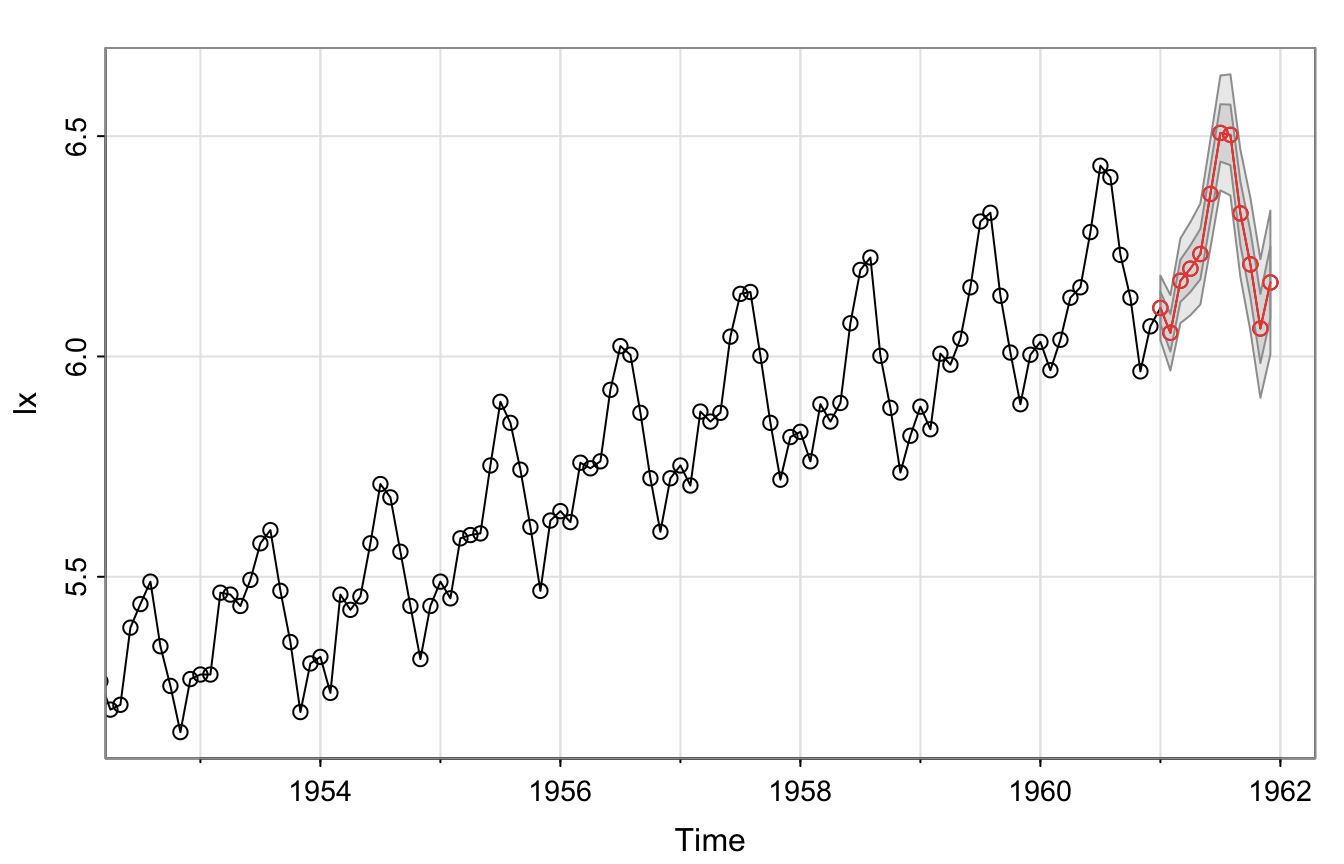

Example: Air Passengers #

Remember the steps 1.-2. performed in the previous module:

x <- AirPassengers

lx <- log(x)

dlx <- diff(lx)

ddlx <- diff(dlx, 12)

plot.ts(cbind(x, lx, dlx, ddlx), main = "")

This corresponds to step 2 above, and had led to residuals that look reasonably stationary.

We now need to choose a model for them.

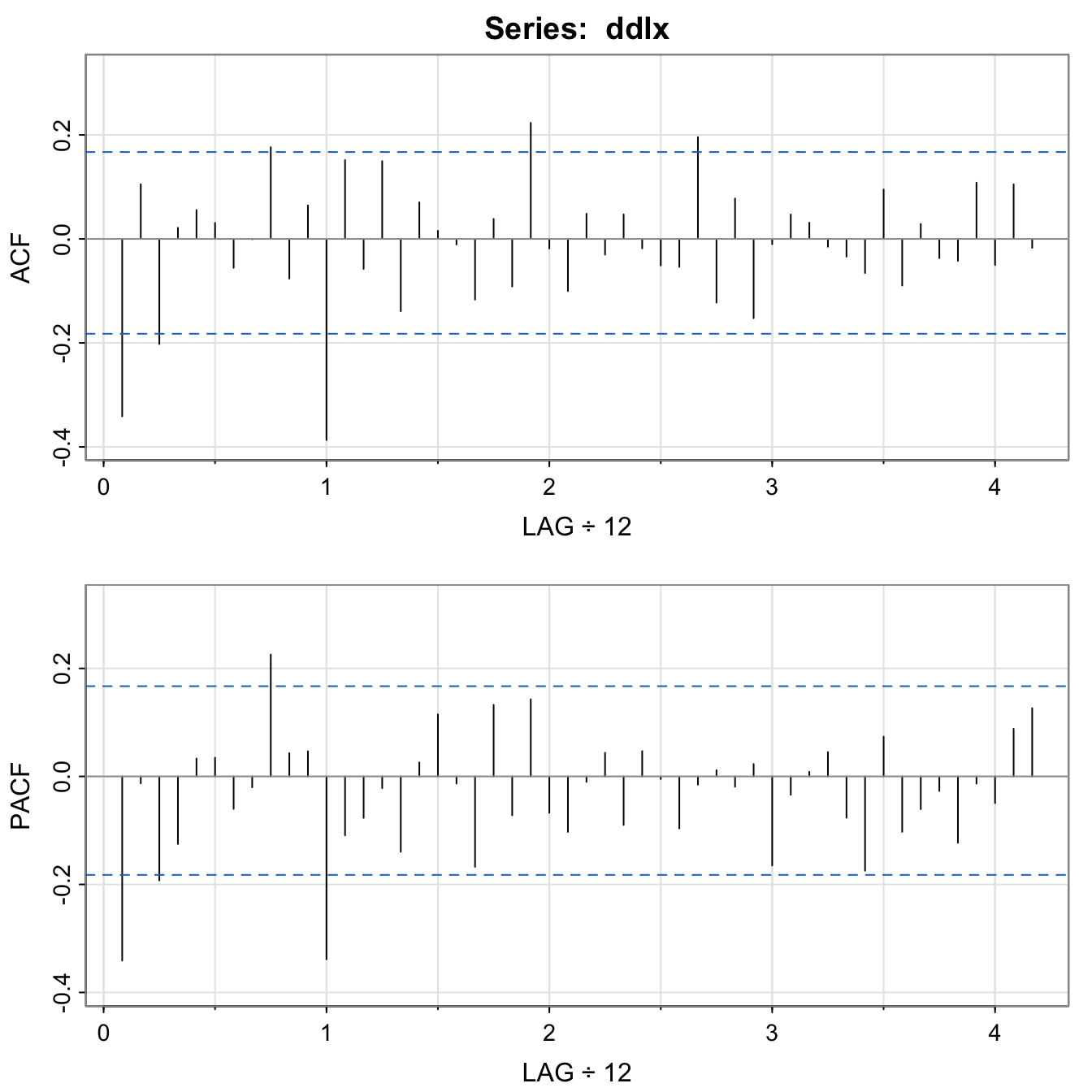

acf2(ddlx, 50)

## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10] [,11]

## ACF -0.34 0.11 -0.20 0.02 0.06 0.03 -0.06 0.00 0.18 -0.08 0.06

## PACF -0.34 -0.01 -0.19 -0.13 0.03 0.03 -0.06 -0.02 0.23 0.04 0.05

## [,12] [,13] [,14] [,15] [,16] [,17] [,18] [,19] [,20] [,21]

## ACF -0.39 0.15 -0.06 0.15 -0.14 0.07 0.02 -0.01 -0.12 0.04

## PACF -0.34 -0.11 -0.08 -0.02 -0.14 0.03 0.11 -0.01 -0.17 0.13

## [,22] [,23] [,24] [,25] [,26] [,27] [,28] [,29] [,30] [,31]

## ACF -0.09 0.22 -0.02 -0.1 0.05 -0.03 0.05 -0.02 -0.05 -0.05

## PACF -0.07 0.14 -0.07 -0.1 -0.01 0.04 -0.09 0.05 0.00 -0.10

## [,32] [,33] [,34] [,35] [,36] [,37] [,38] [,39] [,40] [,41]

## ACF 0.20 -0.12 0.08 -0.15 -0.01 0.05 0.03 -0.02 -0.03 -0.07

## PACF -0.02 0.01 -0.02 0.02 -0.16 -0.03 0.01 0.05 -0.08 -0.17

## [,42] [,43] [,44] [,45] [,46] [,47] [,48] [,49] [,50]

## ACF 0.10 -0.09 0.03 -0.04 -0.04 0.11 -0.05 0.11 -0.02

## PACF 0.07 -0.10 -0.06 -0.03 -0.12 -0.01 -0.05 0.09 0.13

Seasonal component:

- At the seasons, the ACF appears to be cutting off at lag

- PACF appears to be tailing off at lags

Non-Seasonal component:

- We inspect the ACF and PACF at lower lags.

- Both appear to be tailing off, which suggests ARMA within the seasons, say with

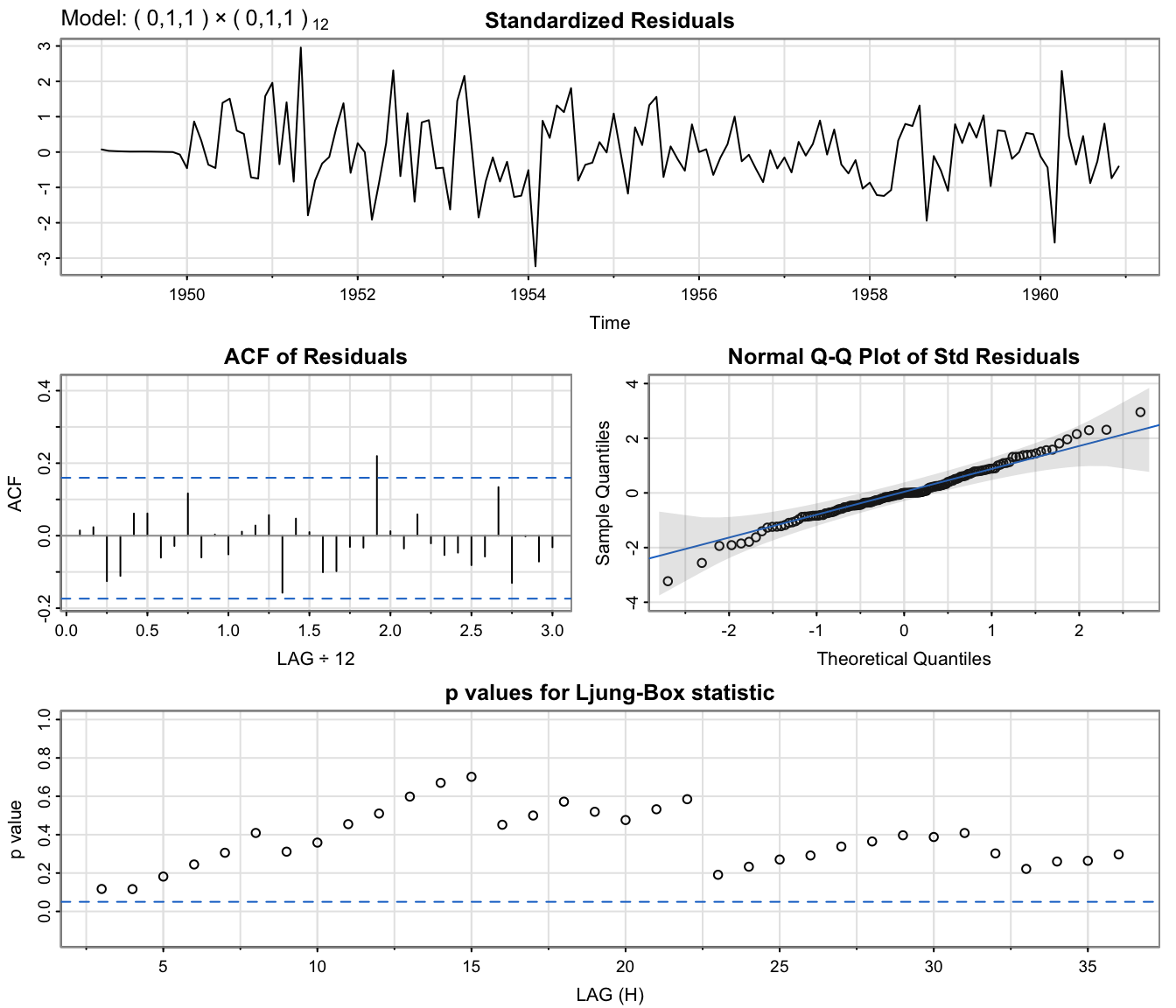

Thus, we will first try an

AirPassFit1 <- sarima(lx, 1, 1, 1, 0, 1, 1, 12)

AirPassFit1$fit

##

## Call:

## arima(x = xdata, order = c(p, d, q), seasonal = list(order = c(P, D, Q), period = S),

## include.mean = !no.constant, transform.pars = trans, fixed = fixed, optim.control = list(trace = trc,

## REPORT = 1, reltol = tol))

##

## Coefficients:

## ar1 ma1 sma1

## 0.1960 -0.5784 -0.5643

## s.e. 0.2475 0.2132 0.0747

##

## sigma^2 estimated as 0.001341: log likelihood = 244.95, aic = -481.9

The AR parameter is not significant, so we try dropping one parameter from the within seasons part. We will try

AirPassFit2 <- sarima(lx, 0, 1, 1, 0, 1, 1, 12) # ARIMA(0,1,1)x(0,1,1)_12

AirPassFit3 <- sarima(lx, 1, 1, 0, 0, 1, 1, 12) # ARIMA(1,1,0)x(0,1,1)_12

AirPassFit2$fit

...

## Coefficients:

## ma1 sma1

## -0.4018 -0.5569

## s.e. 0.0896 0.0731

##

## sigma^2 estimated as 0.001348: log likelihood = 244.7, aic = -483.4

...

AirPassFit3$fit

...

## Coefficients:

## ar1 sma1

## -0.3395 -0.5619

## s.e. 0.0822 0.0748

##

## sigma^2 estimated as 0.001367: log likelihood = 243.74, aic = -481.49

...

AirPassFit2$ICs

## AIC AICc BIC

## -3.690069 -3.689354 -3.624225

AirPassFit3$ICs

## AIC AICc BIC

## -3.675493 -3.674777 -3.609649

All information criteria prefer the

AirPassFit2 <- sarima(lx, 0, 1, 1, 0, 1, 1, 12) # ARIMA(0,1,1)x(0,1,1)_12

Except for 1-2 outliers, the model fits well.

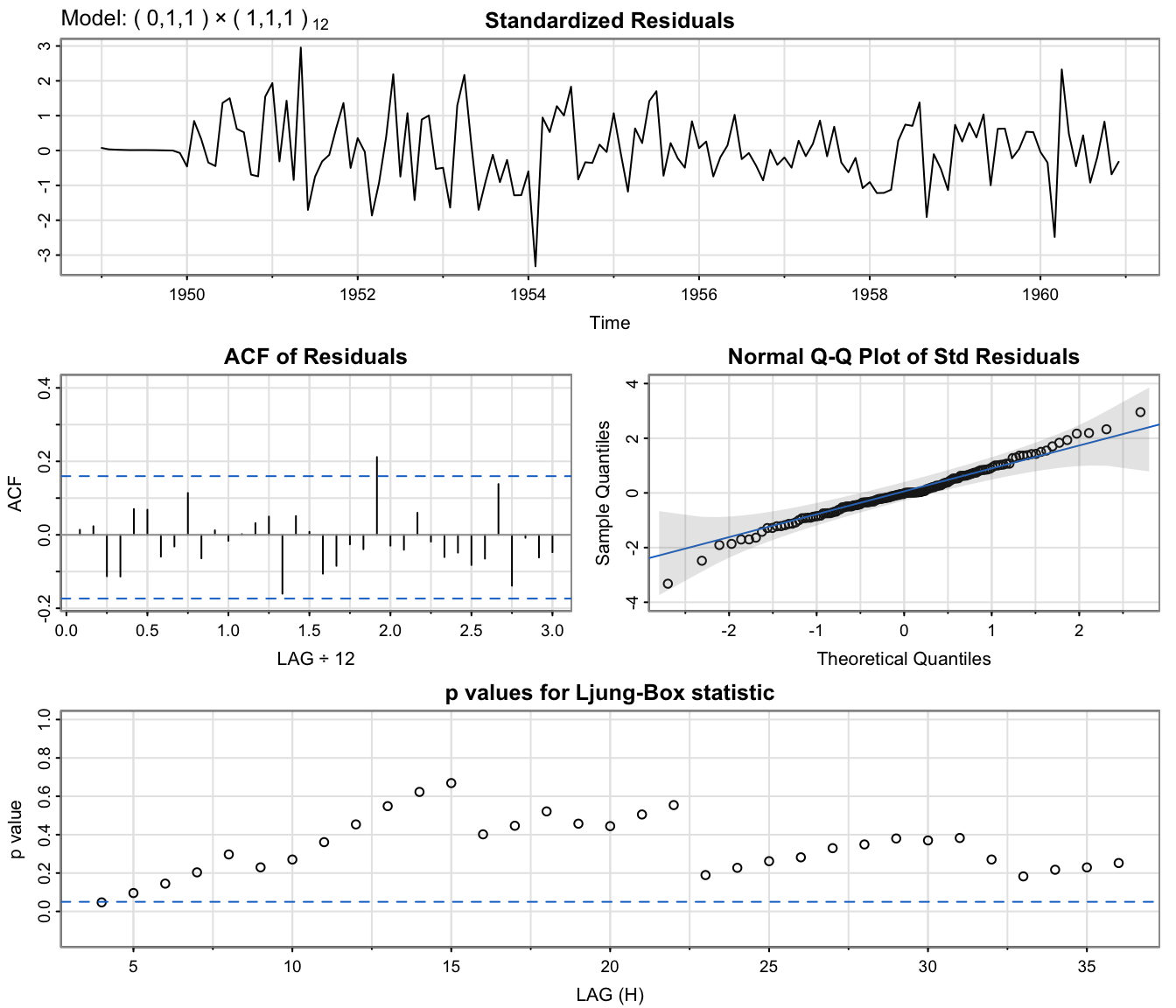

Checking whether we should have

AirPassFit4 <- sarima(lx, 0, 1, 1, 1, 1, 1, 12) # ARIMA(0,1,1)x(1,1,1)_12

AirPassFit2$ICs

## AIC AICc BIC

## -3.690069 -3.689354 -3.624225

AirPassFit4$ICs

## AIC AICc BIC

## -3.678726 -3.677284 -3.590934

All information criteria prefer the

AirPassFit4 <- sarima(lx, 0, 1, 1, 1, 1, 1, 12) # ARIMA(0,1,1)x(1,1,1)_12

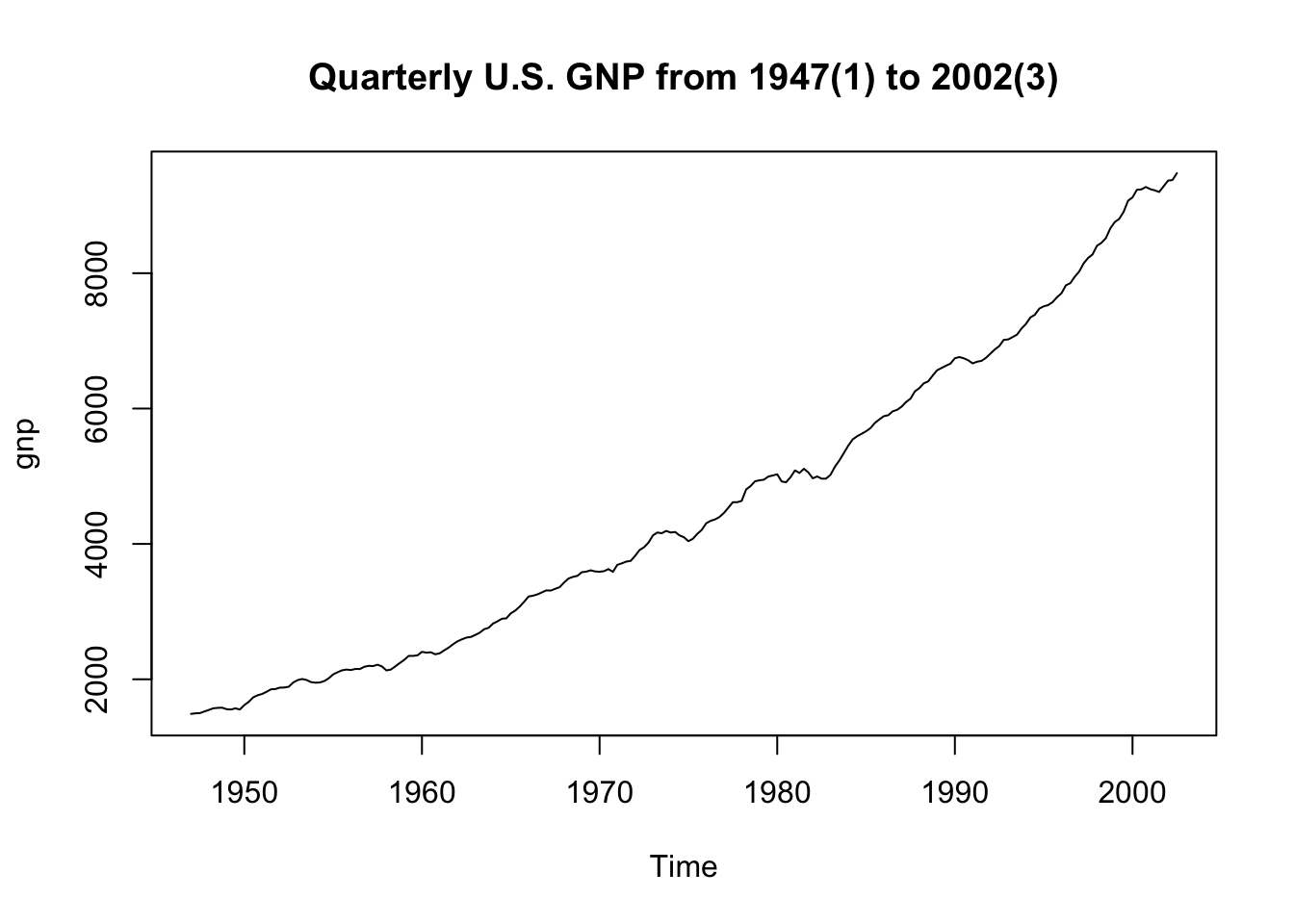

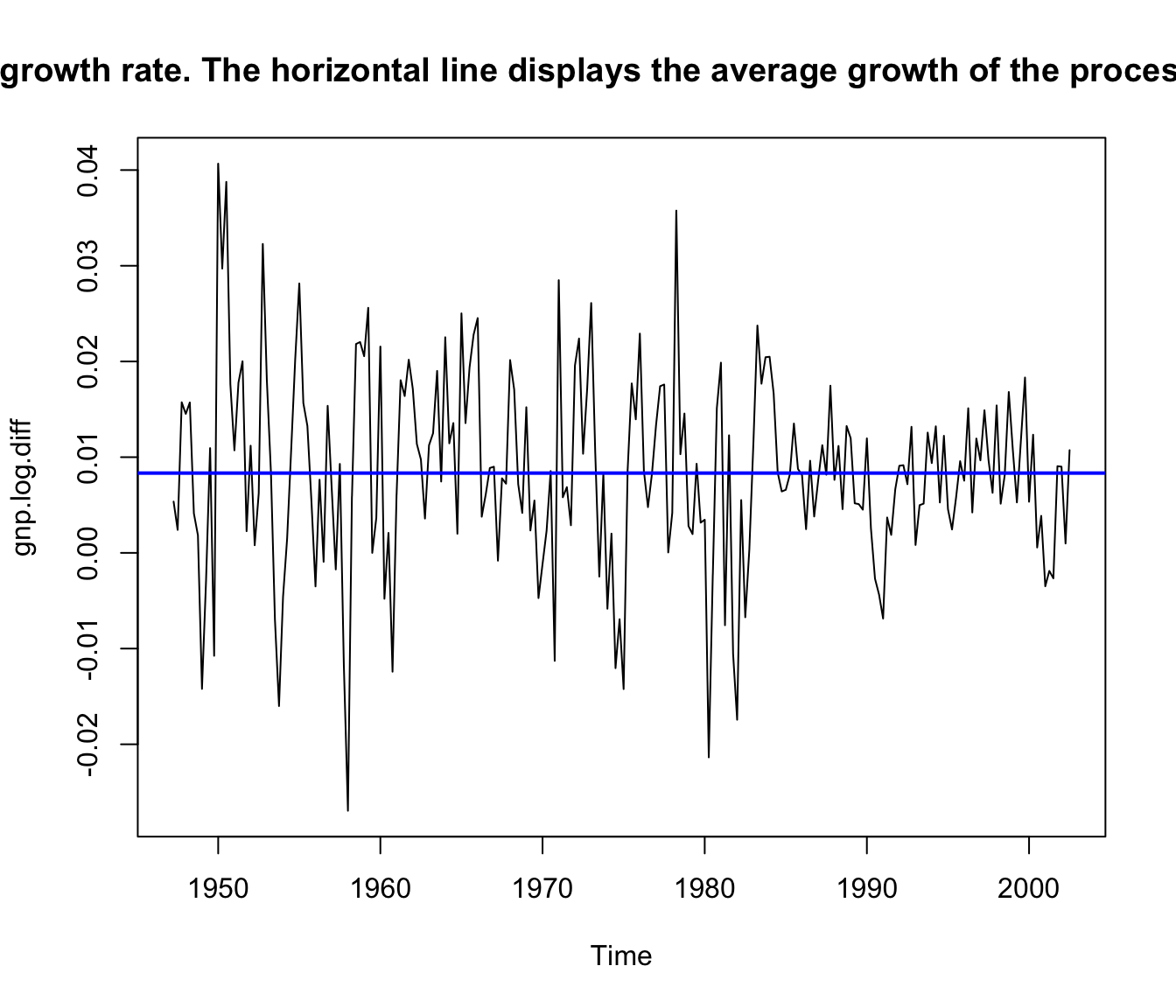

Complete case study: GNP data #

plot(gnp, main = "Quarterly U.S. GNP from 1947(1) to 2002(3)")

GNP data seems to have exponential growth,

so a log transformation might be appropriate.

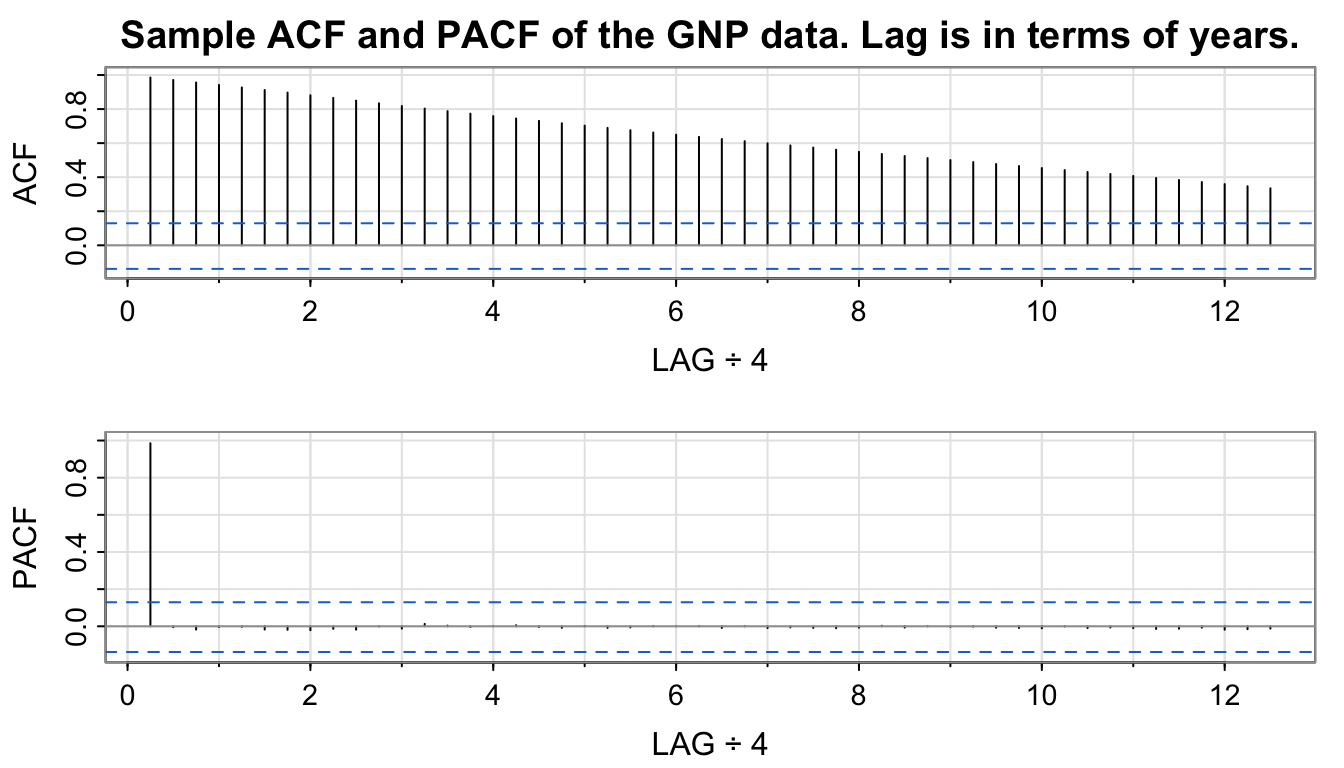

acf2(gnp, 50, main = "Sample ACF and PACF of the GNP data. Lag is in terms of years.")

...

## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10] [,11]

## ACF 0.99 0.97 0.96 0.94 0.93 0.91 0.90 0.88 0.87 0.85 0.83

## PACF 0.99 0.00 -0.02 0.00 0.00 -0.02 -0.02 -0.02 -0.01 -0.02 0.00

...

Slow decay of ACF

Making those two modifications leads to

gnp.log.diff <- diff(log(gnp)) # growth rate plot(gnpgr)

ts.plot(gnp.log.diff, main = "U.S. GNP quarterly growth rate. The horizontal line displays the average growth of the process, which is close to 1%.")

abline(mean(gnp.log.diff), 0, col = "blue", lwd = 2)

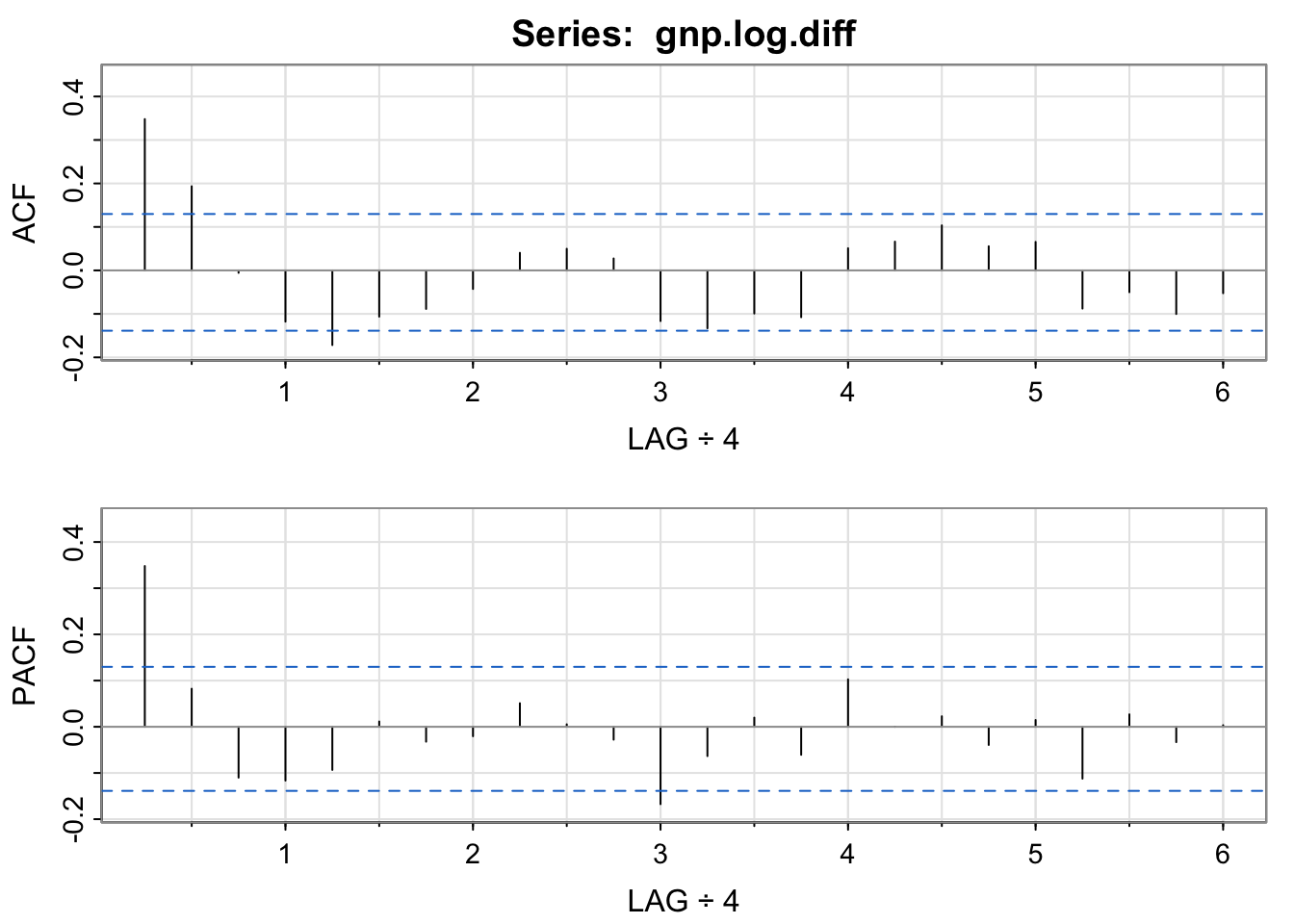

acf2(gnp.log.diff, 24)

...

## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10] [,11]

## ACF 0.35 0.19 -0.01 -0.12 -0.17 -0.11 -0.09 -0.04 0.04 0.05 0.03

## PACF 0.35 0.08 -0.11 -0.12 -0.09 0.01 -0.03 -0.02 0.05 0.01 -0.03

...

GNP data:

GNP.MA2 <- sarima(gnp.log.diff, 0, 0, 2) # MA(2)

GNP.MA2$fit

##

## Call:

## arima(x = xdata, order = c(p, d, q), seasonal = list(order = c(P, D, Q), period = S),

## xreg = xmean, include.mean = FALSE, transform.pars = trans, fixed = fixed,

## optim.control = list(trace = trc, REPORT = 1, reltol = tol))

##

## Coefficients:

## ma1 ma2 xmean

## 0.3028 0.2035 0.0083

## s.e. 0.0654 0.0644 0.0010

##

## sigma^2 estimated as 8.919e-05: log likelihood = 719.96, aic = -1431.93

GNP.MA2$ICs

## AIC AICc BIC

## -6.450133 -6.449637 -6.388823

GNP data:

GNP.AR1 <- sarima(gnp.log.diff, 1, 0, 0) # AR(1)

GNP.AR1$fit

##

## Call:

## arima(x = xdata, order = c(p, d, q), seasonal = list(order = c(P, D, Q), period = S),

## xreg = xmean, include.mean = FALSE, transform.pars = trans, fixed = fixed,

## optim.control = list(trace = trc, REPORT = 1, reltol = tol))

##

## Coefficients:

## ar1 xmean

## 0.3467 0.0083

## s.e. 0.0627 0.0010

##

## sigma^2 estimated as 9.03e-05: log likelihood = 718.61, aic = -1431.22

GNP.AR1$ICs

## AIC AICc BIC

## -6.446940 -6.446693 -6.400958

GNP data:

GNP.ARMA <- sarima(gnp.log.diff, 1, 0, 2) # ARMA(1,2)

GNP.ARMA$fit

##

## Call:

## arima(x = xdata, order = c(p, d, q), seasonal = list(order = c(P, D, Q), period = S),

## xreg = xmean, include.mean = FALSE, transform.pars = trans, fixed = fixed,

## optim.control = list(trace = trc, REPORT = 1, reltol = tol))

##

## Coefficients:

## ar1 ma1 ma2 xmean

## 0.2407 0.0761 0.1623 0.0083

## s.e. 0.2066 0.2026 0.0851 0.0010

##

## sigma^2 estimated as 8.877e-05: log likelihood = 720.47, aic = -1430.95

GNP.ARMA$ICs

## AIC AICc BIC

## -6.445712 -6.444882 -6.369075

GNP data: Comparison #

Note fitted models are

- MA(2):

- AR(1):

- In fact, both models are nearly the same. This is because the AR model can be rewritten (ignoring the constant) as

formatC(ARMAtoMA(ar = 0.35, ma = 0, 6), digits = 3)

## [1] "0.35" "0.122" "0.0429" "0.015" "0.00525" "0.00184"

GNP data: Model selection #

- Information criteria (the lower the better):

- AR(1):

$AIC: -6.446940$AICc: -6.446693$BIC: -6.400958 - MA(2):

$AIC: -6.450133$AICc: -6.449637$BIC: -6.388823 - ARMA(1,2):

$AIC: -6.445712$AICc: -6.444882$BIC: -6.369075

- AR(1):

- The AIC and AICc both prefer the MA(2) fit to AR(1)

- The BIC prefers the simpler AR(1) model to MA(2).

- It is often the case that the BIC will select a model of smaller order than the AIC or AICc. In either case, it is not unreasonable to retain the AR(1) because pure autoregressive models are easier to work with.

- Combining the two to

Side comment: compare with ARIMA(1,1,2) on log(GNP) #

GNP.MA2 <- sarima(log(gnp), 1, 1, 2) # ARIMA(1,1,2)

GNP.MA2$fit

##

## Call:

## arima(x = xdata, order = c(p, d, q), seasonal = list(order = c(P, D, Q), period = S),

## xreg = constant, transform.pars = trans, fixed = fixed, optim.control = list(trace = trc,

## REPORT = 1, reltol = tol))

##

## Coefficients:

## ar1 ma1 ma2 constant

## 0.2407 0.0761 0.1623 0.0083

## s.e. 0.2066 0.2026 0.0851 0.0010

##

## sigma^2 estimated as 8.877e-05: log likelihood = 720.47, aic = -1430.95

GNP.MA2$ICs

## AIC AICc BIC

## -6.445711 -6.444880 -6.369074

Appendix: Method of moments for

We seek to estimate

Using the method of moments, we replace

- For

- The Yule-Walker estimators are essentially least square estimators, which work well on AR models because they are linear. MA (and ARMA) processes are nonlinear in the parameters, and will fare badly with the Yule-Walker estimators.

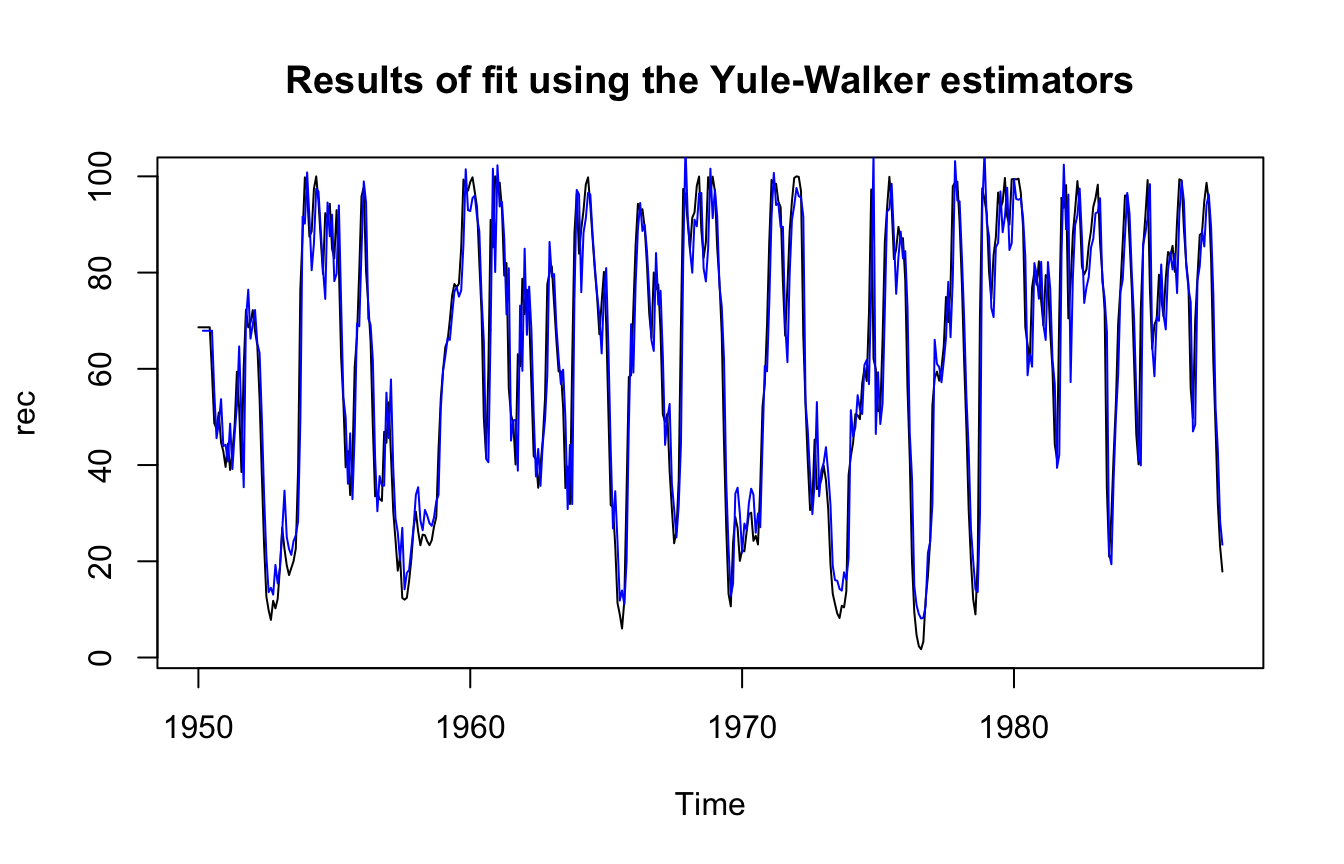

Example: Recruitment series #

Yule-Walker estimators are implemented in R as follows:

rec.yw <- ar.yw(rec, order = 2)

rec.yw$x.mean # (mean estimate)

## [1] 62.26278

rec.yw$ar # (coefficient estimates)

## [1] 1.3315874 -0.4445447

sqrt(diag(rec.yw$asy.var.coef)) # (standard errors)

## [1] 0.04222637 0.04222637

rec.yw$var.pred # (error variance estimate)

## [1] 94.79912

The fit is then displayed as follows:

ts.plot(rec, main = "Results of fit using the Yule-Walker estimators")

lines(rec[1:length(rec)] - rec.yw$resid, col = "blue", lwd = 1)

The estimators obtained are nearly identical to the MLE ones (although MLE has lower variance as expected). It can be shown that this will typically be the case for AR models.

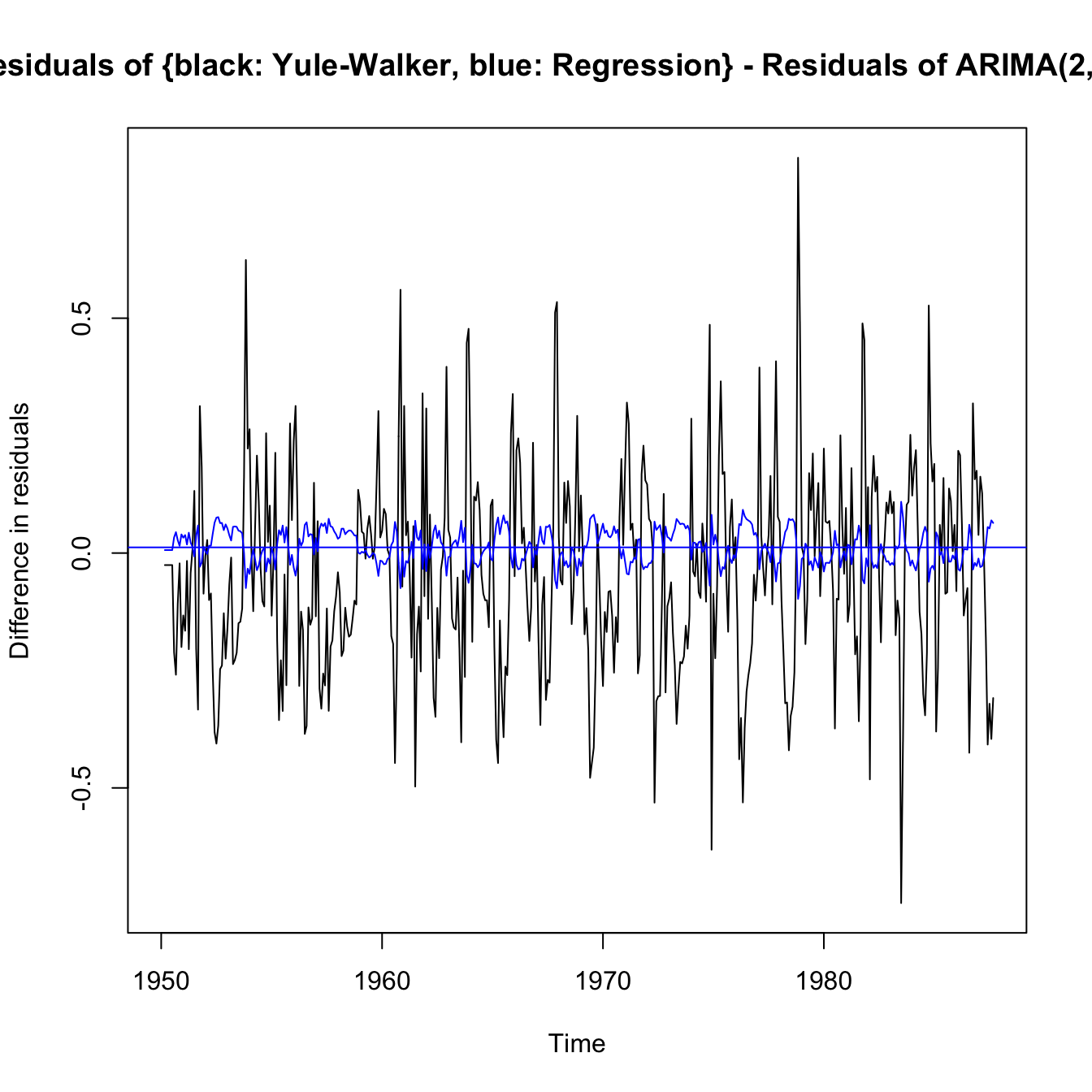

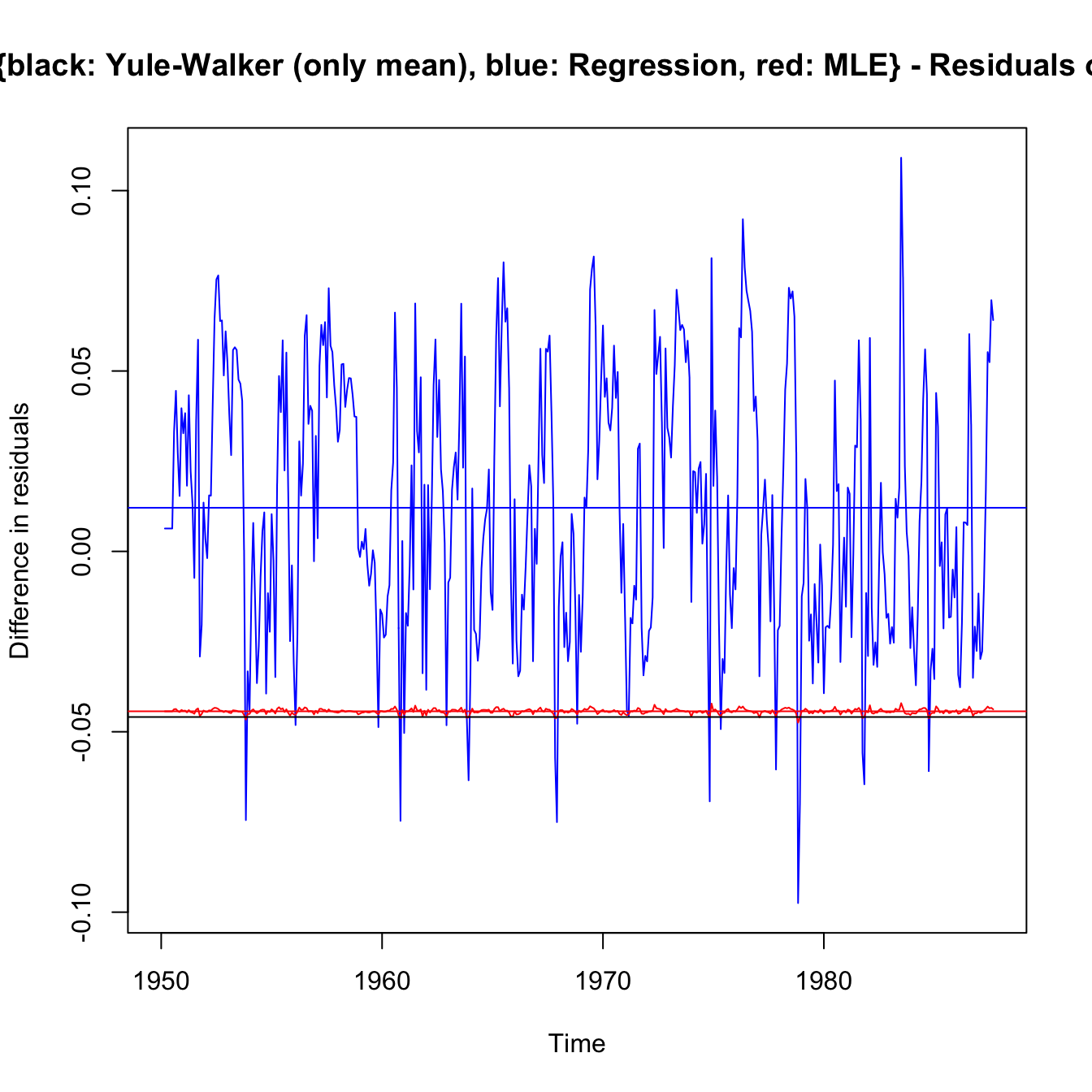

ts.plot(rec.yw$resid - rec.arima$fit$residuals, ylab = "Difference in residuals",

main = "Residuals of {black: Yule-Walker, blue: Regression} - Residuals of ARIMA(2,0,0) fit")

abline(a = mean((rec.yw$resid[3:length(rec)] - rec.arima$fit$residuals[length(rec)])^1),

0, col = "black")

lines(regr$resid - rec.arima$fit$residuals, col = "blue")

abline(a = mean((regr$resid[3:length(rec)] - rec.arima$fit$residuals[3:length(rec)])^1),

0, col = "blue")

ts.plot(regr$resid - rec.arima$fit$residuals, col = "blue", ylab = "Difference in residuals",

main = "Residuals of {black: Yule-Walker (only mean), blue: Regression, red: MLE} - Residuals of ARIMA(2,0,0) fit")

abline(a = mean((regr$resid[3:length(rec)] - rec.arima$fit$residuals[3:length(rec)])^1),

0, col = "blue")

lines(rec.mle$resid - rec.arima$fit$residuals, col = "red")

abline(a = mean((rec.mle$resid[3:length(rec)] - rec.arima$fit$residuals[3:length(rec)])),

0, col = "red")

# lines(rec.yw$resid-rec.arima$fit$residuals,col='black')

abline(a = mean((rec.yw$resid[3:length(rec)] - rec.arima$fit$residuals[3:length(rec)])^1),

0, col = "black")

| Model residuals |

Mean | arima |

|

|---|---|---|---|

regr$resid[3:length(rec)] |

0.000 | -0.020 | 40343 |

rec.yw$resid[3:length(rec)] |

-0.021 | -0.041 | 40369 |

rec.mle$resid[3:length(rec)] |

-.020 | -0.040 | 40344 |

rec.arima$fit$residuals[3:length(rec)] |

0.020 | - | 404344 |

- Yule-Walker and MLE are very close (location) as pointed out earlier.

- Yule-Walker is focussed on moments irrespective of residuals, so it is the poorest in terms of least squares.

- As compared to Yule-Walker and MLE, the

arimamethodology sacrifices some Least Square performance in favour of a better fit (mean of residuals is closer to 0). Flatness of MLE (in red) suggestsarimais a shifted MLE. It seems to be the best compromise. - The regression is pure least squares, and minimises them, and has 0 mean residuals. However, it can’t be the preferred model as it ignores the fact that the model is autoregressive, and is not regressed on an independent, assumed known and independent, series. Forecasts (and their standard errors) should not be trusted.

(see next slide for code that generated numbers)

mean((regr$resid[3:length(rec)] - rec.arima$fit$residuals[3:length(rec)])^1)

## [1] 0.01208978

mean((rec.yw$resid[3:length(rec)] - rec.arima$fit$residuals[3:length(rec)])^1)

## [1] -0.04591007

mean((rec.mle$resid[3:length(rec)] - rec.arima$fit$residuals[3:length(rec)])^1)

## [1] -0.04432752

mean(regr$resid[3:length(rec)]^1)

## [1] -3.17507e-13

mean(rec.yw$resid[3:length(rec)]^1)

## [1] -0.05799985

mean(rec.mle$resid[3:length(rec)]^1)

## [1] -0.05641729

mean(rec.arima$fit$residuals[3:length(rec)]^1)

## [1] -0.01208978

sum(regr$resid[3:length(rec)]^2)

## [1] 40462.39

sum(rec.yw$resid[3:length(rec)]^2)

## [1] 40491.55

sum(rec.mle$resid[3:length(rec)]^2)

## [1] 40464.39

sum(rec.arima$fit$residuals[3:length(rec)]^2)

## [1] 40463.03

Forecasting #

Introduction #

- In forecasting, the goal is to predict future values of a time series,

- We assume here that

- The problem of forecasting when the model parameters are unknown is more complicated. We mostly focus on performing predictions using R (which allows for that fact appropriately), rather than the deep technicalities of it (which are outside scope of this course).

Best Linear Predictors (BLPs) #

We will restrict our attention to predictors that are linear functions of the data, that is, predictors of the form

Note:

- In fact the

- Such estimators depend only on the second-order moments of the process, which are easy to estimate from the data.

- Most actuarial credibility estimators belong to the family of BLPs (Bühlmann, Bühlmann-Straub,

Best Linear Prediction for Stationary Processes #

Given data

- The

- This results stems from minimising least squares.

If

One-step-ahead prediction #

- Given

- The BLP of

- In this case,

- Using the BLP result above, the coefficients

[Note that these correspond to the Yule-Walker equations.]

- The matrix

- The one-step-ahead forecast is then

- The mean square one-step-ahead prediction error is

The prediction equations (and associated mean-square errors) of the one-step-ahead prediction can be found iteratively thanks to the Durbin-Levinson Algorithm:

- Initial values:

- For all

- If

- For

Concluding notes #

- The results shown above are provided to illustrate the process of forecasting. Students are not expected to be able to show the results.

- There are other algorithms for calculating one-step-ahead forecasts, such as the innovations algorithm (outside scope).

Forecasting ARMA processes #

Introduction #

- The technical side of forecasting ARMA models can get involved.

- Throughout, we assume

- In the non-zero mean case

Two types of forecasts #

We consider two types of forecasts:

- The minimum mean square error predictor of

- The predictor of

Note:

- For ARMA models,

- In general,

- The idea is that, for large samples,

Forecasts for more than one step #

Write

Prediction is then accomplished recursively using

This illustrates why it is easier to forecast with

Mean-square prediction error is calculated next, but we need an intermediary result first - an expression for

Write

Mean-square prediction error can now be calculated thanks to

Note that for a fixed sample size,

Predictions in practice #

- When

- However, when

- The truncated predictor is then written as

- The mean square prediction error, in this case, is approximated using

Truncated prediction for ARMA #

For

- For

- The above approximation is required for

Long-range forecasts #

- Consider forecasting an ARMA process with mean

- Replacing

- Because the

- Moreover, the mean square prediction error

- ARMA forecasts quickly settle to the mean with a constant prediction error as the forecast horizon,

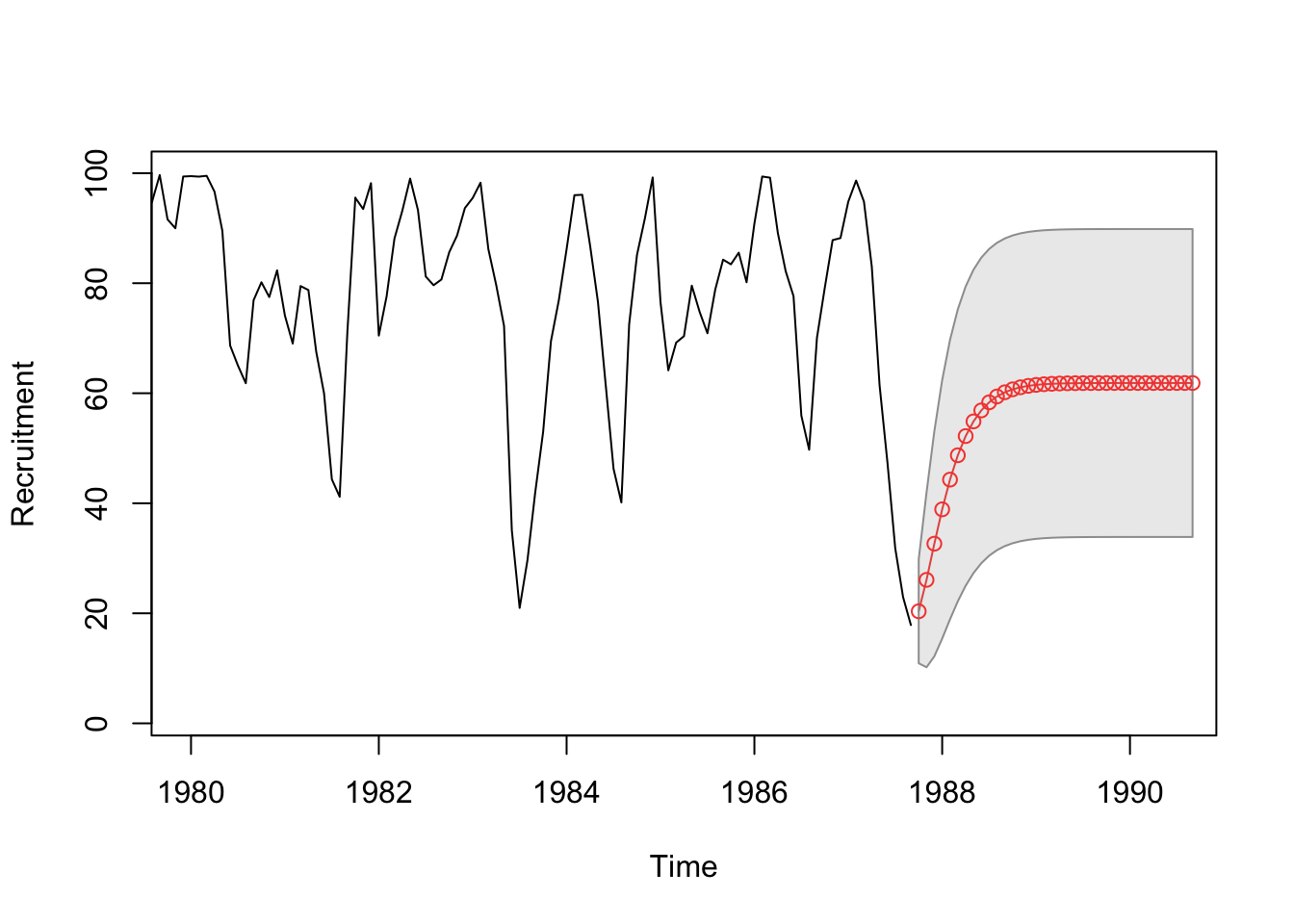

Example: Recruitment Series #

fore2 <- predict(rec.arima0, n.ahead = 36)

cbind(fore2$pred, fore2$se)

...

## fore2$pred fore2$se

## Oct 1987 20.36547 9.451686

## Nov 1987 26.08036 15.888378

## Dec 1987 32.65148 20.464325

## Jan 1988 38.89474 23.492457

## Feb 1988 44.30006 25.393693

## Mar 1988 48.72437 26.537088

## Apr 1988 52.20958 27.199368

## May 1988 54.87831 27.570234

## Jun 1988 56.87693 27.771616

## Jul 1988 58.34666 27.877923

## Aug 1988 59.41079 27.932597

## Sep 1988 60.17081 27.960042

## Oct 1988 60.70697 27.973508

## Nov 1988 61.08091 27.979974

## Dec 1988 61.33890 27.983014

...

ts.plot(rec, fore2$pred, col = 1:2, xlim = c(1980, 1990.5), ylab = "Recruitment")

U <- fore2$pred + fore2$se

L <- fore2$pred - fore2$se

xx <- c(time(U), rev(time(U)))

yy <- c(L, rev(U))

polygon(xx, yy, border = 8, col = gray(0.6, alpha = 0.2))

lines(fore2$pred, type = "p", col = 2)

Example: Air Passengers #

We forecast the model chosen in the previous section:

sarima.for(lx, 12, 0, 1, 1, 0, 1, 1, 12)

Including seasonality leads (apparently) to much higher precision.

References #

Shumway, Robert H., and David S. Stoffer. 2017. Time Series Analysis and Its Applications: With r Examples. Springer.