Introduction: Models for aggregate losses #

A portfolio of contracts or a contract will potentially experience a sequence of losses:

- How many losses will occur?

  - if deterministic: Individual Risk Model

  - if random: Collective Risk Model

- How do they relate to each other?

  - usual assumption: iid

- When do these losses occur?

  - usual assumption: no time value of money

- How big are these losses?

The Individual Risk Model #

Definition #

The Individual Risk Model #

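In the Individual Risk Model, aggregate losses are

$$S = X_1 + X_2 + \dots + X_n,$$

where $X_i$ is the loss on contract $i$ and the number of contracts $n$ is deterministic. Techniques to

- get the whole distribution of $S$:

  - Convolutions

  - Generating functions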

- (

Convolutions of random variables #

In probability, the operation of determining the distribution of the sum of two independent random variables $X$ and $Y$ is called a convolution. It is denoted by $F_{X+Y} = F_X * F_Y$.

Formulas #

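In short, for independent $X$ and $Y$ with $S = X + Y$:

- Discrete case:

  - df: $F_S(s) = \sum_{y} F_X(s-y)\, f_Y(y)$

  - pmf: $f_S(s) = \sum_{y} f_X(s-y)\, f_Y(y)$

- Continuous case:

  - cdf: $F_S(s) = \int_{-\infty}^{\infty} F_X(s-y)\, f_Y(y)\, dy$

  - pdf: $f_S(s) = \int_{-\infty}^{\infty} f_X(s-y)\, f_Y(y)\, dy$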

Examples:

- discrete case: Bowers et al. (1997) Example 2.3.1 on page 35

- continuous case: Bowers et al. (1997) Example 2.3.2 on page 36

Numerical example #

Consider 3 discrete r.v.’s with probability mass functions

Calculate the pmf

Solution #

Using generating functions #

There is a 1-1 relation between a distribution and its mgf or pgf.

Because

- Sometimes,

- Otherwise,

Example #

Consider a portfolio of 10 contracts. The losses

- Normal;

- Gamma;

- Poisson.
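To illustrate the generating-function route on the Poisson case, here is a short derivation. It assumes the ten losses are independent with $X_i \sim \text{Poisson}(\lambda_i)$; the parameters $\lambda_i$ are placeholders and are not taken from the original example.

$$
M_S(t) = \prod_{i=1}^{10} M_{X_i}(t)
       = \prod_{i=1}^{10} \exp\{\lambda_i (e^t - 1)\}
       = \exp\Big\{\Big(\sum_{i=1}^{10} \lambda_i\Big)(e^t - 1)\Big\}.
$$

This is the mgf of a Poisson distribution with parameter $\sum_{i=1}^{10} \lambda_i$, so by the 1-1 relation between a distribution and its mgf, $S$ is Poisson with that parameter.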

Using R #

- Contrary to Excel, convolutions are extremely easy to implement in R using vectors.

f1 <- c(1/4, 1/2, 1/4, 0, 0)

f2 <- c(1/2, 0, 1/2, 0, 0)

f12 <- c(f1[1] * f2[1], sum(f1[1:2] * f2[2:1]), sum(f1[1:3] *

f2[3:1]), sum(f1[1:4] * f2[4:1]), sum(f1[1:5] * f2[5:1]))

f12

## [1] 0.125 0.250 0.250 0.250 0.125

- The example above is generalised in Exercise `los9R`.

- A more advanced R function is `convolve`. It actually involves the Fast Fourier Transform (a method that is related to that of the mgf’s) for efficiency. We do not discuss this here, but it is used in the implementation of convolutions in the function `aggregateDist` of the package `actuar` (introduced later).
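As a minimal sketch of how the hand-written convolution above could be generalised (the helper name `conv_pmf` is hypothetical and not from the course material), the loop below convolves two pmfs on 0, 1, 2, ... of any lengths. The last line uses base R's `convolve` with the `rev(...)`, `type = "open"` idiom that its help page gives for the usual convolution of two sequences.

```r
# Convolve two pmfs supported on 0, 1, 2, ... (element i holds Pr[X = i - 1]).
conv_pmf <- function(f, g) {
  out <- numeric(length(f) + length(g) - 1)
  for (i in seq_along(f)) {
    idx <- i:(i + length(g) - 1)
    out[idx] <- out[idx] + f[i] * g   # mass f[i] shifts g by i - 1 positions
  }
  out
}

f1 <- c(1/4, 1/2, 1/4)
f2 <- c(1/2, 0, 1/2)
f12 <- conv_pmf(f1, f2)
f12

# Same result, up to floating-point error, via the FFT-based base function
f12_fft <- convolve(f1, rev(f2), type = "open")
```

Unlike the explicit version above, no zero-padding of `f1` and `f2` is needed: the output length is determined from the input lengths.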

The Collective Risk Model (Compound distributions, MW 2.1) #

Definition #

Introduction #

Two models, depending on the assumption on the number of losses:

- deterministic: the Individual Risk Model

  - main focus on the claims of individual policies (whose number is a priori known)

  - discussed in previous sections

- random: the Collective Risk Model

  - main focus on claims of a whole portfolio (whose number is a priori unknown)

  - this is another way of separating frequency and severity

In this section we focus on the Collective Risk Model.

Definition #

In the Collective Risk Model, aggregate losses become

$$S = X_1 + \dots + X_N = \sum_{i=1}^{N} X_i,$$

with a random number of losses $N$.

- the

- (c)df

- p(d/m)f


- the

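Moments of $S$

We have (with the $X_i$ i.i.d. and independent of $N$)

$$E[S] = E[N]\,E[X], \qquad Var(S) = E[N]\,Var(X) + Var(N)\,E[X]^2.$$

Moment generating function of $S$

It is possible to get $M_S(t)$ as a function of $M_N$ and $M_X$:

$$M_S(t) = M_N\big(\ln M_X(t)\big).$$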
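A quick numerical illustration of the standard compound-sum identities $E[S] = E[N]E[X]$ and $Var(S) = E[N]Var(X) + Var(N)E[X]^2$. This is only a sketch: the pmfs are assumed to be those of Bowers et al. (1997), Example 12.2.2 (the vectors used in the `aggregateDist` example of these notes), and the helper names `pmf_mean` and `pmf_var` are hypothetical.

```r
# Assumed inputs (Bowers et al. 1997, Example 12.2.2)
fx <- c(0, 0.5, 0.4, 0.1)    # Pr[X = 0], ..., Pr[X = 3]
fn <- c(0.1, 0.3, 0.4, 0.2)  # Pr[N = 0], ..., Pr[N = 3]

# Mean and variance of a pmf stored on 0, 1, 2, ...
pmf_mean <- function(f) sum((seq_along(f) - 1) * f)
pmf_var  <- function(f) sum((seq_along(f) - 1)^2 * f) - pmf_mean(f)^2

ES   <- pmf_mean(fn) * pmf_mean(fx)
VarS <- pmf_mean(fn) * pmf_var(fx) + pmf_var(fn) * pmf_mean(fx)^2
c(mean = ES, variance = VarS)
```

The mean agrees with the value 2.72 reported by `mean(Fs)` in the `aggregateDist` example.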

Example (Bowers et al. (1997), 12.2.1) #

Assume that

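Distribution of $S$

It is possible to get a fairly general expression for the df of $S$ by conditioning on the number of claims:

$$F_S(x) = \sum_{n=0}^{\infty} \Pr[N = n]\, F_X^{*n}(x), \tag{1}$$

where $F_X^{*n}$ is the $n$-fold convolution of $F_X$.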

Note that

- However, the type of

Distribution of

If

- with a mass at 0 because of

- continuous elsewhere, but with a density integrating to

Example, continued (Bowers et al. (1997), 12.2.3) #

Assume now that

Distribution of

If

- with a mass at 0 because of

- mixed (if

- with a density integrating to something

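Distribution of $S$

For discrete $X$, conditioning on the number of claims gives the pmf of $S$:

$$f_S(x) = \sum_{n=0}^{\infty} \Pr[N = n]\, f_X^{*n}(x), \tag{2}$$

where $f_X^{*n}$ is the $n$-fold convolution of $f_X$.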

- This can be implemented in a table and/or in a program.

- However, if the range of

- This formula is more efficient if the number of possible outcomes for

(see Module 4)

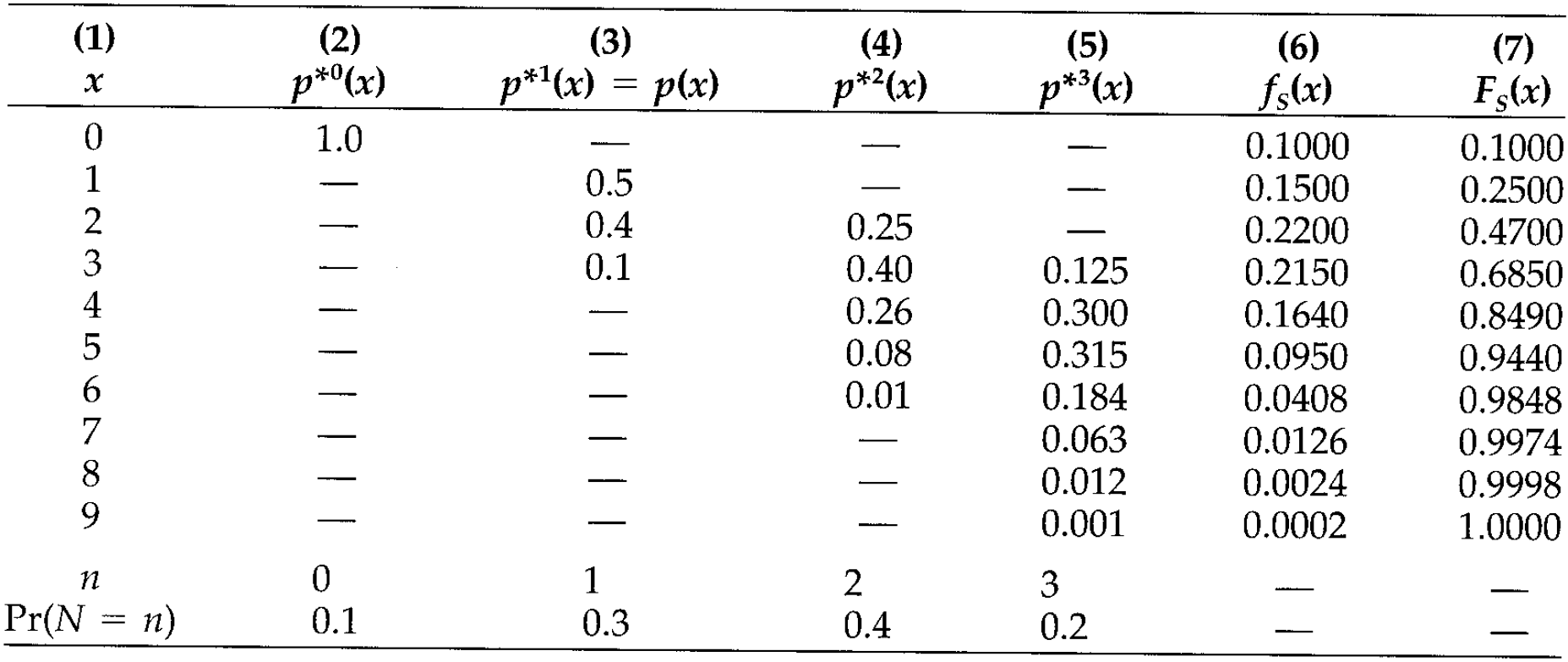

Example with tabular approach #

From Bowers et al. (1997), 12.2.2:

- The convolutions are done in the usual way.

- The number of columns depends on the range of

- The
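A minimal base R sketch of the tabular approach, assuming the severity and frequency pmfs of Bowers et al. (1997), Example 12.2.2 (the same vectors as in the `aggregateDist` example of these notes). The helper names `conv_pmf` and `compound_pmf` are hypothetical. Each pass of the loop corresponds to one convolution column of the table, weighted by the matching claim-number probability.

```r
# Convolve two pmfs supported on 0, 1, 2, ...
conv_pmf <- function(f, g) {
  out <- numeric(length(f) + length(g) - 1)
  for (i in seq_along(f)) {
    idx <- i:(i + length(g) - 1)
    out[idx] <- out[idx] + f[i] * g
  }
  out
}

# pmf of S = X_1 + ... + X_N, for frequency pmf fn and severity pmf fx
compound_pmf <- function(fn, fx) {
  s_max <- (length(fn) - 1) * (length(fx) - 1)  # largest possible value of S
  fs <- numeric(s_max + 1)
  col_n <- 1                                    # 0-fold convolution: mass 1 at 0
  for (n in seq_along(fn) - 1) {
    pos <- seq_along(col_n)
    fs[pos] <- fs[pos] + fn[n + 1] * col_n      # add Pr[N = n] times column n
    col_n <- conv_pmf(col_n, fx)                # next column: (n + 1)-fold convolution
  }
  fs
}

fx <- c(0, 0.5, 0.4, 0.1)    # Pr[X = 0], ..., Pr[X = 3]
fn <- c(0.1, 0.3, 0.4, 0.2)  # Pr[N = 0], ..., Pr[N = 3]
fs <- compound_pmf(fn, fx)
cbind(s = 0:9, fs = fs, Fs = cumsum(fs))
```

This relies on the frequency distribution having a finite range; for an unbounded claim count the sum would have to be truncated.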

Using R #

We will make extensive use of the function aggregateDist from the package actuar (Dutang, Goulet, and Pigeon 2008):

- This function allows for several different aggregate distribution approaches, which will be introduced here (and in Module 4 as the associated theory is presented).

- Here, we show how the function can be used to implement formulas (1) and (2) (using the function `convolve` in the background). This corresponds to the `method="convolution"` approach.

`actuar::aggregateDist(method="convolution")`:

- A discrete distribution for the claim amounts is required. This is input as a vector of claim amount probability masses after the argument `model.sev=`. The first element must be the probability mass at 0.

- There is no restriction on the shape of the frequency distribution, but it must have a finite range. This is input as a vector of claim number probability masses after the argument `model.freq=`. The first element must be the probability of zero claims.

- The outcome of the function is (1). Additional outputs:

  - `plot`: to get a pretty plot of the df

  - `summary`: to get summary statistics

  - `mean`: to get the mean

  - `diff`: to get the pmf

- Additional options are:

  - `x.scale`: currency units per unit of `sev` in the severity model (this allows calculations on multiples of $1)

library(actuar)  # provides aggregateDist

# Bowers 12.2.2

fy <- c(0, 0.5, 0.4, 0.1)

fn <- c(0.1, 0.3, 0.4, 0.2)

Fs <- aggregateDist("convolution", model.freq = fn, model.sev = fy)

mean(Fs)

## [1] 2.72

pmf <- c(Fs(0), diff(Fs(0:9)))

cbind(s = c(0:9), fs = pmf, Fs = Fs(0:9))

## s fs Fs

## [1,] 0 0.1000 0.1000

## [2,] 1 0.1500 0.2500

## [3,] 2 0.2200 0.4700

## [4,] 3 0.2150 0.6850

## [5,] 4 0.1640 0.8490

## [6,] 5 0.0950 0.9440

## [7,] 6 0.0408 0.9848

## [8,] 7 0.0126 0.9974

## [9,] 8 0.0024 0.9998

## [10,] 9 0.0002 1.0000

summary(Fs)

## Aggregate Claim Amount Empirical CDF:

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 0.00 2.00 3.00 2.72 4.00 9.00

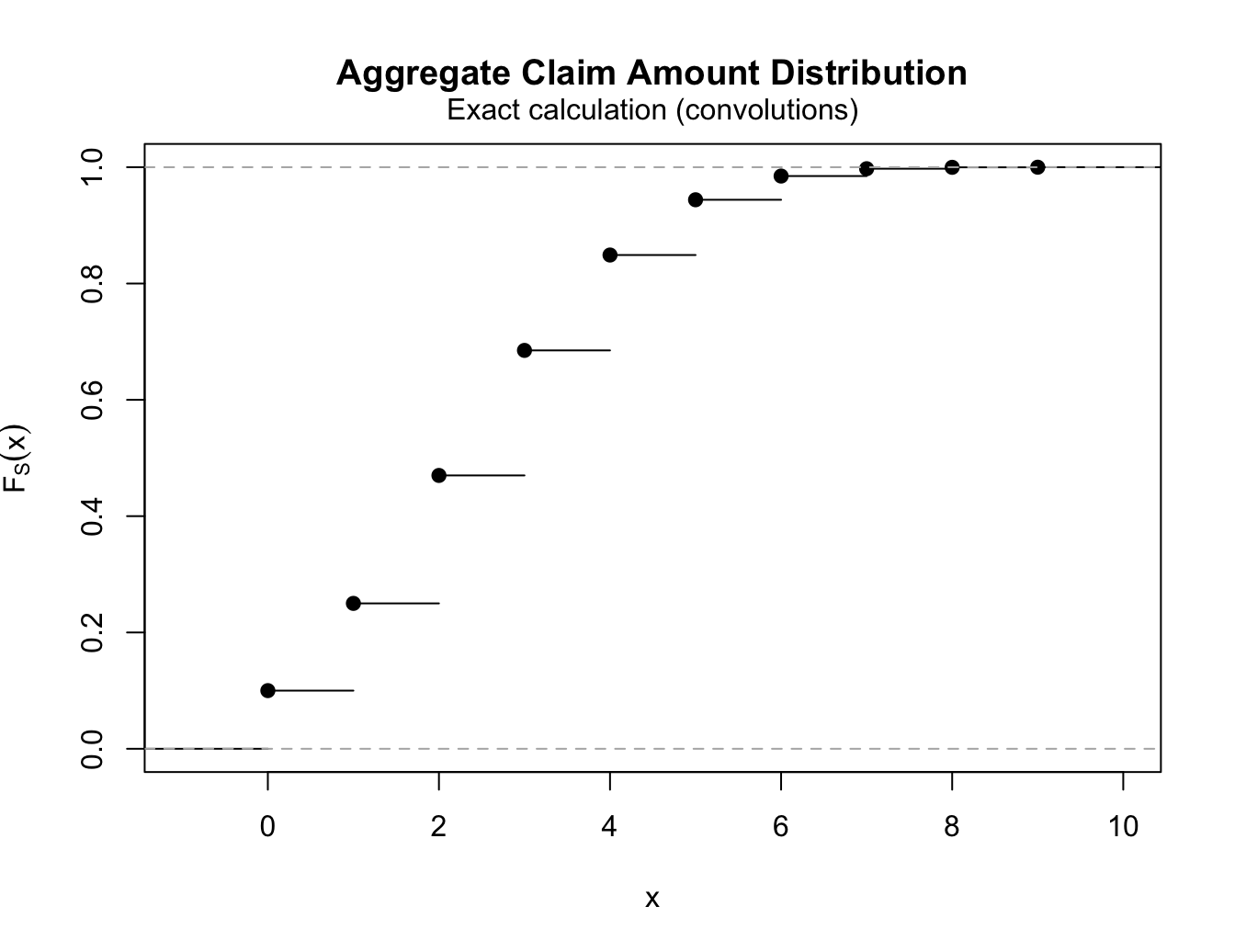

plot(Fs)

Explicit claims count distributions (MW 2.2) #

Introduction #

Exposure #

- It makes no sense to talk about frequency in an insurance portfolio without considering exposure. Chapter 4 of Werner and Modlin (2010) defines exposure as “the basic unit that measures a policy’s exposure to loss”.

- One primary criterion for choosing an exposure base is that it “should be directly proportional to expected loss”. Here we are focussing on frequency, so exposure should be something directly proportional to the expected frequency.

- Wuthrich (2023) calls exposure “volume”, denoted $v$.

Basic models for claims frequency #

- In our case, we will assume that it directly affects the likelihood of a claim to occur - the frequency - such that

- MW defines

- There are three main assumptions for

- binomial (with variance less than mean)

- Poisson (with variance equal to the mean)

- negative-binomial (a Poisson with random mean, so that variance is more than the mean)

- A summary table of those distributions is also given in Bowers et al. (1997), see Table 12.3.1 on page 376.

- These all belong to a class of distributions called

Binomial distribution #

- fixed volume

- fixed default probability

- pmf of

- same as a sum of Bernoulli (which is the case

- makes sense for homogenous portfolio with unique possible events, such as credit defaults, or deaths in a life insurance model

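- In R: `dbinom`, `pbinom`, `qbinom`, `rbinom`, where `size` is the volume and `prob` is the default probability.

- Note that the binomial coefficient is available as `choose`.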

Compound binomial model #

The total claim amount

Corollary 2.7: Assume

Exercise NLI3 considers the decomposition of

Poisson distribution #

- fixed volume

- expected claims frequency

- pmf of

- Lemma 2.9: increase volume while keeping

- In R: `dpois`, `ppois`, `qpois`, `rpois`, where `lambda` is the Poisson mean (expected claims frequency times volume)

Compound Poisson model #

The total claim amount

- The compound Poisson distribution has nice properties such as:

  - The aggregation property

  - The disjoint decomposition property

- These are reviewed in the next section, along with related new techniques for computing the distribution of

Mixed Poisson distribution #

Inhomogeneous portfolio #

- So far we have seen distributions with variance less (binomial) or exactly equal (Poisson) to the mean.

- In reality, actuarial data is often overdispersed, that is, variance is larger than mean.

- This could be due to frequency or severity, but it makes sense that some of this extra variability would come from frequency.

- If we believe in a Poisson frequency for known frequency parameter, then additional uncertainty such as heterogeneity of risks in a portfolio, uncertain conditions (weather, for instance) could be modelled with a random Poisson parameter, and could explain the extra variability.

- This is the idea of a mixed Poisson.
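The overdispersion argument can be illustrated by simulation. This is a sketch under assumed values: the Poisson mean `mu`, the Gamma shape `shape` and the choice of a Gamma multiplier with mean 1 are all illustrative and not taken from the notes.

```r
set.seed(1)
n_sim <- 1e5
mu    <- 5   # Poisson mean for a known frequency parameter (illustrative)
shape <- 2   # Gamma shape of the random multiplier (illustrative)

# Homogeneous portfolio: same Poisson mean for every risk
n_pois <- rpois(n_sim, mu)

# Inhomogeneous portfolio: Poisson mean scaled by a Gamma multiplier with mean 1
theta   <- rgamma(n_sim, shape = shape, rate = shape)
n_mixed <- rpois(n_sim, mu * theta)

rbind(poisson = c(mean = mean(n_pois),  variance = var(n_pois)),
      mixed   = c(mean = mean(n_mixed), variance = var(n_mixed)))
```

Both samples have a mean close to 5, but the mixed sample has a variance close to `mu + mu^2 / shape` = 17.5 rather than 5: the random parameter adds variability on top of the Poisson noise.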

The mixed Poisson distribution #

- Assume random

- Conditionally, given

We have then

Example #

If

- This distribution is the `pig` (Poisson-inverse Gaussian) distribution in `actuar` (so that you can use `dpig`, `ppig`, etc.); see Section 5 of the vignette “distribution” of `actuar`.

Another example, which is very famous, is

Negative-binomial distribution #

Assume

- Define

- Now,

- If conditionally, given

and dispersion parameter

Proof:

which can be recognised as a negative-binomial with probability of “failure”

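- In R: `dnbinom`, `pnbinom`, `qnbinom`, `rnbinom`, where `size` is the dispersion parameter and `prob` is the probability of success (the mean can alternatively be supplied through `mu`, and will affect the scale of the distribution).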
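A small numerical check, offered as a sketch under assumed parameter values: mixing a Poisson over a Gamma multiplier with mean 1 and shape `gam` should reproduce R's `dnbinom` with `size = gam` and mean `mu`. The names `mu`, `gam` and `mixed_pmf` are illustrative and not from the notes.

```r
mu  <- 5   # Poisson mean when the multiplier equals 1 (illustrative)
gam <- 2   # Gamma shape, i.e. the dispersion parameter (illustrative)

# Pr[N = k] by integrating the Poisson pmf against the Gamma density
mixed_pmf <- function(k) {
  integrate(function(th) dpois(k, mu * th) * dgamma(th, shape = gam, rate = gam),
            lower = 0, upper = Inf)$value
}

k <- 0:10
by_mixing  <- sapply(k, mixed_pmf)
by_nbinom  <- dnbinom(k, size = gam, mu = mu)
by_nbinom2 <- dnbinom(k, size = gam, prob = gam / (gam + mu))  # same pmf, via prob

max(abs(by_mixing - by_nbinom))   # numerical integration error only
```

The two `dnbinom` calls show how the `mu` and `prob` arguments relate: with `size = gam`, a mean of `mu` corresponds to a probability of success of `gam / (gam + mu)`.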

Interpretation #

- Alternatively, it describes the distribution of “$\lambda$’s” in the population.

- In the end we have

- This additional uncertainty is not diversifiable

(remains even for large

Compound negative-binomial model #

The total claim amount

Additional properties and applications of Poisson frequencies #

Theorem 2.12: Aggregation property

Assume

- Independent

- Alternatively (or in addition), total claims paid over

- “Bottom-up” modelling

- In Bowers et al. (1997), this is Theorem 12.4.1.

Example 12.4.1 of Bowers et al. (1997) #

Suppose that

Theorem 2.14: Disjoint decomposition property

Preliminary 1: Add LoBs in the CompPoi formulation #

Let us introduce Lines of Business (“LoB”) in the notation:

- Let the set

- Let

- We assume

- We further assume that

- Finally, we define the mixture distribution by

- Note that this matches the formulation in the aggregation property Theorem 2.12 with

- Now, define a discrete random variable

We are now ready to define the following extended compound Poisson model:

- The total claims

- In addition, we assume that

- mutually i.i.d. and independent of

- with


Preliminary 2: Partition #

- The random vector

- On this set we choose a finite sequence of sets

- Such a sequence is called a “measurable disjoint decomposition” or “partition” of

- This partition is called “admissible” for

partition above (no overlap and all-inclusive)

We have two levels of partition:

- Into LoBs:

- Claims are classified according to a sub-portfolio or LoB

- For instance: domestic motor and commercial motor

- The probability of a claim being in LoB

- The indicator for the claim to be in LoB

(with probability

- Into a second level:

- Claims are classified according to another set of criteria

- For instance: geographical areas NSW and VIC

- The probability of a claim being in geographical area

Theorem 2.14: Disjoint decomposition

Assume

- We chose an admissible partition

Then the random variable (sum of claims for partition

Thinning of the Poisson process #

- Assume that

- The disjoint decomposition theorem implies that

- For each partition

- This means that the volume remains constant in each partition, but the expected claims frequencies

- This is called thinning of the Poisson process.

If

- represent the number of claims of amount

- are mutually independent;

- are Poi

Proof: see tutorial exercise los18. Note also that this is a special case of Theorem 2.14, and is Theorem 12.4.2 of Bowers et al. (1997).
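The thinning result can be illustrated by simulation. This is only a sketch: the total Poisson mean `mu` and the partition probabilities `p` are made-up values, and each claim is assumed to fall independently into one of three partitions.

```r
set.seed(42)
n_sim <- 50000
mu <- 4                  # mean of the total claims count (illustrative)
p  <- c(0.5, 0.3, 0.2)   # probability that a claim falls in each partition

n_total <- rpois(n_sim, mu)

# Allocate the claims of each simulation to the three partitions
n1 <- rbinom(n_sim, n_total, p[1])
n2 <- rbinom(n_sim, n_total - n1, p[2] / (1 - p[1]))
n3 <- n_total - n1 - n2
counts <- cbind(n1, n2, n3)

colMeans(counts)         # close to mu * p
apply(counts, 2, var)    # also close to mu * p (Poisson: variance = mean)
cor(counts)              # off-diagonal entries close to 0
```

Means and variances agree within each partition, and the sample correlations are close to zero. This is consistent with independent Poisson counts, although a simulation is of course not a proof.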

So what?

- Sparse vector algorithm: allows us to develop an alternative method for tabulating the distribution of

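(Bowers et al. 1997, Example 12.4.2) Suppose $S$ has a compound Poisson distribution with $\lambda = 0.8$ and individual claim amount distribution

| $y_i$ | $\Pr[X = y_i]$ |
|---|---|
| 1 | 0.250 |
| 2 | 0.375 |
| 3 | 0.375 |

Compute $f_S(x) = \Pr[S = x]$ for $x = 0, 1, \dots, 6$.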

This can be done in two ways:

- Basic method (seen earlier in the lecture): requires calculating up to the 6th convolution of

- Sparse vector algorithm: requires no convolution of

Solution - Basic Method

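| $x$ | $p^{*0}(x)$ | $p^{*1}(x)$ | $p^{*2}(x)$ | $p^{*3}(x)$ | $p^{*4}(x)$ | $p^{*5}(x)$ | $p^{*6}(x)$ | $f_S(x)$ |
|---|---|---|---|---|---|---|---|---|
| 0 | 1 | - | - | - | - | - | - | 0.4493 |
| 1 | - | 0.250 | - | - | - | - | - | 0.0899 |
| 2 | - | 0.375 | 0.0625 | - | - | - | - | 0.1438 |
| 3 | - | 0.375 | 0.1875 | 0.0156 | - | - | - | 0.1624 |
| 4 | - | - | 0.3281 | 0.0703 | 0.0039 | - | - | 0.0499 |
| 5 | - | - | 0.2813 | 0.1758 | 0.0234 | 0.0010 | - | 0.0474 |
| 6 | - | - | 0.1406 | 0.2637 | 0.0762 | 0.0073 | 0.0002 | 0.0309 |
| $n$ | 0 | 1 | 2 | 3 | 4 | 5 | 6 | |
| $\Pr[N = n]$ | 0.4493 | 0.3595 | 0.1438 | 0.0383 | 0.0077 | 0.0012 | 0.0002 | |

Here $p^{*n}$ denotes the $n$-fold convolution of the claim amount pmf.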

- The convolutions are done in the usual way.

- The

- The number of convolutions (and thus of columns) will increase by 1 for each new value of

Solution - Sparse vector algorithm

Thanks to Theorem 2.12, we can write

| (1) $x$ | (2) $\Pr[N_1 = x]$ | (3) $\Pr[2N_2 = x]$ | (4) $\Pr[3N_3 = x]$ | (5) $=$ (2) $*$ (3) | (6) $=$ (4) $*$ (5) $= f_S(x)$ |
|---|---|---|---|---|---|
| 0 | 0.818731 | 0.740818 | 0.740818 | 0.606531 | 0.449329 |
| 1 | 0.163746 | 0 | 0 | 0.121306 | 0.089866 |
| 2 | 0.016375 | 0.222245 | 0 | 0.194090 | 0.143785 |
| 3 | 0.001092 | 0 | 0.222245 | 0.037201 | 0.162358 |
| 4 | 0.000055 | 0.033337 | 0 | 0.030974 | 0.049906 |
| 5 | 0.000002 | 0 | 0 | 0.005703 | 0.047360 |
| 6 | 0.000000 | 0.003334 | 0.033337 | 0.003288 | 0.030923 |
| $y_i$ | 1 | 2 | 3 | | |
| $\lambda_i$ | 0.2 | 0.3 | 0.3 | | |

The

Note that only two convolutions are needed: columns (5) and (6).
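The sparse vector calculation can be sketched in base R as follows. The inputs are those of the example (Poisson parameter 0.8 and claim amounts 1, 2, 3 with probabilities 0.250, 0.375, 0.375); the helper names `conv_trunc` and `scaled_pois` are hypothetical.

```r
lambda <- 0.8
p <- c(0.250, 0.375, 0.375)   # Pr[X = 1], Pr[X = 2], Pr[X = 3]
s_max <- 6                    # tabulate f_S on 0, ..., 6

# Convolution of two pmfs on 0, ..., s_max, truncated at s_max
conv_trunc <- function(a, b, s_max) {
  out <- numeric(s_max + 1)
  for (i in 0:s_max) for (j in 0:(s_max - i)) {
    out[i + j + 1] <- out[i + j + 1] + a[i + 1] * b[j + 1]
  }
  out
}

# pmf of y * N_y on 0, ..., s_max, where N_y ~ Poisson(rate)
scaled_pois <- function(y, rate, s_max) {
  out <- numeric(s_max + 1)
  k <- 0:(s_max %/% y)
  out[y * k + 1] <- dpois(k, rate)
  out
}

# One column per claim amount: N_1, 2 * N_2, 3 * N_3
cols <- lapply(1:3, function(y) scaled_pois(y, lambda * p[y], s_max))

f12 <- conv_trunc(cols[[1]], cols[[2]], s_max)  # first convolution
fs  <- conv_trunc(f12, cols[[3]], s_max)        # second convolution: pmf of S
round(cbind(x = 0:s_max, fs = fs), 6)
```

Only two convolutions are performed, whatever the number of claims, because each claim amount contributes one Poisson column.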

Example 2.16: Large claim separation #

- This is a very important (and convenient) application of the Disjoint decomposition property (Theorem 2.14).

- Attritional and catastrophic claims often have very different distributions (different

- The idea here is to divide the claims into different layers with different distributions:

- Small claims are modelled using a parametric distribution for which it is easy to obtain the distribution of the compound distribution, potentially even approximated with a normal distribution thanks to volume and light right tail;

- Large claims are typically modelled with a Pareto distribution with threshold

Assuming two layers:

- We choose a large claims threshold

- We define the partition

- Assume that

- We now define the small and large claims layers as

- Theorem 2.14 implies that

- The distribution of

You should be familiar with the main estimation methods:

- Method of moments

- Maximum likelihood estimation

Here the problem is slightly complicated because our observations may not be directly comparable due to varying exposures.

Assume that

- The key idea here is to find the minimum variance method of moments estimator, when the volumes across the observations can vary.

- This is what is different from a straight method of moments estimator, and explains why we need to think it through: how to deal with those volumes?

- Assume there exist strictly positive volumes

Lemma 2.26 states that the unbiased, linear estimator for

Note:

- We haven’t made any distributional assumption yet - this estimates

- The superscript “MV” stands for “minimal variance”.

Unbiased, minimal variance estimators:

- binomial case for

- Poisson case for
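As an illustration of the Poisson case, here is a sketch assuming the volume-weighted estimator $\hat\lambda = \sum_t N_t / \sum_t v_t$. This is the standard inverse-variance weighting when $Var(N_t / v_t) = \lambda / v_t$; it is stated here as an assumption rather than quoted from Lemma 2.26. The volumes `v` and the frequency `lambda` are made-up values. The code compares the variance of this estimator with that of the unweighted average of the observed frequencies $N_t / v_t$.

```r
lambda <- 0.1                        # true claims frequency (illustrative)
v <- c(100, 250, 400, 1000, 5000)    # volumes per period (illustrative)

# Theoretical variances when N_t ~ Poisson(lambda * v_t), independent over t
var_weighted   <- lambda / sum(v)                    # Var(sum(N) / sum(v))
var_unweighted <- lambda * sum(1 / v) / length(v)^2  # Var(mean(N / v))

# Monte Carlo check of both estimators
set.seed(123)
sims <- replicate(20000, {
  n <- rpois(length(v), lambda * v)
  c(weighted = sum(n) / sum(v), unweighted = mean(n / v))
})

rbind(theory    = c(weighted = var_weighted, unweighted = var_unweighted),
      simulated = apply(sims, 1, var),
      mean      = rowMeans(sims))
```

Both estimators are centred on `lambda`, but the unweighted average gives small-volume periods as much say as large ones and so has a visibly larger variance.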

More complicated, because:

Let the weighted sample variance

Estimators are identical to method of moments estimators. Or conversely, the maximum likelihood estimators are actually unbiased.

- binomial case for

- Poisson case for

Assume

The

The

A class of distributions has the following property

This is the

The exhaustive list of its members (see Wuthrich 2023 Lemma 4.7) is

| Distribution | | | |
|---|---|---|---|
| Poisson | | | |
| Neg Bin | | | |
| Binomial | | | |

Exercise: prove the results in the above table!

(Note the Negative Binomial is parametrised as per Proposition 2.20 in Wuthrich (2023) (second definition))
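The recursion can be checked numerically. The sketch below assumes the property in question is the Panjer recursion $p_k / p_{k-1} = a + b/k$, and uses the $(a, b)$ values that follow from R's own parametrisation of `dpois`, `dnbinom` and `dbinom` (which may differ from the parametrisation used in the table). All parameter values are illustrative.

```r
k <- 1:8

# Ratio p_k / p_{k-1} computed from a pmf function, for k = 1, ..., 8
ratio <- function(pmf, ...) pmf(k, ...) / pmf(k - 1, ...)

lam <- 2.5
r_pois <- cbind(ratio  = ratio(dpois, lambda = lam),
                panjer = 0 + lam / k)                  # a = 0, b = lambda

size <- 3; prob <- 0.4
r_nb <- cbind(ratio  = ratio(dnbinom, size = size, prob = prob),
              panjer = (1 - prob) + (size - 1) * (1 - prob) / k)

n <- 10; p <- 0.3
r_bin <- cbind(ratio  = ratio(dbinom, size = n, prob = p),
               panjer = -p / (1 - p) + (n + 1) * p / (1 - p) / k)

list(poisson = r_pois, negbin = r_nb, binomial = r_bin)
```

In each matrix the two columns coincide. The sign of $a$ separates the three cases: zero for the Poisson, positive for the negative-binomial, negative for the binomial.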

First three cumulants of the

| Distribution | | | |
|---|---|---|---|
| Poisson | | | |
| Neg Bin | | | |
| Binomial | | | |

Exercise:

- check these results using the cgf

- find the first 3 cumulants of

actuar and the

- The package `actuar` extends the definition above to allow for zero-truncated and zero-modified distributions.

- The Poisson, binomial and negative-binomial (and special case geometric) are all well supported in base R with the `d`, `p`, `q` and `r` functions.

- If one takes the Panjer equation for granted, then we can think of

- We introduce here the

- The reference for this section is Section 4 of the vignette “distribution” of `actuar`.

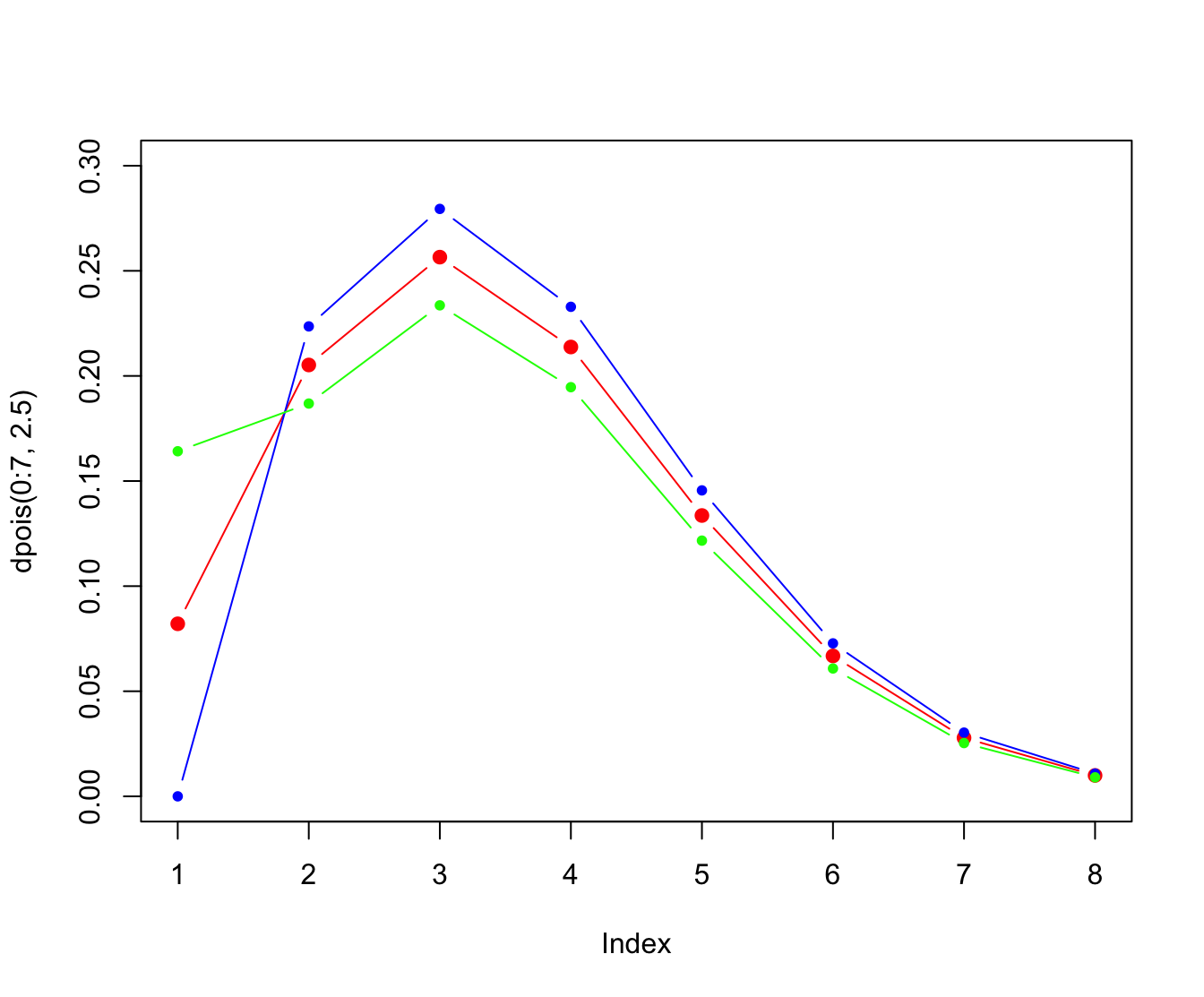

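A discrete random variable is a member of the **$(a, b, 1)$ class** of distributions if there exist constants $a$ and $b$ such that $p_k / p_{k-1} = a + b/k$ for $k = 2, 3, \dots$, where $p_k$ denotes the probability mass at $k$.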

- The recursion starts at

- The extra freedom allows the probability at zero to be set to any arbitrary number

- Setting

- Members are the zero-truncated Poisson (`actuar::ztpois`), zero-truncated binomial (`actuar::ztbinom`), zero-truncated negative-binomial (`actuar::ztnbinom`), and the zero-truncated geometric (`actuar::ztgeom`).

- Let

- `actuar` provides the `d`, `p`, `q`, and `r` functions of the zero-truncated distributions mentioned above.

- Setting

- These distributions are discrete mixtures between a degenerate distribution at zero, and the corresponding distribution from the

- Let

- Quite obviously, zero-truncated distributions are zero-modified distributions with

- Members are the zero-modified Poisson (`actuar::zmpois`), zero-modified binomial (`actuar::zmbinom`), zero-modified negative-binomial (`actuar::zmnbinom`), and the zero-modified geometric (`actuar::zmgeom`).

- `actuar` provides the `d`, `p`, `q`, and `r` functions of the zero-modified distributions mentioned above.

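library(actuar)  # provides dztpois and dzmpois

x <- 0:7  # plot against the actual support rather than the vector index

plot(x, dpois(x, 2.5), pch = 20, col = "red", ylim = c(0, 0.3),

cex = 1.5, type = "b")  # ordinary Poisson

points(x, dztpois(x, 2.5), pch = 20, col = "blue", type = "b")  # zero-truncated

points(x, dzmpois(x, 2.5, 2 * dpois(0, 2.5)), pch = 20, col = "green",

type = "b")  # zero-modified, with the mass at zero doubled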
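For readers without `actuar` installed, the zero-truncated and zero-modified Poisson pmfs can be sketched in base R. This assumes the usual construction: the zero-truncated pmf rescales the Poisson masses on $k \ge 1$ by $1 / (1 - p_0)$, and the zero-modified pmf puts a chosen mass `p0m` at zero and rescales the rest so that the total is 1. The function names `dztpois_base` and `dzmpois_base` are hypothetical.

```r
# Zero-truncated Poisson: no mass at 0, remaining masses rescaled
dztpois_base <- function(x, lambda) {
  ifelse(x == 0, 0, dpois(x, lambda) / (1 - dpois(0, lambda)))
}

# Zero-modified Poisson: mass p0m at 0, remaining masses rescaled to 1 - p0m
dzmpois_base <- function(x, lambda, p0m) {
  ifelse(x == 0, p0m, (1 - p0m) * dztpois_base(x, lambda))
}

lambda <- 2.5
x <- 0:7
p0m <- 2 * dpois(0, lambda)   # same modified mass at zero as in the plot above

round(cbind(x,
            pois = dpois(x, lambda),
            zt   = dztpois_base(x, lambda),
            zm   = dzmpois_base(x, lambda, p0m)), 4)
```

Setting `p0m = 0` in `dzmpois_base` recovers the zero-truncated pmf, which mirrors the statement that zero-truncated distributions are a special case of zero-modified ones.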

References #

Bowers, Newton L. Jr, Hans U. Gerber, James C. Hickman, Donald A. Jones, and Cecil J. Nesbitt. 1997. Actuarial Mathematics. Second. Schaumburg, Illinois: The Society of Actuaries.

Dutang, Christophe, Vincent Goulet, and Mathieu Pigeon. 2008. “actuar: An R Package for Actuarial Science.” Journal of Statistical Software 25 (7).

Werner, Geoff, and Claudine Modlin. 2010. Basic Ratemaking. Casualty Actuarial Society.

Wuthrich, Mario V. 2023. “Non-Life Insurance: Mathematics & Statistics.” Lecture notes. RiskLab, ETH Zurich; Swiss Finance Institute.